Posts

-

The Blogging Gauntlet: May 12 - I Just Watched Someone Eat a Sandwich While Blindfolded

CommentsThis is part of The Blogging Gauntlet of May 2016, where I try to write 500 words every day. See the May 1st post for full details.

Today, I watched a blindfolded man eat and critique Chicago’s two most popular Italian beef sandwiches.

Why did I do this?

A simple explanation is that I was bored. My browser was already open to YouTube, and this video appeared in my recommendations.

That’s an okay explanation, but I’m not satisfied with it. Why did I choose to watch YouTube instead of opening a Steam game? Why did I click on that video instead of any of the other YouTube recommendations? When I reflect on my decisions, I always assume there’s a reason behind my behavior. This is true even if I don’t know why I did something. In that case, it just means my subconscious influenced me in a certain way, and it’s up to me to puzzle out the pieces.

Let’s consider a few explanations that might have played into my decision.

The Novelty Explanation

I’m not 100% sure of this, but I’d guess most people don’t spend their time watching blindfolded food critics eat sandwiches. Just a hunch.

That makes this video special. How often have I seen blindfolded taste testing? Not very often. From an exploration-exploitation perspective, that places a ton of value of watching it once. Maybe I’m actually a huge fan of blindfolded taste-testing. I have to watch to make sure.

However, by that logic I should be watching every video on YouTube, because I haven’t seen anything quite like it yet. This explanation doesn’t accurately incorporate my prior beliefs. Yes, novelty played a role in my decision, but I must have already had a prior belief that this specific blindfolded taste testing video would be worth watching.

Luckily, it’s easier to explain where that belief came from.

The Nostalgia Explanation

This video isn’t of any food critic, it’s of Alton Brown, host of Good Eats. I used to watch Good Eats as a kid, because

- It taught the science behind cooking in a way that a kid could understand

- I got to watch someone cook delicious food.

Nostalgia is a very powerful force. My past enjoyment from watching Good Eats must have made me interested in watching more Alton Brown videos.

This satisfies my curiosity by enough, but for completeness let’s add one more detail.

The Celebrity Explanation

I’ve watched some of Alton Brown’s videos in the past. See Grilled Grilled Cheese and Champagne Saber Time.

Again, these are entertaining videos. And that’s the key. Alton Brown is, at heart, an entertainer. He’s a celebrity in the food world. I don’t want to get into stardom theory, because my memory of it is fairly hazy, but you could call me a fan of his. The natural instinct of fandom is to watch everything related to the fandom, and thus, Alton Brown YouTube videos.

It doesn’t matter that I’ve never been to Chicago, and have no opinions on Italian beef sandwiches. If Alton Brown is giving a review, I’m going to find it interesting, because I trust his work to be good.

Now if you’ll excuse me, I’m hungry. Time to head to a sandwich place.

-

The Blogging Gauntlet: May 11 - From Gaming, Lead Me To Optimality

CommentsThis is part of The Blogging Gauntlet of May 2016, where I try to write 500 words every day. See the May 1st post for full details.

From ignorance, lead me to truth.

From darkness, lead me to light.

From death, lead me to immortality.

(Translation of the Pavamāna Mantra)

As a kid, I played a lot of games. That hasn’t really changed since college.

In Dustforce, I’ve logged 316 hours.

In The Binding of Isaac: Rebirth, I’ve logged 212 hours.

In Dominion, I don’t know how many hours I’ve spent, but I know I’ve played about 3500 games.

This is a ton of time, to put things mildly. It’s so many hours! I could be doing so many more productive things. And sure, that’s true, but playing games is fun, I need ways to relax, and playing games doesn’t automatically make you a lazy person.

In fact, far from it. Despite all the time I’ve spent, I’d say gaming has been a net positive force in my life.

***

First, I should explain my viewpoint on games, to clarify where I’m coming from. We’re diving into philosophy land here - hold on to your hats.

All games take place in their own world.

That world follows certain rules. For example, baseball. There are two teams. There are four bases. There is a pitcher, and a shortstop, and so on. There are strikes and outs and home runs.

This is true of all worlds, but for worlds constructed by games, the rules of the game are the rules of the world. When you get three strikes, you’re out. This is a fact that you cannot argue against. It’s how the world works.

In this viewpoint, playing a game is the same as taking actions in the world, game strategy is the same as task planning, and winning a game is the same as executing a series of actions that maximizes your win percentage.

***

When viewed this way, deciding whether to buy Park Place in Monopoly is similar to choosing where to go for lunch, or what job to pick. However, there’s one key difference. Game worlds are much simpler than the real world.

In the real world, I’m a pretty quiet person. But put me in a game of Resistance, and I’ll talk a bunch. What changes? If I’m playing Resistance, everybody’s context changes. The objective is very simple: find the spies, or pretend you aren’t a spy. The rules of Resistance place very heavy constraints on everyone’s behavior, and within those constraints it’s massively easier for me to judge social behavior and make reads on whether someone is hiding something or not. And I don’t have to worry about what to talk about, because everyone’s only going to talk about Resistance. That’s something I know.

This leads into my next point. When the rules the world follows are simpler, it’s easier to act optimally. In Dominion, one of the first lessons people teach is that all cards are judged relative to one another. What matters is not how impressive a card looks, but how impressive it looks compared to cards of similar cost.

You may recognize this as a textbook example of opportunity cost.

I’ve seen other life lessons over the years. A simple strategy can be better than a complicated one. Dominion is zero-sum, so you don’t need to be good, you just need to be better than your opponent. The thousands of Dominion games I’ve played have thrown me into tens of thousands of situations where I’ve gotten to practice implementing these ideas. Sure, the context is different, but the core skill is the same.

About two weeks ago, I was playing a game where I had fallen behind due to bad shuffles. I paused to take stock. I needed a lucky break to have a shot. After thinking for a bit, I wagered the entire game on drawing cards in exactly the order I needed. It was about a 10% chance of happening, and if it went wrong I’d be so behind that I’d have to resign.

The choice wasn’t intuitive, but when I envisioned the worlds where I won the game, they all needed that 10% chance to come true. So I risked it all, and went for it.

And it did.

And I won.

On one hand, it didn’t feel like I deserved to win. On the other hand, I won. I made the perfect calculated risk, and it paid off.

Yes, I could have been doing something productive instead, but you know what? I wouldn’t trade that victory for anything else I could have done in those 15 minutes.

-

The Blogging Gauntlet: May 10 - Splitwise is NP-Complete

CommentsThis is part of The Blogging Gauntlet of May 2016, where I try to write 500 words every day. See the May 1st post for full details.

Say you go on a group trip. People foot the bill for different expenses, and need to get paid back later. Splitwise is a webapp that does all the bookkeeping for you.

As an example, consider this case. Alice, Bob, Carol, and Dave went on a trip together. Alice spent $60 and needs to get paid back by Bob, Carol, and Dave. Carol spent $20 and needs to get paid back by Bob and Dave. Dave spent $30 and needs to get paid back by Alice and Bob, and Alice’s share should be twice as big as Bob’s.

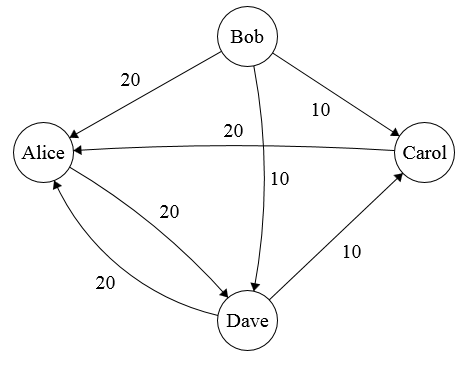

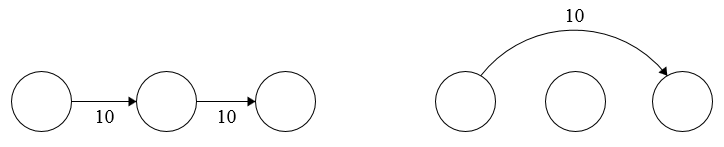

We can interpret these payments as a weighted directed graph, where people are vertices and edges are payments.

Alice needs $20 from everyone else, Carol needs $10 from Bob and Dave, and Dave needs $20 from Alice and $10 from Bob.

There are some redundancies in the graph. For example, Alice and Dave each need to pay each other $20. Since the net money between them is $0, we should just remove those edges to avoid doing extra transactions.

Splitwise does this for you. In fact, it goes one step further. Splitwise tries to minimize the total number of transactions in the graph.

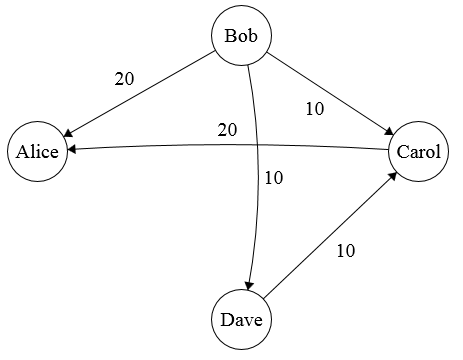

Let’s go back to the example above. Carol receives $20 in total from Bob and Dave, then pays $20 to Alice. Instead of routing their money through Carol to Alice, Bob and Dave could pay Alice directly. Carol is still net +$0 and Alice is still net +$20, but now Carol doesn’t have to pay anyone. That reduces the graph to this one.

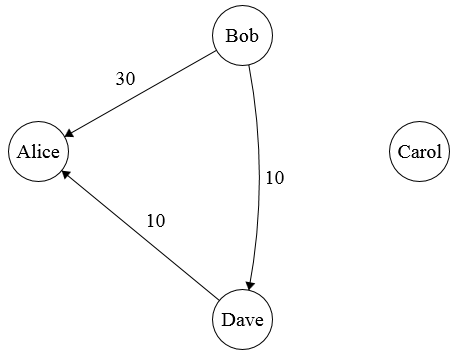

Bob is sending $10 to Dave, who is sending $10 to Alice. We can simplify this by having Bob send $10 to Alice straight.

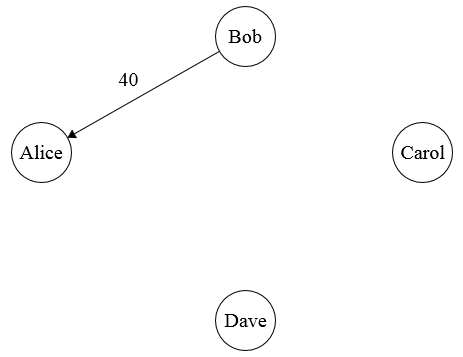

We’ve simplified the graph down to a single payment. Pretty neat, right? You can see how a tool that does this automatically could be useful.

This raises an interesting question. How would you solve the Splitwise problem in general? We formalize the problem below.

Let \(G = (V,E)\) be a weighted directed graph. Given \(G\), construct an equivalent graph \(G'\) such that every vertex \(v\) receives/gives the same amount of money it would in \(G\), and \(G'\) has as few edges as possible.

Flow Representation

My first intuition was that the Splitwise problem was very similar to a network flow problem. You want to shuffle money around a graph while minimizing costs.

This motivated looking at the min-cost flow problem. In the min-cost flow problem, sending one unit of flow through an edge \((u,v)\) has some cost. We want to minimize cost while sending enough flow through the graph.

The system on the left has \(20\) total flow, while the system on the right has \(10\) total flow. Assuming all edges have the same cost per unit flow, the latter graph is cheaper.

Min-cost flow is solvable through linear programming, but it’s not obvious that minimizing the flow in the graph is the same as minimizing the number of edges needed. So, I started looking elsewhere.

A Greedy Algorithm

Note that in flow problems, we assume only edges \((u,v)\) in the graph can send things to each other. In the Splitwise problem, anyone can send money to anybody else.

Instead of trying to reduce existing edges, let’s figure out how much everybody should pay, then add edges by ourselves. To minimize the number of edges, one natural idea is to send as much money as possible in every edge. This motivates the following algorithm.

- Read the input \((V, E)\) to compute the net balance for everyone.

- While someone owes money to somebody else:

- Find the person \(v_{max}\) who owes the most money.

- Find the person \(v_{min}\) who requests the most money.

- Have \(v_{max}\) send as much money as possible to \(v_{min}\).

- Update everyone’s net balance.

Here’s an example run. \(v_1,v_2,v_3,v_4\) owe $1,$2,$3,$4 respectively, and \(v_5,v_6\) request $5 each. The outputted set of edges is

- \(v_4\) sends $4 to \(v_5\)

- \(v_3\) sends $3 to \(v_6\). (Before this step, \(v_5\) is owed $1 and \(v_6\) is owed $5.)

- \(v_2\) sends $2 to \(v_6\). (Before this step, \(v_5\) is owed $1 and \(v_6\) is owed $2.)

- \(v_1\) sends $1 to \(v_5\).

Every party that owes money must send money at least once, which gives a lower bound of \(4\) transactions. This solution uses \(4\) transactions, so it’s optimal.

On mentioning this problem to a friend, he pointed out the following example. Have \(v_1,v_2\) owe $3, and \(v_3\) owe $5. Then have \(v_4\) request $6 and \(v_5\) request $5. The output is

- \(v_3\) sends $5 to \(v_4\)

- \(v_2\) sends $3 to \(v_5\)

- \(v_1\) sends $2 to \(v_5\)

- \(v_1\) sends $1 to \(v_4\)

However, we only need \(3\) transactions if \(v_3\) sends money to \(v_5\) in the first step.

He then proved Splitwise is NP-Complete, by showing Partition reduces to Splitwise.

NP-Completeness Proof

First, reformulate Splitwise as a decision problem.

Let \(G = (V,E)\) be a weighted directed graph. Given \(G\) and \(k\), return whether there exists an equivalent graph \(G'\) with at most \(k\) edges.

Given a \(G'\), we can verify it’s valid and has at most \(k\) edges in polynomial time. So Splitwise is in NP.

Next, construct a reduction from Partition to Splitwise. Given a list \(S\) of positive integers, we want to know if there is a partition \(S_1,S_2\) such that both sets have the same total sum.

Given \(S = \{a_1,a_2, \ldots, a_n\}\), construct \(G\) with \(n+2\) vertices \(v_1,v_2,\ldots, v_{n+2}\). Add edges such that \(v_i\) owes \(a_i\), and \(v_{n+1}, v_{n+2}\) request \(\frac{1}{2}\sum_{i=1}^n a_i\) each.

Any solution to this instance must have at least \(n\) transactions, because \(n\) people owe money. Furthermore, if the solution has exactly \(n\) transactions, \(v_i\) must send \(a_i\) for their transaction. The edges entering \(v_{n+1}\) and \(v_{n+2}\) correspond exactly to a partition of \(\{a_1,\ldots,a_n\}\) Thus, there is a solution with \(n\) transactions if and only if a partition exists. Since Partition is NP-Complete, it follows that Splitwise is NP-Complete. \(\blacksquare\)

Splitwise in Practice

It turns out the greedy algorithm gets pretty close to the optimal value in most cases. Based on a Quora post by a Splitwise founder, the site uses something similar.

So, Splitwise isn’t finding optimal solutions on a regular basis. Oh well. I still think it’s neat that an NP-Complete problem fell out at all.