
My Understanding of Politics

Let me start by saying I’m incredibly underqualified to discuss anything about politics.

On the other hand, lots of people are underqualified, and that doesn’t stop them from getting into flame wars on the Internet. And what, my understanding of politics is going to get better if I avoid thinking about it? That’s literally the opposite of what you should be doing if you want to understand politics.

So here’s how this post is going to go. I explain how I view politics and politicians. And that’s it. No follow-up. I wait for people to object to the stupider things I say, and then I don’t reply to their objections unless I want to.

Is this going to make me a political genius? No. Am I looking to become a political genius? No. All I want is to be less of a political idiot. This seems like a good way to get some easy gains in that direction.

Here we go.

The Two Rules

I have two golden rules about politics.

- Politicians are motivated by two desires: power for the sake of power, and power for the sake of making the world a better place.

- No one gets everything they want, which turns politics into a game of compromise and quid pro quo.

Let’s break it down.

The Power to Change the World

Most politicians want power. I don’t think this is a controversial claim. Try saying the reverse. “Most politicians don’t want power.” That sounds resoundingly false to me.

What do they want power for? Some people want power for the feeling of dominance over others: the euphoric feeling they get when they tell people what to do, and it actually gets done the way they asked.

Power is my mistress. I have worked too hard at her conquest to allow anyone to take her away from me.

(Napoleon)

I know some political scientists claim that having power is the only desire of politicians. That’s not entirely wrong, and it’s also not entirely true. If someone wanted only power and nothing else, they’d probably have an easier time in the private sector. (I’ll freely admit this is just my intuition talking.)

That leads to the second part of the rule. When I look at a politician, I see somebody who believes the world order is broken. When someone runs for office, their entire thesis is that they’d be a better leader than the current one. So of course they want power - the people in power are doing it wrong!

To place it in narrative terms, it’s the difference between a hero who became a hero for fame, and a hero who became a hero because they believed no one else was going to rise to the occasion.

It’s somewhat icky to admit out loud, but sometimes the first step to improving the world is a naked grab for power. If you have influence, it makes it much easier to convince people to act the way you think they ought to.

And this should go without saying, but all politicians are genuinely trying to make the world a better place. Including the ones that disagree with you. Politicians aren’t trolls. They don’t disagree with you solely to piss you off. They disagree with you because their better world looks different from your better world.

That being said, how much this altruistic desire matters depends on the politician. A town mayor is more likely to care about helping people. A senator is closer to the power-for-power’s-sake end of the spectrum. Someone running for president almost certainly has power as their primary goal. Only a very hungry sort of person would look at the presidency, look at the incredible stress it causes, consider the increased odds of death by assassination, understand that a large part of the world is going to hate them with undying passion, and conclude that yeah, they want that.

(As a corollary, I’m not bothered when people attack politicians for being opportunists. I expect this of them. Whether they do so discreetly or openly doesn’t influence my opinion by much.)

Even the most cynical interpretation of politics doesn’t sound that bad to me. When I was an intern, the city mayor visited our office to talk about the role of technology in government. During the Q&A session, an audience member asked how politicians could justify switching positions so often. The mayor said that of course politicians are going to switch positions to match what voters want. Otherwise, they wouldn’t be representing their constituents.

I saw his reply as a positive spin on this: even politicians motivated only by power have to follow the will of the voters.

If a majority of the country agrees that interracial marriage should be legal, and a politician says they don’t approve of it, they’re going to lose their next election, badly. And therefore every politician publicly agrees that interracial marriage is okay, despite the fact that as recently as 2013, thirteen percent of Americans did not approve of marriage between blacks and whites. I’m guessing the fraction is lower among members of Congress, but there are over 500 people in Congress. I think it’s reasonable to assume at least one privately disagrees with interracial marriage, but goes along with it publicly.

At some point, there’s no difference between pandering to racists and being a racist. And similarly, at some point there’s no difference between pandering to equal rights activists and believing in equal rights.

Votes are the means by which the public keeps a politician’s desire for power aligned with the public’s interests. Unfortunately, what’s best for the nation isn’t always what the nation wants, but that’s the world we live in. Someone who doesn’t pander for votes is going to lose their election to someone who does. And remember, the whole reason people go into politics is because they believe they’ll do a better job than everyone else running.

It does bother me that politicians may be saying things they don’t believe in, but considering the other upsides of democracy, I’m not too upset about the current state of affairs.

“How About a Nice Game of Politics?”

Take a bunch of people, all interested in advancing their own agenda. Then throw them into a room, and tell them they have to agree on something.

That’s what Congress feels like to me. Hence, the second rule of politics: no one gets everything they want.

The president has a lot of power, but the president isn’t a dictator. Similarly, a congressman or congresswoman is just one person in the House of Representatives. Governments are huge systems of incredible complexity. All things considered, it’s surprising they actually work.

To advance their pet issues, politicians have to balance their personal convictions with the realities of their situation. People who stick to their guns and refuse to make concessions tend not to get anything done.

[BURR]

You got more than you gave.

[HAMILTON]

And I wanted what I got.

When you got skin in the game, you stay in the game.

But you don’t get a win unless you play in the game.

(Hamilton, “The Room Where It Happens”)

That’s not to say stubbornness doesn’t have its place. Weaponized stubbornness can be a powerful tool. Consider the Obama administration. The broad narrative I heard from my decidedly Democratic bubble was that Republicans blocked Democratic legislation by being obstinate.

If you ally yourself with the blue tribe, sure, that sucks. But think about the broader play. Republicans stall the legislative process, making voters disillusioned by Democratic leadership. That gives ammunition for Republicans to use in upcoming midterm elections, which already tend to swing against the president’s party.

The Democrats know all of this, but the clock is still ticking on this power play. They now have two options: make huge concessions that get their bills through (at the cost of the bills’ original spirit), or gamble that painting Republicans as do-nothings will be enough come election time.

Which move was right? Or were there other moves? I don’t know. The point is that politics is messy. You don’t get into Congress without some level of shrewdness. Backroom deals are all a part of the game.

You can try to rise above the game, proclaiming that governments should hold themselves to higher ideals. Then you lose to the people who compromised their principles and accepted money from lobbyists.

Politicians have to learn how to navigate this mess, or else they’re not the leader they promised voters they were going to be.

That means quid pro quo. It means advocating for something you don’t believe in, because you were promised support for the bill you care about. It means accepting money from lobbyists even if you don’t want to, because you’re stuck in a political prisoner’s dilemma. Over time, politicians become exactly the kind of person they hated as teenagers.

In short, politics is where ideals go to die.

The great politicians are not the ones with the purest hearts. The great politicians are the ones who recognize the system, dive into it with disdain, and surface with the general thrust of the bill they wanted to pass.

What Guides My Vote?

Given this lens, what makes me decide I should vote for a specific politician?

My main goal is to support politicians who publicly support causes that match my beliefs. They could be lying, but guessing what they believe behind their claims is a road towards madness. It’s easier for me to trust what they say. And if they don’t actually believe in their cause, who cares? They have to support it to get votes, and if they didn’t try to support it after getting elected, they’re not getting my vote again.

My secondary goal is to support politicians who take counsel and deeply consider the best solutions to issues. Politicians are trying to make the world a better place, but they’re not going to be experts in everything. Good politicians stay informed by surrounding themselves with experts and asking them questions. Great politicians actually change their position after talking to their experts.

(This is why I’ve never been very bothered by attacks based around politicians flip-flopping. Why should I care that they voted against something four years ago, if they’re voting for it now? Is it really that unreasonable that they changed their position?)

Finally, I support politicians who look like they’ll actually get things done. I’m much fonder of proposals that push the status quo by a bit instead of a lot. It’s hard enough to pass a bill. Proposing a radical bill that most of the legislature hates sounds pointless. All it does is proclaim you have strange beliefs. That’s interesting, but it doesn’t lead to actual results.

***

Well, there you have it: my broad views on politics. I’d like to think they’re not totally wrong, but we’ll see how it goes.

If you have any objections, I’d appreciate it if you could comment. And if you agreed with what I said, I’d also appreciate comments, to get confirmation that I’m on the right track. I suspect readers of this post in particular will be more politically aware than me, and if I expect politicians to evolve their policy by asking the advice of experts, I should ask the same of myself.

-

Prioritized Contradiction Resolution

I know I said my next post was going to be my position on the existential risk of AI, but I love metaposts too much. I’m going to explain why I’m writing that post, through the lens of how I view self-consistency. Said lens may be obvious to the kinds of people who read my posts, but oh well.

At a party several months ago, Alice, Bob, and I were talking about effective altruism. (Fake names, for privacy.) Alice self-identified as part of the movement, and Bob didn’t. At one point, Bob said he approved of EA, and agreed with its arguments, but didn’t actually want to donate. From his viewpoint, diminishing marginal utility of money meant the logical conclusion was to send all disposable income to Africa. He wasn’t okay with that.

Alice replied like so.

I agree that’s the conclusion, but that doesn’t mean you have to follow it. The advice I’d give is to give up on achieving perfect self-consistency. For EA in particular, you can try to live up to that conclusion by donating some money, which is better than none, and then by donating to effective charities, which is a lot better than donating to ineffective ones. That being said, you should only be donating if it’s something you really want to do. It’s more sustainable that way.

If you’re like me, you want to have consistent beliefs that lead to consistent actions.

If you’re like me, you don’t have consistent beliefs, and you don’t execute consistent actions.

Instead you have a trainwreck of a brain that manages to squeak by every day.

Let me vent for a bit. Brains are pretty awesome. It’s pretty amazing what we can do, if you stop to think about it. Unfortunately, in many ways our brains are also complete garbage. Our intuitive understanding of probability is good at making quick decisions, but awful at solving simple probability questions. We double check information that challenges our ideas, but don’t double check information that confirms them. And if someone else does try to challenge our world view, we may double down on our beliefs instead of moving away from them, flying in the face of any reasonable model of intelligence.

What’s worse is when you get into stuff like Dunning-Kruger, where you aren’t even aware your brain is doing the wrong thing. There’s no way I actually remembered what Alice and Bob said at that party several months ago. Sure, that conversation was probably about EA, but it’s pretty likely I’m misremembering it in a way that validates one of my world views.

As a kid, I once realized I knew how to add small numbers, but had no memory of ever learning addition. Having an existential crisis at the age of seven is a fun experience, I’ll say that much.

Recently, someone I know pointed out that schizophrenics often don’t know they’re schizophrenic, and there was a small chance I was schizophrenic right now. The most surprising thing was that I wasn’t surprised.

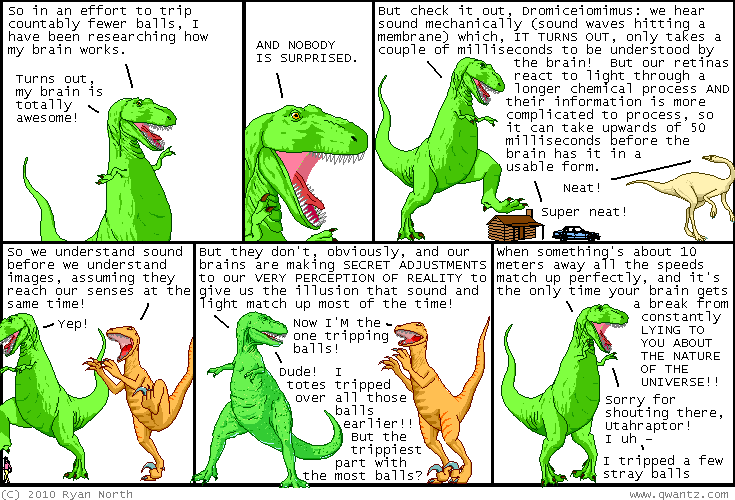

Somehow, I learned to never trust my ability to know the truth. I then internalized that belief into a deep-seated intuition that affects how I view cognition and perception. So, when I read a Dinosaur Comics strip like this one…

…I don’t trip that many balls, because they’ve mostly been tripped out.

That went on a bit of a tangent. The point is, we’re lying to ourselves on a subconscious level. We all are. Discovering we’re lying to ourselves on a conscious level shouldn’t be much worse.

***

Let’s all take a moment to quietly mourn self-consistency, who died prematurely and never had a chance.

Then, let’s grab shovels and be gravediggers, because it turns out self-consistency is achievable after all.

Perfect self-consistency is never going to happen. It’s like a hydra. Chop down one contradiction and two grow in its place. Using an imperfect reasoning engine to reason about the world is a tragic comedy in itself. At the same time, it’s worth trying to be self-consistent, and our brains are the best tools we’re going to get.

The trick to achieving self-consistency is to find a nice, quiet place, and sit down to think. You let the thoughts come, sometimes consciously, sometimes subconsciously, and wait until you understand the implicit thoughts well enough to say them out loud. You refine them over time, until you gain a better understanding of yourself, failings and all.

There are a few ways you can do this. Writing is one of them. When you write, you’re forced to try words, again and again, until you hit upon words with perfect weight. Lather, rinse, repeat, until you understand why you act the way you do.

The only problem is that this process can take forever, especially on complicated topics. When I try to do this, my brain has an annoying habit of coming to a different conclusion than I expect it to.

I was never any good at writing essays in high school. Sometimes, I think it’s because I was never good at bullshitting a textual analysis I didn’t believe. I’d write to the end of the essay, realize I was arguing a completely different point from my original thesis, and feel obligated to rewrite the start of my essay. Which then led to a rewrite of the middle, and the end, by which point I’d sometimes diverged yet again…

Because I’m not made of time, and contradictions are never-ending, I can only resolve so many of them if I want to do other things, like watch YouTube videos and play video games. Thus, after a long, circuitous story, we’ve arrived at what I call prioritized contradiction resolution. Here’s a summary of my reasoning.

- I have contradictory thoughts. That’s fine.

- I have finite time and motivation. That kinda sucks, but okay.

- It costs time and motivation to resolve contradictions, and those are my limiting factors.

- Therefore, I should focus on fixing the contradictions most important to me.

It makes sense for me to think deeply about the AI safety debate. I do research in machine learning, so people are going to expect me to have a well-thought-out opinion on the topic. (I lost track of how many times people asked for my opinion at EA Global after hearing I worked in ML, but I think it was at least ten.)

In contrast, although it’s been part of my life for over five years, I don’t need to have well-thought-out reasons to like My Little Pony. I’d like to take the time to tease apart why I like the fandom so much, but the value I’d gain from doing so doesn’t seem as large. Thus, resolving that is further down on my internal todo pile.

So here we are. Looking back, I could have just started here, and skipped all that nonsense with Alice and Bob and rants about cognitive biases.

But somehow, I find myself a lot more at peace, for taking the time to resolve any lingering contradictions I have about the way I approach resolving contradictions. When I promise you a metapost, you’re damn well getting a metapost.

Enough navel-gazing. Next post is about AI. I swear. Otherwise, I’m donating $50 to the Against Malaria Foundation.

-

One Year Later

Over the past few days, I’ve set up the current site and ported over content from the old one. You should see the old blog posts if you scroll down. Out with the old, in with the new…

[…]

As for the title - although I still don’t know what I’m doing with my life, one of my goals is to be an interesting, insightful person. Unfortunately, I’m not one yet. But if I try, maybe I can get there.

Sorta Insightful turns one year old today. To whatever meager readership I have: thanks. It means more than you’d think.

It’s been six months since I wrote my last retrospective post. Let’s see what’s changed.

***

First off, I owe people an apology. Six months ago, I said the following.

I plan to start flushing my queue of non-technical post ideas. On the short list are

- A post about Gunnerkrigg Court

- A post on effort icebergs and criticism

- A post about obsessive fandoms

- A post detailing the hilariously incompetent history of the Dominion Online implementation

I haven’t written any of those posts, and I feel awful about it. I feel especially bad about not writing the Gunnerkrigg Court post. Writing that post has been on my shortlist ever since I started this blog.

Meanwhile, I’ve forgotten what the effort icebergs post was going to cover. Last I remember, I was planning to resolve the tension between constructive criticism of bad work and respect for the effort that went into it. And there was some infographic I really liked and wanted to use? Wow, it has been a while.

The obsessive fandom post is officially turning into a post about the My Little Pony fandom in particular, but it hasn’t passed the idea stage yet. As for the Dominion post, I at least have some words written down, but I haven’t touched them since March.

All these posts that want to be free, and they’re chained to the ground, not because I can’t write them, but because I’m too lazy to write them.

Yes, I know that there aren’t really people who hate me because I haven’t written these posts, but this is one of those things where I won’t feel better until I make it up to myself.

***

Next, the neutral news. My blog still gets basically no traffic. I didn’t expect it to. One year isn’t that long to build a readership, and I didn’t go into this expecting much popularity.

Yes, in theory I could be promoting my blog more, but there are two things stopping me. One, my blog has no coherent theme. It’s exactly what I want to write about and nothing more. If it was a purely technical blog, I could submit everything to Hacker News. If it was all about machine learning, I could be reaping r/MachineLearning karma. But it’s not all technical, and it’s not all machine learning. It’s fractured, just like me.

These blog posts aren’t just words on a page. They’re my words, on my page. Marketing something so personal feels wrong, on a level that’s hard to explain. Yes, I’d like the feeling of approval that comes with popularity, but I don’t need the validation to keep writing. Just having the thoughts out there is good enough.

***

Enough bittersweet stuff. What’s gone well?

Well, for one, I started working full time, and I still have time for blog posts. In the past six months, I wrote 38 blog posts, if you include this one. Now, to be fair, 31 of those posts are from the blogging gauntlet I did in May, which had a lot of garbage, but there are definitely a few pearls worth checking out. People seem to like Appearing Skilled and Being Skilled are Very Different, although my personal favorite is Fearful Symmetry. (I’d really like to revisit Fearful Symmetry, when I have time to.)

I think I’m getting better at articulating my thoughts well the first time. I don’t have any way to measure this quantitatively, but I feel like I’ve been stumbling over my own words less often.

I continue to be pleasantly surprised that people actually bother to read things I write. You could be anywhere on the Internet, and you chose to be here? Man, you’re nuts, and I love you for it.

The Future of Sorta Insightful

The future of Sorta Insightful is pretty simple: I’ll keep writing and see how it goes. Next post is me explaining my position on the existential risk of AI. I’ve had a bunch of conflicting feelings about the topic, but going to EA Global forced me to work through the bigger ones. I’m pretty excited to fully resolve my contradictions on the subject.

I wrote the start of a really, really stupid short story about dinosaurs. In the right hands, this story could be pretty neat, but unfortunately my hands aren’t the right hands. At the same time, I’m not going to get better at storytelling unless I try, so I’ll keep at it. I hope that story sees the light of day, because the premise is so stupid that it might just wrap back around to brilliant.

The post about Gunnerkrigg Court is going to happen. I swear it will. Just, not soon. After messing up the post on Doctor Whooves Adventures, I want to make sure I get this explanation right. I love this comic sooooooo much, and it deserves a good advertisement.

As always, I promise no update schedule beyond “soon”. Bear with me on this.

Here’s to another year! It’s going to be pretty great.