
Using AI to Get the Neopets Destruct-o-Match Avatar

I have blogged about Neopets before, but a quick refresher. Neopets is a web game from the early 2000s, which recently celebrated its 25th anniversary and maintains a small audience of adult millennials. It’s a bit tricky to sum up the appeal of Neopets, but I like the combination of Web 1.0 nostalgia and long-running progression systems. There are many intersecting achievement tracks you can go for, some of which are exceedingly hard to complete. Among them are books, pet training, stamp collecting, gourmet foods, Kadoaties, and more.

The one I’ve been chasing the past few years is avatars. Neopets has an onsite forum called the Neoboards. This forum is heavily moderated, so you can only use avatars that have been created by TNT. Some are available to everyone, but most have unlock requirements. The number of avatars someone has is a quantification of how much investment they’ve put into Neopets. In my opinion, avatars are the best achievement track to chase, because their unlocks cover the widest cross-section of the site. To get all the avatars, you’ll need to do site events, collect stamps, play games, do dailies, and more. There’s a reason they get listed first in your profile summary!

The avatar I’m using right now literally took me 5 years to unlock. I’m very glad I have it.

Avatars start easy, but can become fiendishly difficult. To give a sense of how far they can go, let me quote the post I wrote on the Neopets economy.

The Hidden Tower Grimoires are books, and unlike the other Hidden Tower items, they’re entirely untradable. You can only buy them from the Hidden Tower, with the most expensive one costing 10,000,000 NP. Each book grants an avatar when read, disappearing on use.

Remember the story of the I Am Rich iOS app, that cost $1000, and did literally nothing? Buying the most expensive Grimoire for the avatar is just pure conspicuous consumption. […] They target the top 1% by creating a new status symbol.

I don’t consider myself the Neopets top 1%, but since writing that post, I’ve bought all 3 Hidden Tower books just to get the avatars…so yeah, they got me.

Avatars can be divided by category, and one big category is Flash games. These avatars all have the same format: “get at least X points in this game.” Most of the game thresholds are evil, since they were set to be a challenge based on high-score tables from the height of Neopets’ popularity. Getting an avatar score usually requires a mix of mastery and luck.

Of these avatars, one I’ve eyed for a long time is Destruct-o-Match.

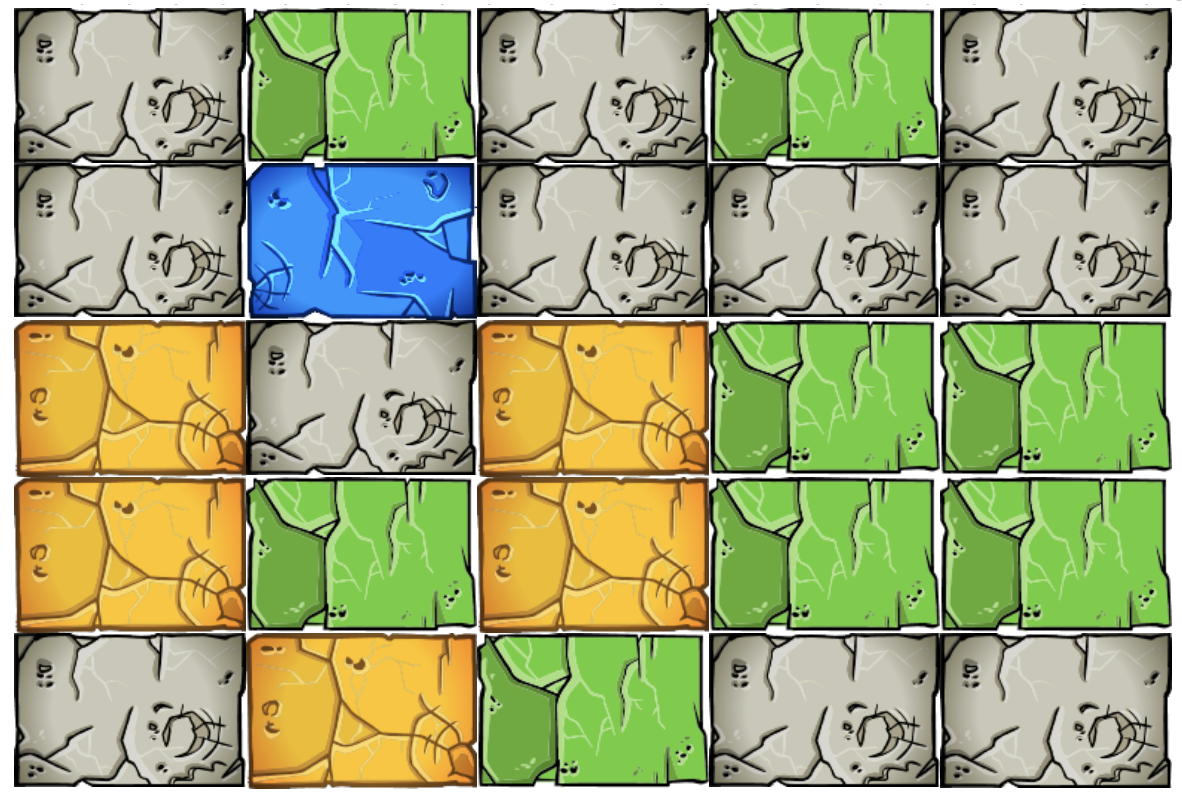

Destruct-o-Match is a block clearing game that I genuinely find fun. Given a starting board, you can clear any group of 2 or more connected blocks of the same color. Each time you do so, every other block falls according to gravity. Your goal is to clear as many blocks as possible. You earn more points for clearing large groups at once, and having fewer blocks leftover when you run out of moves. The game is made of 10 levels, starting with a 12 x 14 grid of 4 colors and ending with a 16 x 18 grid of 9 colors.

My best score as a kid was 1198 points. The avatar score is 2500. For context, top humans can reach around 2900 to 3000 points on a good run.

Part of the reason this has always been a white whale for me is that it’s almost entirely deterministic from the start state. You could, in theory, figure out the optimal sequence of moves for each level to maximize your score. Actually doing so would take forever, but you could. There’s RNG, but there’s skill too.

Destruct-o-Match can be viewed as a turn-based game, where each move is a choice of which boulders to remove. That puts it in the camp of games like Chess and Go, where AI developers have created bots that play at a superhuman level. If AI can be superhuman at Go, surely AI can be slightly-worse-than-experts at Destruct-o-Match if we try?

This is the story of me trying.

Step 0: Is Making a Destruct-o-Match AI Against Neopets Rules?

There’s a gray area for what tools are allowed on Neopets. In general, any bot or browser extension that does game actions or browser requests for you is 100% cheating. Things that are quality-of-life improvements like rearranging the site layout are fine. Anything in-between depends on if it crosses the line from quality-of-life improvement to excessive game assistance, but the line is hard to define.

I believe the precedent is in favor of a Destruct-o-Match AI being okay. Solvers exist for many existing Flash games, such as an anagram solver for Castle of Eliv Thade, Sudoku solvers for Roodoku, and a Mysterious Negg Cave Puzzle Solver for the Negg Cave daily puzzle. These all cross the line from game walkthrough to computer assisted game help, and are all uncontroversial. As long as I’m the one inputting moves the Destruct-o-Match AI recommends, I should be okay.

Step 1: Rules Engine

Like most AI applications, the AI is the easy part, and engineering everything around it is the hard part. To write a game AI, we first need to implement the rules of the game in code. We’ll be implementing the HTML5 version of the game, since it has a few changes from the Flash version that make it much easier to get a high score. The most important one is that you can earn points in Zen Mode, which removes the point thresholds that can cause you to fail levels and Game Over early in the Flash version.

To represent the game, we’ll view it as a Markov decision process (MDP), since that’s the standard formulation people use in the literature. A Markov decision process is made of 4 components:

- The state space, covering all possible states of the game.

- The action space, covering all possible actions from a given state.

- The transition dynamics, describing what next state you’ll reach after doing an action.

- The reward, how much we get for doing that action. We are trying to maximize the total reward over all timesteps.

Translating Destruct-o-Match in these terms,

- The state space is the shape of the grid and the color of each block in that grid.

- The possible actions are the different groups of matching color.

- The transition dynamics are the application of gravity after a group is removed.

- The reward is the number of points the removed group is worth, and the end score bonus if we’ve run out of moves.

The reward is the easiest to implement, following this scoring table.

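| Boulders Cleared | Points per Boulder | Bonus Points |
| --- | --- | --- |
| 2-4 | 1 | - |
| 5-6 | 1 | 1 |
| 7 | 1 | 2 |
| 8-9 | 1 | 3 |
| 10 | 1 | 4 |
| 11 | 1 | 6 |
| 12-13 | 1 | 7 |
| 14 | 1 | 8 |
| 15 | 1 | 9 |
| 16+ | 2 | - |

The end level bonus is 100 points, minus 10 points for every leftover boulder.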

| Boulders Remaining | Bonus Points |
| --- | --- |
| 0 | 100 |
| 1 | 90 |
| 2 | 80 |
| 3 | 70 |
| 4 | 60 |
| 5 | 50 |
| 6 | 40 |
| 7 | 30 |
| 8 | 20 |
| 9 | 10 |
| 10+ | 0 |

Gravity is also not too hard to implement. The most complicated and expensive logic is on identifying the possible actions. We can repeatedly apply the flood-fill algorithm to find groups of the same color, continuing until all boulders are assigned to some group. The actions are then the set of groups with at least 2 boulders. This runs in time proportional to the size of the board. However, it’s not quite that simple, thanks to one important part of Destruct-o-Match: powerups.
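As a rough illustration of the scoring tables and the flood-fill and gravity steps described above, here is a minimal Python sketch. It is not the engine used for the results in this post. The grid layout (a list of rows, row 0 at the top, `None` for empty cells), the function names, and the reading of Bonus Points as a flat per-group bonus are all assumptions. Powerups and any sideways column collapse are left out.

```python
from collections import deque

# Bonus points by group size, copied from the scoring table (sizes 2-15).
BONUS_BY_SIZE = {2: 0, 3: 0, 4: 0, 5: 1, 6: 1, 7: 2, 8: 3, 9: 3,
                 10: 4, 11: 6, 12: 7, 13: 7, 14: 8, 15: 9}


def group_points(size):
    """Points for clearing one group (assumes the bonus is flat per group)."""
    if size < 2:
        raise ValueError("a clearable group needs at least 2 boulders")
    if size >= 16:
        return 2 * size                  # 2 points per boulder, no bonus
    return size + BONUS_BY_SIZE[size]    # 1 point per boulder plus bonus


def end_level_bonus(remaining):
    """100 points minus 10 per leftover boulder, never below 0."""
    return max(0, 100 - 10 * remaining)


def find_groups(grid):
    """Flood-fill the grid into same-colour groups; keep those with 2+ cells."""
    rows, cols = len(grid), len(grid[0])
    seen, groups = set(), []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] is None or (r, c) in seen:
                continue
            colour = grid[r][c]
            group, queue = [], deque([(r, c)])
            seen.add((r, c))
            while queue:
                cr, cc = queue.popleft()
                group.append((cr, cc))
                # Only orthogonal neighbours connect boulders into a group.
                for nr, nc in ((cr - 1, cc), (cr + 1, cc), (cr, cc - 1), (cr, cc + 1)):
                    if (0 <= nr < rows and 0 <= nc < cols
                            and (nr, nc) not in seen and grid[nr][nc] == colour):
                        seen.add((nr, nc))
                        queue.append((nr, nc))
            if len(group) >= 2:
                groups.append(group)
    return groups


def remove_group(grid, group):
    """Return a new grid with `group` removed and the rest dropped straight down."""
    rows, cols = len(grid), len(grid[0])
    removed = set(group)
    new_grid = [[None] * cols for _ in range(rows)]
    for c in range(cols):
        survivors = [grid[r][c] for r in range(rows)
                     if grid[r][c] is not None and (r, c) not in removed]
        start = rows - len(survivors)    # stack survivors at the bottom
        for i, colour in enumerate(survivors):
            new_grid[start + i][c] = colour
    return new_grid
```

Each cell is visited once by the flood-fill, which is where the "time proportional to the size of the board" cost comes from.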

Powerups

Powerups are very important to scoring in Destruct-o-Match, so we need to model them accurately. Going through them in order:

The Timer Boulder counts down from 15 seconds, and becomes unclearable if not removed before then.

The Fill Boulder will add an extra line of blocks on top when cleared.

The Explode Boulder is its own color and is always a valid move. When clicked, it and all adjacent boulders (including diagonals) are removed, scoring no points.

The Overkill Boulder destroys all boulders of matching color when cleared, but you only get points for the group containing the Overkill.

The Multiplier Boulder multiplies the score of the group it's in by 3.

The Morph Boulder cycles between boulder colors every few seconds.

The Wild Boulder matches any color, meaning it can be part of multiple valid moves.

The Shuffle Boulder gives a one-shot use of shuffling boulder colors randomly. The grid shape will stay fixed. You can bank 1 shuffle use at a time, and it carries across levels.

The Undo Boulder gives a one-shot use of undoing your last move, as long as it did not involve a power-up. Similar to shuffles, you can bank 1 use at a time and it carries across levels. In the HTML5 version, you keep all points from the undone move.

We can keep the game simpler by ignoring the Shuffle and Undo powerups. These both assist in earning points, but they introduce problems. Shuffle would require implementing randomness. This would be harder for the AI to handle, because we’d have to average over the many possible future states to estimate the value of each action. It’s possible, just expensive. Meanwhile, Undos require carrying around history in the state, which I just don’t want to deal with. So, I’ll have my rules engine pretend these powerups don’t exist. This will give up some points, but remember, we’re not targeting perfect play. We just want “good enough” play to hit the avatar score of 2500. Introducing slack is okay if we make up for it later. (I can always use the Shuffle or Undo myself if I spot a good opportunity to do so later.)

Along similar lines, I will ignore the Fill powerup because it adds randomness on what blocks get added, and will ignore the Timer powerup because I want to treat the game as a turn-based game. These are harder to ignore. My plan is to commit to clearing Timer boulders and Fill boulders as soon as I start the level. Then I’ll transcribe the resulting grid into the AI and let it take over from there.

This leaves Explode, Overkill, Multiplier, Morph, and Wild. Morph can be treated as identical to Wild, since we can wait for the Morph boulder to become whatever color we want it to be. The complexity these powers add is that after identifying a connected group, we may remove boulders outside that group. A group of blue boulders with an Overkill needs to remove every other blue boulder. So, for every group identified via flood-fill, we note any powerups contained in that group, then iterate over them to define an “also-removed” set for that action. (There are a lot of minor, uninteresting details here, my favorite of which was that Wild Boulders are not the same color as Explode Boulders and Explode Boulders are not the same color as themselves.) But with those details handled, we’re done with the rules engine!

Well, almost done.

Step 2: Initial State

Although I tried to remove all randomness, one source of randomness I can’t ignore is how powerups are initially distributed.

Of the powerups, the Multiplier Boulder is clearly very valuable. Multiplying score by 3x can be worth a lot, and avatar runs are often decided by how many Multipliers you see that run. So for my AI to be useful, I need to know how often each powerup appears.

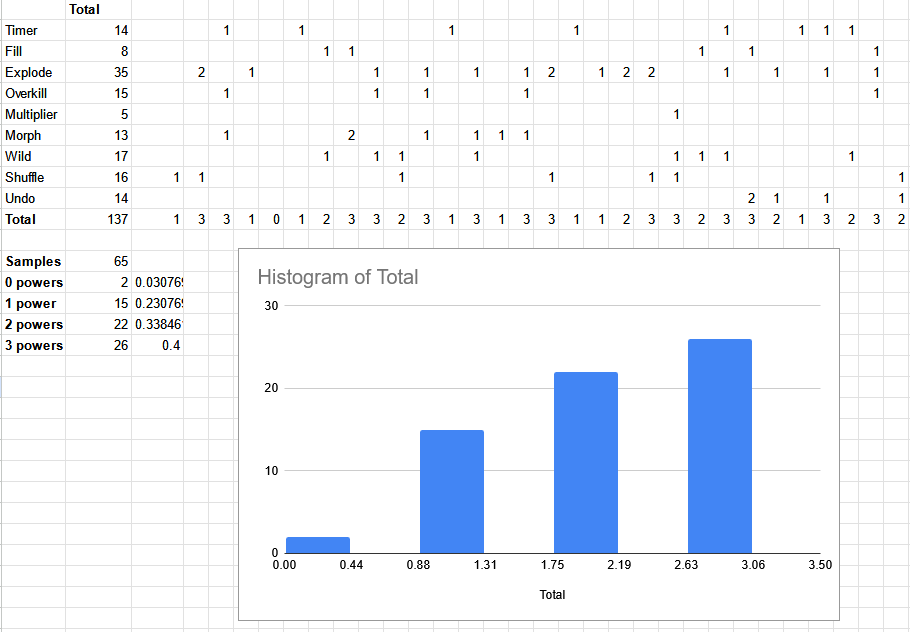

I couldn’t find any information about this on the fan sites, so I did what any reasonable person would do and created a spreadsheet to figure it out for myself.

I played 65 levels of Destruct-o-Match, tracking data for each one. Based on this data, I believe Destruct-o-Match first randomly picks a number from 0-3 for how many powerups will be on the board. If powerups were randomly selected per block, I would have seen more than 3 powerups at some point. I observed 0 powerups 3% of the time, 1 powerup 23% of the time, 2 powerups 34% of the time, and 3 powerups 40% of the time. For reverse engineering, I’m applying “programmer’s bias” - someone made this game, and it’s more likely they picked round, nice numbers. I decided to round to multiples of 5, giving 0 = 5%, 1 = 20%, 2 = 35%, 3 = 40% for powerup counts.

Looking at the powerup frequency, Explode clearly appears the most and Multiplier appears the least. Again applying some nice number bias, I assume that Explodes are twice as likely as a typical powerup, Fills are 2/3 as likely, and Multipliers are 1/3 as likely, since this matches the data pretty well.
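To make the assumed distribution concrete, here is a small sampling sketch. The count probabilities and the Explode, Fill, and Multiplier ratios are the rounded numbers above. Giving every other powerup a weight of 1, drawing types independently (so duplicates are allowed), and the names `COUNT_WEIGHTS`, `TYPE_WEIGHTS`, and `sample_powerups` are assumptions made for illustration.

```python
import random

# Rounded powerup-count distribution: P(0)=5%, P(1)=20%, P(2)=35%, P(3)=40%.
COUNT_WEIGHTS = {0: 0.05, 1: 0.20, 2: 0.35, 3: 0.40}

# Relative likelihood of each type. Explode, Fill and Multiplier follow the
# ratios in the text; weight 1 for every other type is an assumption.
TYPE_WEIGHTS = {
    "Timer": 1.0, "Fill": 2 / 3, "Explode": 2.0, "Overkill": 1.0,
    "Multiplier": 1 / 3, "Morph": 1.0, "Wild": 1.0, "Shuffle": 1.0, "Undo": 1.0,
}


def sample_powerups(rng):
    """Draw the list of powerup types for one level."""
    count = rng.choices(list(COUNT_WEIGHTS), weights=list(COUNT_WEIGHTS.values()))[0]
    # Types are drawn independently, so the same type can appear twice.
    return rng.choices(list(TYPE_WEIGHTS), weights=list(TYPE_WEIGHTS.values()), k=count)


def expected_multipliers_per_level():
    """Mean powerup count times the Multiplier share of the type weights."""
    mean_count = sum(n * p for n, p in COUNT_WEIGHTS.items())
    p_multiplier = TYPE_WEIGHTS["Multiplier"] / sum(TYPE_WEIGHTS.values())
    return mean_count * p_multiplier
```

Under these assumed weights a level has 2.1 powerups on average and Multipliers make up 1/27 of draws, which works out to roughly 0.08 Multipliers per level.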

An aside here: in testing on the Flash version, I’ve seen more than 3 powerups appear, so the algorithm used there differs in some way.

Step 3: Sanity Check

Before going any further down the rabbit hole, let’s check how much impact skill has on Destruct-o-Match scores. If the scores are mostly driven by RNG, I should go play more games instead of developing the AI.

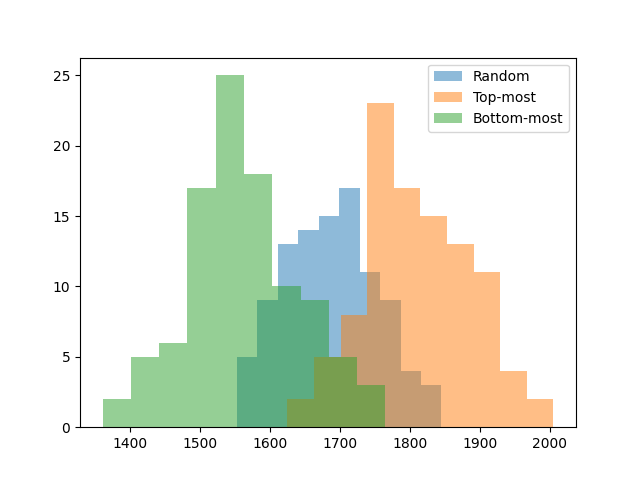

I implemented 3 basic strategies:

- Random: Removes a random group.

- Top-Down: Removes the top-most group.

- Bottom-Up: Removes the bottom-most group.

Random is a simple baseline that maps to a human player spamming clicks without thinking. (I have done this many, many times when I just want a game to be over.) Top-down and bottom-up are two suggested strategies from the JellyNeo fan page. Top-down won’t mess up existing groups but makes it harder to create new groups, since fewer blocks change location when falling. Bottom-up can create groups by causing more blocks to fall, but it can also break apart existing larger groups, which hurts bonus points.
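The three baselines can be sketched as simple selection rules over the list of clearable groups. This assumes each group is a list of `(row, col)` cells with row 0 at the top of the board, and that "top-most" means the group whose highest cell is highest. Both are illustrative choices, and the function names are hypothetical.

```python
import random


def pick_random(groups, rng):
    """Random baseline: any clearable group."""
    return rng.choice(groups)


def pick_top_down(groups):
    """Top-Down: the group whose highest cell has the smallest row index."""
    return min(groups, key=lambda g: min(r for r, _ in g))


def pick_bottom_up(groups):
    """Bottom-Up: the group whose lowest cell has the largest row index."""
    return max(groups, key=lambda g: max(r for r, _ in g))
```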

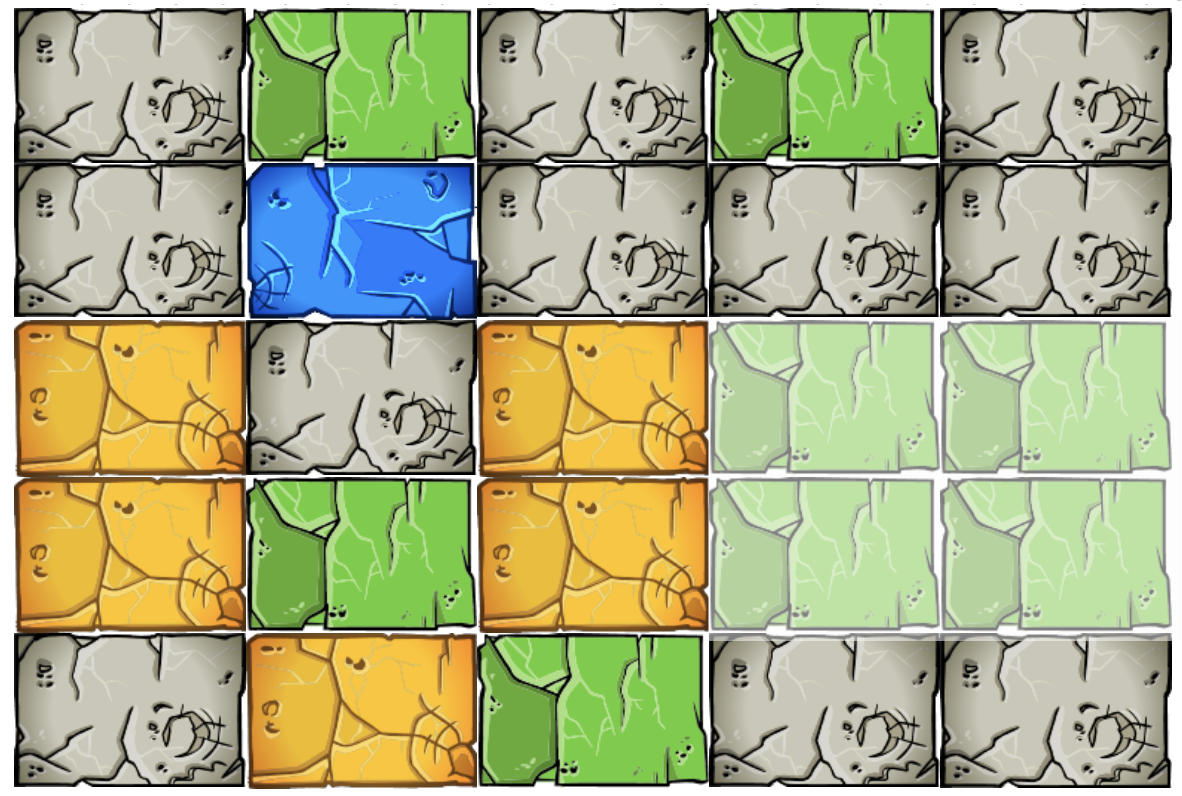

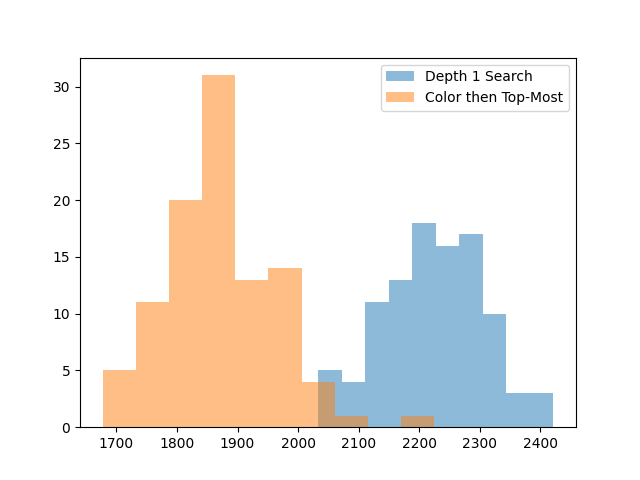

For each strategy, I simulated 100 random games and plotted the distribution of scores.

The good news is that strategy makes a significant difference. Even the simple top-down strategy is 100 points better than random. This also lets us know that the bottom-up strategy sucks. Which makes sense: bottom-up strategies introduce chaos, it’s hard to make large groups of the same color in chaos, and we want large groups for the bonus points. For human play, this suggests it’s better to remove groups near the top unless we have a reason not to.

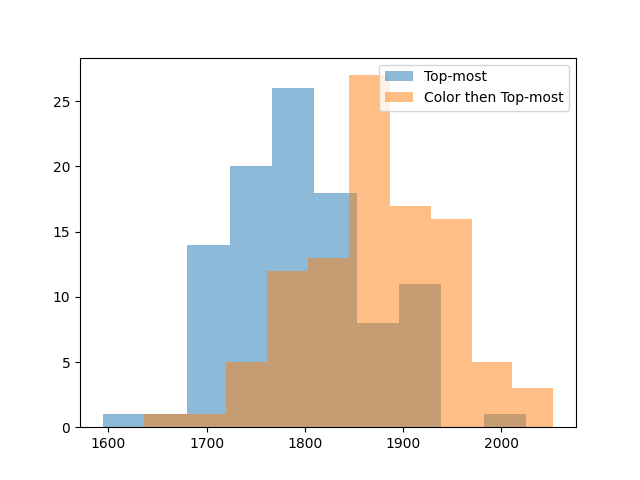

One last recommended human strategy is to remove blocks in order of color. The idea is that if you leave fewer colors on the board, the remaining groups will connect into large groups more often, giving you more bonus points. We can try this strategy too, removing groups in color order, tiebreaking with the top-down heuristic that did well earlier.

Removing in color order gives another 100 points on average. We can try one more idea: if bottom-up is bad because it breaks apart large groups, maybe it’d be good to remove large groups first, to lock in our gains. Let’s remove groups first in order of color, then in order of size, then in order of which is on top.

This doesn’t seem to improve things, so let’s not use it.

These baselines are all quite far from the avatar score of 2500. Even the luckiest game out of 100 attempts only gets to 2100 points. It is now (finally) time to implement the AI.

Step 4: The AI

To truly max the AI’s score, it would be best to follow the example of AlphaZero or MuZero. Those methods work by running a very large number of simulated games with MCTS, training a neural net to predict the value of boards from billions of games of data, and using that neural net to train itself with billions more games.

I don’t want to invest that much compute or time, so instead we’re going to use something closer to the first chess AIs: search with a handcoded value function. The value of a state \(s\) is usually denoted as \(V(s)\), and is the estimated total reward if we play the game to completion starting from state \(s\). If we have a value function \(V(s)\), then we can find the best action by computing what’s known as the Q-value. The Q-value \(Q(s,a)\) is the total reward we’ll achieve if we start in state \(s\) and perform action \(a\), and can be defined as.

\[Q(s,a) = reward(a) + Value(\text{next state}) = r + V(s')\]

Here \(s'\) is the common notation for “next state”. The action \(a\) with largest Q-value is the action the AI believes is best.

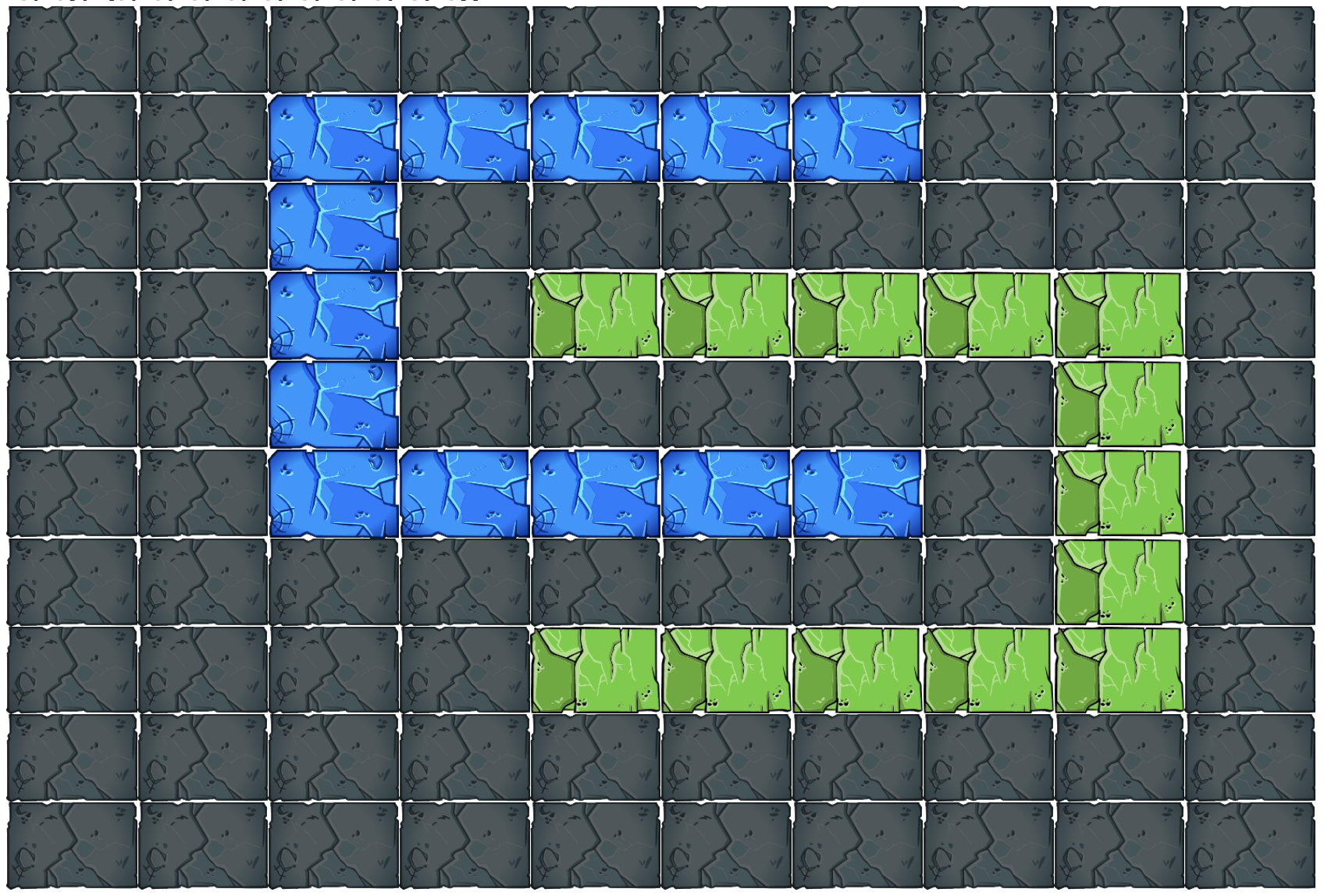

So how do we estimate the value? We can conservatively estimate a board’s value as the sum of points we’d get from every group that exists within it, plus the end-level bonus for every boulder that isn’t in one of those groups. This corresponds to scoring every existing group and assuming no new groups will be formed if all existing groups are removed. Doing so should be possible on most boards, if we order the groups such that a group is removed only after every group above it has been removed. Unfortunately, there are some edge cases where it isn’t possible, such as this double-C example, where groups depend on each other in a cycle.

In this example, we cannot earn full points for both the blue group and green group, because removing either will break apart the other.

We could determine if it’s possible to score every group by running a topological sort algorithm, but for our purposes we only need the value function to be an approximation, and we want the value function to be fast. So, I decided to just ignore these edge cases, for the sake of speed.

We can reuse the logic we used to find every possible action to find all groups in the grid. This gives a value estimate of

\[V(s) = \sum_{a} reward(a)\]

Here is our new method for choosing actions.

- From the current state \(s\), compute every possible action \(a\).

- For each action \(a\), find the next state \(s'\) then compute Q-value \(Q(s,a) = r + V(s')\).

- Pick the action with best Q-value.

- Break ties with the color then top-most heuristic that did best earlier.
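The steps above can be sketched generically. To keep the example self-contained it uses a 1-D stand-in for the game, not the real rules engine: the state is a string of colour letters, an action clears a run of 2 or more equal letters, the gap closes, and the reward is the number of letters cleared. The toy game, the function names, and resolving ties by taking the first best action (instead of the color then top-most rule) are all assumptions.

```python
from itertools import groupby


def runs(row):
    """Legal actions in the toy game: (start, length) of each run of 2+ letters."""
    out, i = [], 0
    for _, grp in groupby(row):
        n = len(list(grp))
        if n >= 2:
            out.append((i, n))
        i += n
    return out


def step(row, run):
    """Clear a run and close the gap; the reward is the number cleared."""
    start, n = run
    return row[:start] + row[start + n:], n


def value(row):
    """V(s): total reward of every group that currently exists."""
    return sum(n for _, n in runs(row))


def q_value(state, action, step, value):
    """1-step Q-value: r + V(s')."""
    next_state, reward = step(state, action)
    return reward + value(next_state)


def greedy_action(state, legal_actions, step, value):
    """Pick the action with the best 1-step Q-value, or None if stuck."""
    actions = legal_actions(state)
    if not actions:
        return None
    return max(actions, key=lambda a: q_value(state, a, step, value))
```

For the row `"AABBA"`, clearing `BB` first merges the three `A`s into one run, so `greedy_action("AABBA", runs, step, value)` prefers `(2, 2)` over `(0, 2)`.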

Even though this only looks 1 move ahead, it’s quite effective. By a lot.

This is enough to give a 400 point boost on its own. This gain was big enough that I decided to try playing a game with this AI, and I actually got pretty close to winning on my first try, with 2433 points.

The reason I got so many points compared to the distribution above is a combination of using Shuffles for points and beneficial bugs. I don’t know how, but I got a lot more points from the end-level bonuses in levels 3 and 4 than I was supposed to. I noticed that levels 3 and 4 are only 12 x 15 instead of 13 x 15 from the Flash version, so there may be some other bug going on there.

Still, this is a good sign! We only need to crank out 70 more points. If we can get this close only looking 1 move ahead, let’s plan further out.

Step 5: Just Go Harder

Earlier, we defined the Q-value as

\[Q(s,a) = reward(a) + Value(\text{next state})\]

This is sometimes called the 1-step Q-value, because we check the value after doing 1 timestep. We can generalize this to the n-step Q-value by defining it as

\[Q(s,a_1,a_2,\cdots,a_n) = r_1 + r_2 + \cdots + r_n + Value(\text{state after n moves})\]

Here each reward \(r_i\) is the points we get from doing action \(a_i\). We take the max over all sequences of \(n\) actions, run the 1st action in the best sequence, then repeat.

One natural question to ask here is, if we’ve found the best sequence of \(n\) moves, why are we only acting according to the 1st move in that sequence? Why not run all \(n\) actions from that sequence? The reason is that our value estimate is only an approximation of the true value. The sequence we found may be the best one according to that approximation, but that doesn’t make it the actual best sequence. By replanning every action, the AI can be more reactive to the true rewards it gets from the game.

This is all fine theoretically, but unfortunately an exhaustive search of 2 moves ahead is already a bit slow in my engine. This is what I get for prioritizing ease of implementation over speed, and doing everything in single-threaded Python. It is not entirely my fault though. Destruct-o-Match is genuinely a rough game for exhaustive search. The branching factor \(b\) of a game is the number of valid moves available per state. A depth \(d\) search will require considering \(b^d\) sequences of actions, which grows exponentially in the depth with the branching factor as the base, so \(b\) is often considered a proxy for search difficulty.

To set a baseline, the branching factor of Chess averages 31 to 35 legal moves. In my analysis, the start board in Destruct-o-Match has an average of 34 legal moves. By that measure, the start of a Destruct-o-Match level is about as expensive to search as Chess. This is actually so crazy to me, and makes me feel less bad about working on this. If people can dedicate their life to Chess, I can dedicate two weeks to a Destruct-o-Match side project.

To handle this branching factor, we can use search tree pruning. Instead of considering every possible action, at each timestep, we should consider only the \(k\) most promising actions. This could miss some potentially high reward sequences if they start with low scoring moves, but it makes it more practical to search deeper over longer sequences. This is often a worthwhile trade-off.

Quick aside: in college, I once went to a coding competition where we split into groups, and had to code the best Ntris bot in 4 hours. I handled game logic, while a teammate handled the search. Their pruned search tree algorithm destroyed everyone, winning us $500 in gift cards. It worked well then, so I was pretty confident it would work here too.

Here’s the new algorithm, assuming we prune all but the top \(k\) actions.

- From the current state, compute every possible action.

- For each action, find the next state \(s'\) and the 1-step Q-value \(Q(s,a)\).

- Sort the Q-values and discard all but the top \(k\) actions \(a_1, a_2, \cdots, a_k\).

- For each next state \(s'\), repeat steps 1-3, again only considering the best \(k\) actions from that \(s'\). When estimating Q-value, use the n-step Q-value, for the sequence of actions needed to reach the state we’re currently considering.

- By the end, we’ll have computed a ton of n-step Q-values. After hitting the max depth \(d\), stop and return the first action of the sequence with best Q-value.
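Here is one way the pruned search above could look in code, again on a 1-D stand-in game (a string of colour letters where clearing a run of 2 or more equal letters closes the gap and scores one point per letter). This is a sketch under assumptions, not the implementation behind the numbers in this post: the toy game, the names, and the choice to take the best Q-value over sequences of every length up to the max depth (so that boards which run out of moves early are still scored) are all illustrative.

```python
from itertools import groupby


def runs(row):
    """Legal actions in the toy game: (start, length) of each run of 2+ letters."""
    out, i = [], 0
    for _, grp in groupby(row):
        n = len(list(grp))
        if n >= 2:
            out.append((i, n))
        i += n
    return out


def step(row, run):
    """Clear a run and close the gap; the reward is the number cleared."""
    start, n = run
    return row[:start] + row[start + n:], n


def value(row):
    """V(s): total reward of every group that currently exists."""
    return sum(n for _, n in runs(row))


def pruned_search(state, legal_actions, step, value, depth, k):
    """Return (best n-step Q-value, first action of the best sequence found)."""
    best_q, best_first = float("-inf"), None
    # Each frontier entry: (state, reward collected so far, first action taken).
    frontier = [(state, 0, None)]
    for _ in range(depth):
        next_frontier = []
        for s, total, first in frontier:
            scored = []
            for a in legal_actions(s):
                s2, r = step(s, a)
                q = total + r + value(s2)          # n-step Q-value of this path
                scored.append((q, s2, total + r, a if first is None else first))
            # Keep only the k most promising actions from this node.
            scored.sort(key=lambda t: t[0], reverse=True)
            for q, s2, new_total, f in scored[:k]:
                if q > best_q:
                    best_q, best_first = q, f
                next_frontier.append((s2, new_total, f))
        frontier = next_frontier
    return best_q, best_first
```

On the row `"ABBACCA"`, a depth 1 search estimates 6 points for either opening move, while a depth 2 search finds a 7 point line, because the second move can merge the three `A`s into one run.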

If we prune to \(k\) actions in every step, this ends up costing \(b\cdot k^d\) time. There are \(k^d\) different nodes in the search tree and we need to consider the Q-values of all \(b\) actions from each node in that tree to find the best \(k\) actions for the next iteration. The main choices now are how to set \(k\) and max depth \(d\).

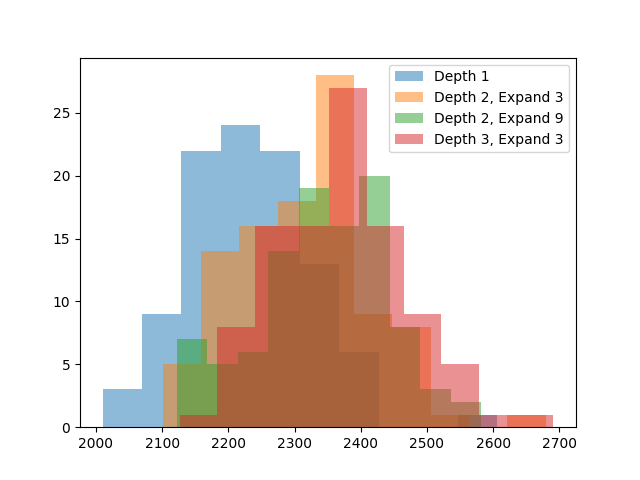

To understand the trade-off better, I did 3 runs. One with

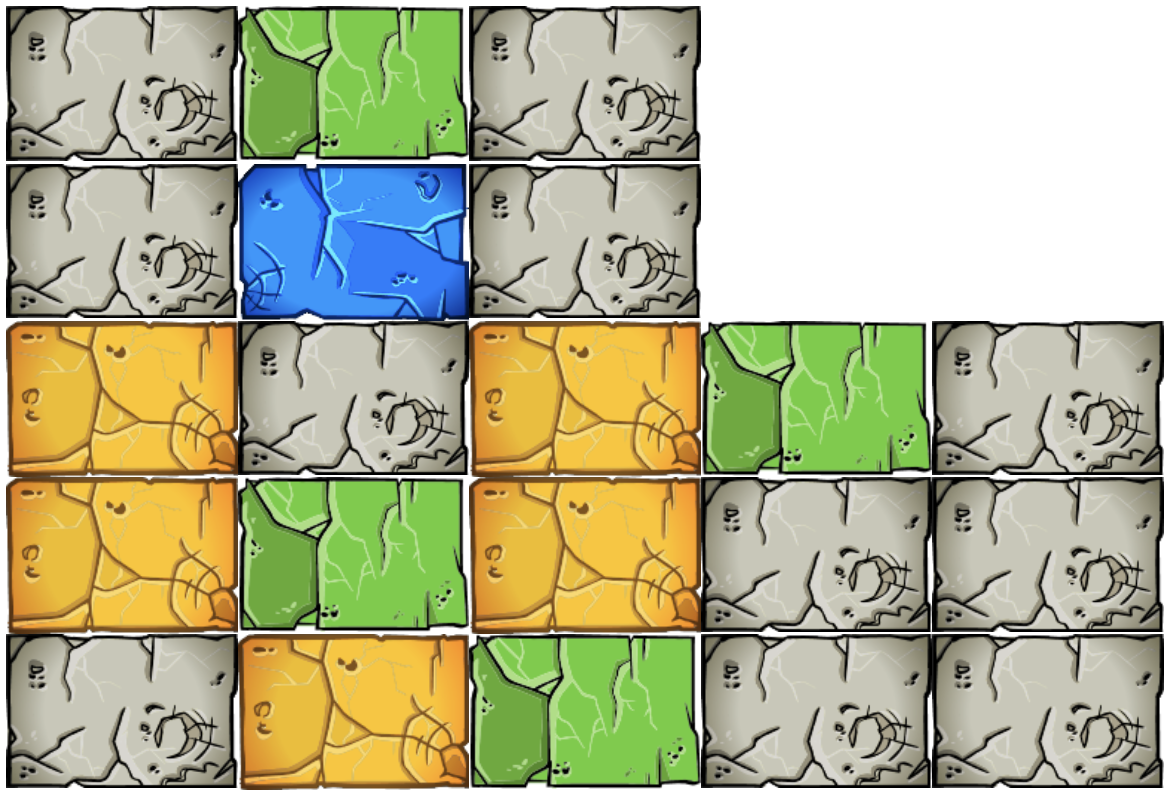

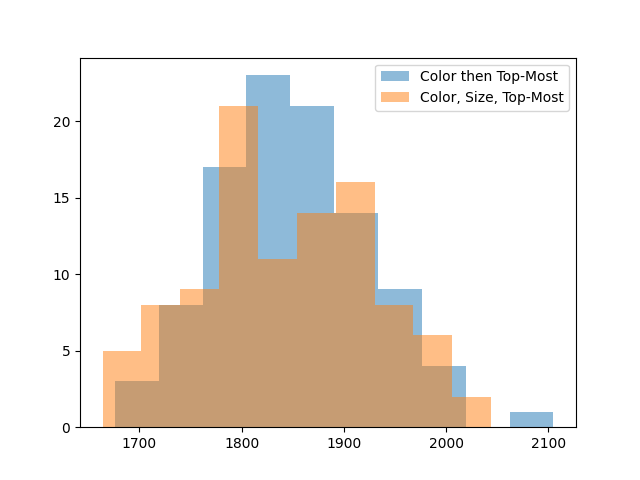

Depth=2, Expand=3, one withDepth=2, Expand=9, and one withDepth=3, Expand=3. The first is to verify there are gains from increasing search depth at all. The second two are 2 compute-equivalent configurations, one searching wider and the other searching deeper.

The gains are getting harder to spot, so here are more exact averages.

Depth 1: 2233.38

Depth 2, Expand 3: 2318.32

Depth 2, Expand 9: 2343.34

Depth 3, Expand 3: 2373.37

Searching deeper does help, it is better than searching wider, and the Depth 3, Expand 3 configuration is 140 points better than the original depth 1 search. This should be enough for an avatar score. This search is also near the limit of what I can reasonably evaluate. Due to its exponential nature, it’s very easy for search to become expensive to run. The plot above took 7 hours to simulate.

For the final real game, I decided to use Depth 3, Expand 6. There’s no eval numbers for this, but increasing the number of considered moves can only help. In local testing, Depth 3 Expand 6 took 1.5 seconds on average to pick an action on my laptop, which was just long enough to feel annoying, but short enough to not be that annoying.

After starting up the AI, I played a Destruct-o-Match game over the course of 3 hours. By my estimate, the AI was only processing for 15 minutes, and the remaining 2 hr 45 min was me manually transcribing boards into the AI and executing the moves it recommended. The final result?

An avatar score! Hooray! If I had planned ahead more, I would have saved the game state sequence to show a replay of the winning game, but I didn’t realize this until later, and I have no interest in playing another 3 hour Destruct-o-Match game.

Outtakes and Final Thoughts

You may have noticed I spent almost all my time transcribing the board for the AI. My original plan was to use computer vision to auto-convert a screenshot of the webpage into the game state. I know this is possible, but unfortunately I messed something up in my pattern-matching logic and failed to debug it. I eventually decided that fixing it would take more time than entering boards by hand, given that I was only planning to play the game 1-2 times.

After I got the avatar, I ran a full evaluation of the Depth 3, Expand 6 configuration overnight. It averages 2426.84 points, another 53 points over the Depth 3, Expand 3 setting evaluated earlier. Based on this, you could definitely squeeze more juice out of search if you were willing to wait longer.

Some final commentary, based on studying games played by the AI:

- Search gains many points via near-perfect clears on early levels. The AI frequently clears the first few levels with 0-3 blocks left, which adds up to a 200-300 point bonus relative to baselines that fail to perfect clear. However, it starts failing to get clear bonuses by around level 6. Based on this, I suspect Fill powerups are mostly bad until late game. Although Fills give you more material to score points with, it’s not worth it if you end with even a single extra leftover boulder that costs you 10 points later. It is only worth it once you expect to end levels with > 10 blocks left.

- The AI jumps all over the board, constantly switching between colors and groups near the top or bottom. This suggests there are a lot of intentional moves you can make to get boulders of the same color grouped together, if you’re willing to calculate it.

- The AI consistently assembles groups of 16+ boulders in the early levels. This makes early undos very strong, since they are easily worth 32-40 points each. My winning run was partly from a Multiplier in level 1, but was really carried by getting two Undos in level 2.

- Whoever designed the Flash game was a monster. In the original Flash game, you only get to proceed to the next level if you earn enough points within the current level, with the point thresholds increasing the further you get. But the AI actually earns fewer points per level as the game progresses. My AI averages 203 points in level 7 and 185 points in level 9. If the point thresholds were enforced in the HTML5 version, the AI would regularly fail by level 7 or 8.

I do wonder if I could have gotten the avatar faster by practicing Destruct-o-Match myself, instead of doing all this work. The answer is probably yes, but getting the avatar this way was definitely more fun.


Late Takes on OpenAI o1

I realize how late this is, but I didn’t get a post out while o1 was fresh, and still feel like writing one despite it being cold. (Also, OpenAI just announced they’re going to ship new stuff starting tomorrow so it’s now or never to say something.)

OpenAI o1 is a model release widely believed (but not confirmed) to be a post-trained version of GPT-4o. It is directly trained to spend more time generating and exploring long, internal chains of thought before responding.

Why would you do this? Some questions may fundamentally require more partial work or “thinking time” to arrive at the correct answer. This is considered especially true for domains like math, coding, and research. So, if you train a model to specifically use tokens for thinking, it may be able to reach a higher ceiling of performance, at the cost of taking longer to generate an answer.

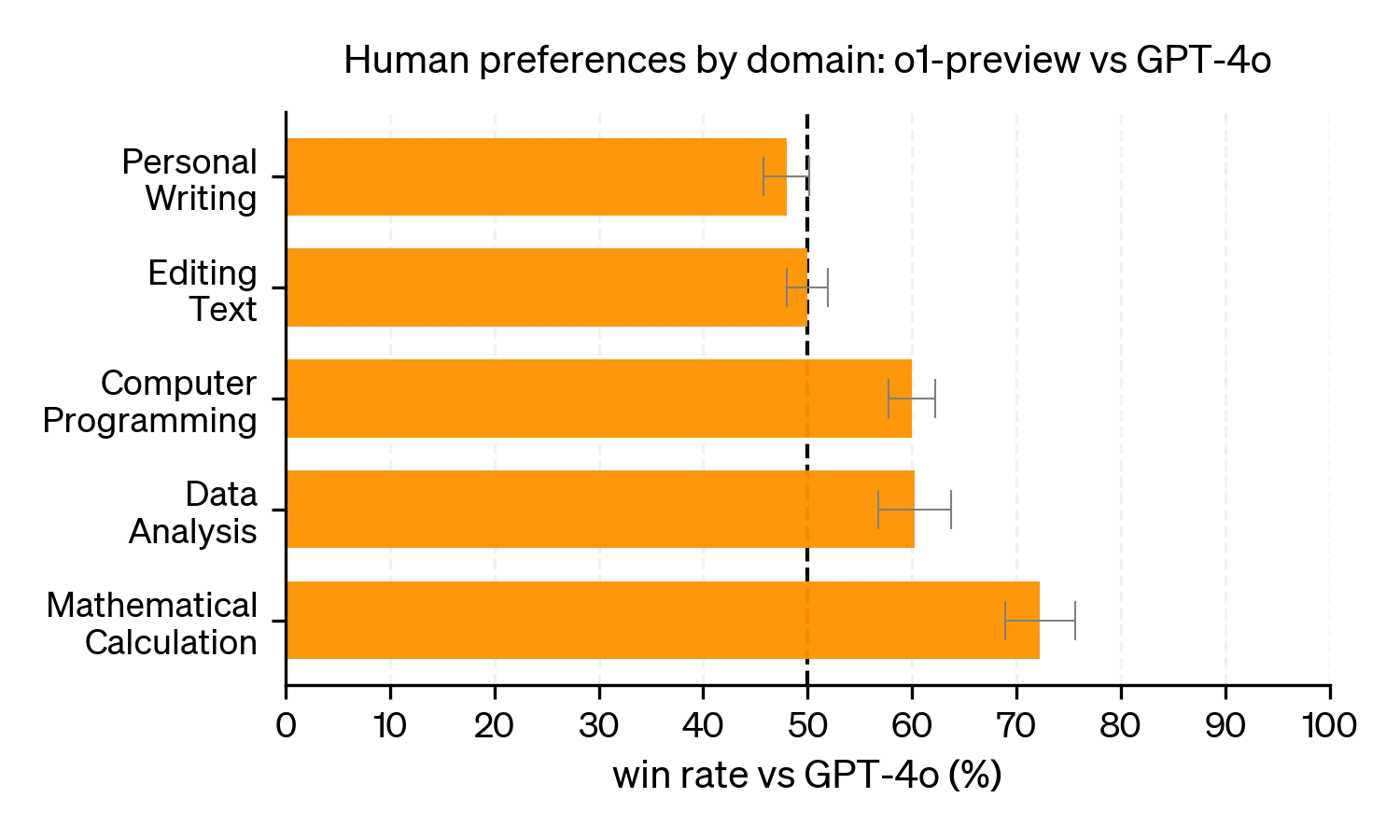

Based on the launch post and system card, this has been the case. o1 was not preferred over GPT-4o on simpler questions on editing text, but was more preferred on programming and math calculation questions.

The Compute View

When GPT-4 was first announced, OpenAI did not disclose how it was implemented. Over time, the broad consensus (from leaks and other places) has become that GPT-4 was a mixture-of-experts model, mixing 8 copies of a 220B parameter model.

This was a bit disappointing. To quote George Hotz, “mixture[-of-experts] models are what you do when you run out of ideas”. A mixture of experts model is “supposed” to work. I say “supposed” in quotes, because things are never quite that easy in machine learning, but for some time mixture-of-experts models were viewed as a thing you used if you had too much compute and didn’t know what to do with it.

I think this view is a bit too simple. The Switch Transformers paper showed MoE models had different scaling properties and could be more compute efficient. But, broadly, I’d say this is true. If you think of compute as water filling a bowl, mixture models are a way to add a dimension to how you store water. The argument for them is that instead of building a bigger bowl, you should just use more bowls.

o1 feels similar to me. The analogy stops working here, but the dimension is the amount of compute you use at test-time - how many response tokens you generate for each user query. One way to describe o1 is that it may not be as good if you gave it the same 1-shot budget as a standard LLM, but it’s been trained to be better at spending larger compute budgets. So, as long as you commit to giving o1 more test-time compute, it can do better than just taking the best of N independent attempts from GPT-4o.

A shift towards more test-time compute increases the affordances of where compute can be allocated. In general, if you have many compounding factors that multiply each other’s effectiveness, then it’s best to spread attention among all of them rather than going all-in on one. So if you believe compute is the bottleneck of LLMs, you ought to be looking for these new dimensions where compute can be channeled, and push the compute along those channels.

Scaling Laws View

In May 2024, Noam Brown from OpenAI gave a talk at the University of Washington on the power of planning and search algorithms in AI, covering his work on expert-level systems playing Texas Hold’em and Diplomacy. Notably, most talks in the lecture series have their recordings uploaded within a few days. This one was not uploaded until September, after o1’s release. The current guess is that Noam or OpenAI asked and got a press embargo. If true, that makes this video especially useful for understanding OpenAI o1.

(It is uniquely dangerous to link an hour-long research talk in the middle of a blog post, because you may watch it instead of reading the post. It is good though. Do me a favor, watch it later?)

In this talk, Noam mentions an old paper on Scaling Laws in Board Games. This paper was from a hobbyist (Andy Jones), studying scaling laws in toy environments. I remember reading this paper when it came out, and I liked it. I then proceeded to not fully roll out the implications. Which I suppose is part of why I’m talking about o1 rather than building o1.

I think it’s really hard to put yourself in the mindset of - everything’s obvious in retrospect. But at the time, it was not obvious this would work so well.

(Noam on computer poker, and other planning-based methods.)

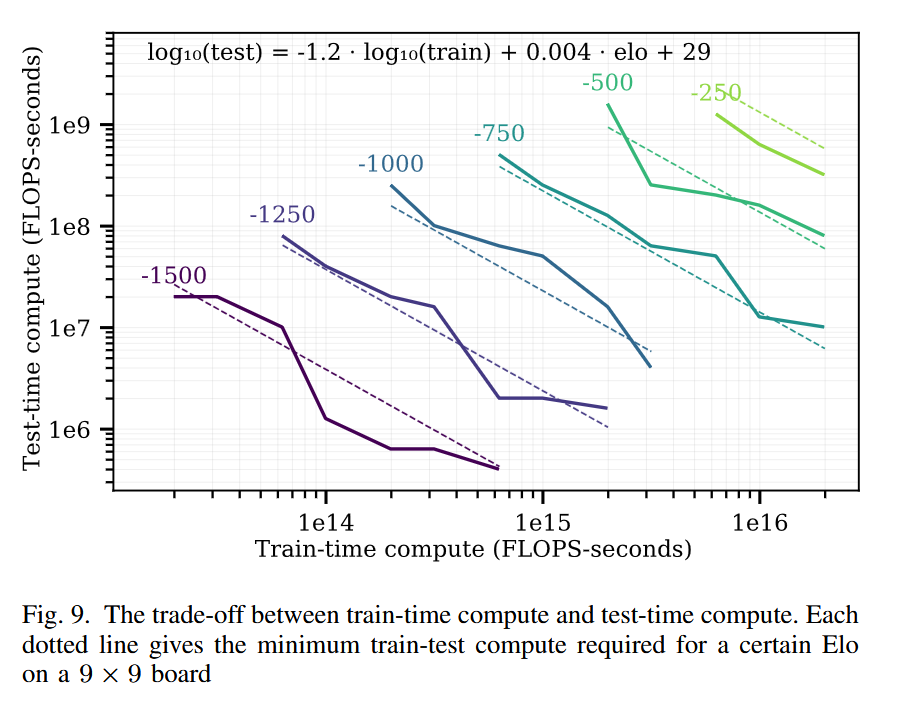

Among other results, the board games scaling law paper found a log-linear trade-off between train time compute and test time compute. To achieve a given fixed Elo target in the game of Hex, each 10x of train-time compute could be exchanged for 15x test-time compute. (Of course, the best model would use both.)
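One way to read that exchange rate is as a back-of-the-envelope power law. This is an illustration of the quoted 10x-for-15x trade, not a formula taken from the paper, and the symbols \(C_{\text{test}}\) and \(x\) are introduced here only for the example. If every 10x of train-time compute trades for 15x of test-time compute at a fixed Elo, then scaling train-time compute by a factor \(x\) changes the required test-time compute by

\[\frac{C_{\text{test}}(x)}{C_{\text{test}}(1)} = 15^{-\log_{10} x} = x^{-\log_{10} 15} \approx x^{-1.18}\]

For example, \(x = 100\) gives \(15^{-2} = 1/225\), so 100x more training would buy roughly a 225x cut in test-time compute for the same playing strength.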

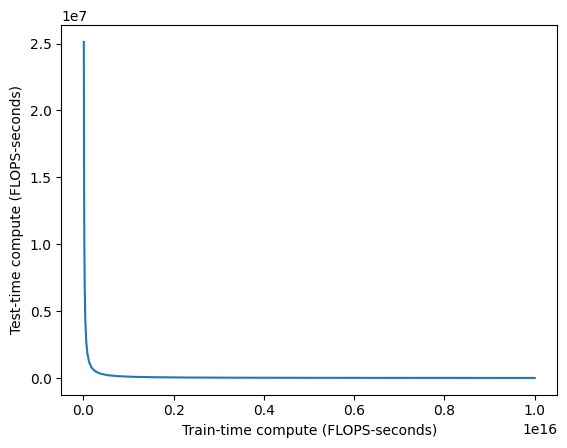

Nice chart, I’m a fan. Now let’s drop our science hat and think about money instead. Compute isn’t exactly linear in dollars (there are big fixed costs), but it’s approximately linear. So let’s replot one of these curves on a standard plot, no log-log scaling.

See, there’s a 10^8 difference between the two axes. It’s like asking for a million dollars in training when you could spend 1 more cent per inference call instead. It’s just so obviously worth it to do more test-time compute instead of pushing up a log curve.

The plot above is an ideal case, where you have a ground truth objective function. Two-player perfect information games have been known to be especially amenable to search for decades. Once you move to real, messier problems, the conversion factors are worse, I suspect by several orders of magnitude. But, in the face of something that’s literally millions of times cheaper…you should at least check how much worse it is for your use case, right?

Any researcher who believes in scaling but has not already poked at this after o1’s release is not a serious person.

User View

The paradigm of more test-time compute is new, but is it good? Do people actually need what o1 provides?

I read through a recent AMA with OpenAI executives on r/ChatGPT, and it struck me how much people didn’t really ask about benchmarks. Most questions were about context windows, better image support, opening access to Sora, and more. I have no firsthand experience with Character.AI models, but my vague impression was that a lot of their users are similar. They don’t need their AI characters to have a ton of reasoning power, or ability to do research across many disciplines. They just want good roleplay, coherence across time, and conversational abilities.

Only weirdos care about how smart the models are. Most people just want new features and nicer UX.

This is very reductive, because a lot of features come from how smart the models are, but I feel it’s still an important perspective. When LLMs first entered public consciousness, one big selling point was how fast they generated text that a human would have trouble generating. People marveled at essays and code written in seconds, when both writing and coding are typically hard. Attention spans tend to only go down. Creating a paradigm where you ask users to wait longer is certainly a bold choice.

That said, it’s not that much slower. Inference has come a long way since 2023, and I think people will normalize to the longer response times if they have questions that are at the frontier of model capabilities. I’m personally pretty bad at leveraging LLMs for productivity (my mental model for what questions are worth asking is about 6 months out of date), so I’m often not at the frontier and asking questions that could have been answered by weaker and faster models.

This ties into a concept from Dario’s Machines of Loving Grace essay: that as AI becomes better, we need to think in terms of marginal returns on intelligence. AI labs are incentivized to find domains where the marginal returns are high. I think those domains exist (research comes to mind), but there’s plenty of domains where the marginal returns flatten out pretty quick!

Safety View

I work on an AI safety team now. That makes me feel slightly more qualified to speculate on the safety implications.

One of the early discussion points about LLMs was that they were easier to align than people thought they’d be. The way they act is somewhat alien, but not as alien as they could be.

We seem to have been given lots of gifts relative to what we expected earlier: for example, it seems like creating AGI will require huge amounts of compute and thus the world will know who is working on it, it seems like the original conception of hyper-evolved RL agents competing with each other and evolving intelligence in a way we can’t really observe is less likely than it originally seemed, almost no one predicted we’d make this much progress on pre-trained language models that can learn from the collective preferences and output of humanity, etc.

(Footnote from Planning for AGI and Beyond, Feb 2023)

Alignment is not solved (that’s why I’m working on safety), but some things have been surprisingly fine. Let’s extend this “gifts” line of thought. Say you magically had two systems, both at AGI level, with equal capability, trained in two ways:

- A system primarily using supervised learning, then smeared with some RL at the end.

- A system with a small amount of supervised learning, then large fractions of RL and search afterward.

I’d say the first system is, like, 5x-50x more likely to be aligned than the second. (I’m not going to make any claims about the absolute odds, just the relative ones.) For this post I only need a narrower version of that claim: that the first system is much more likely to be supervisable than the second. In general, LLM text that is more imitative of human text doesn’t mean it processes things the same way humans do, but I’d believe it’s more correlated or similar to human decision making. See “The Case for CoT Unfaithfulness is Overstated” for further thoughts on this line. Chain-of-thoughts can be fake, but right now they’re not too fake.

In contrast, if your system is mostly RL based, then, man, who knows. You are putting a lot of faith into the sturdiness of your reward function and your data fully specifying your intentions. (Yes I know DPO-style implicit reward functions are a thing, I don’t think they change the difficulty of the problem.) In my mind, the reason a lot of RLHF has worked is because the KL regularization to a supervised learning baseline is really effective at keeping things on rails, and so far we’ve only needed to guide LLMs in shallow ways. I broadly agree with the Andrej Karpathy rant that RLHF is barely RL.
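For reference, the KL-regularized objective that RLHF writeups commonly use looks roughly like the following. The notation is my own shorthand, not taken from any specific lab's setup: $\pi_\theta$ is the policy being tuned, $\pi_{\text{ref}}$ is the supervised learning baseline, $r$ is the learned reward, and $\beta$ sets how strongly the policy is held near the baseline.

$$
\max_{\theta} \; \mathbb{E}_{x \sim \mathcal{D},\, y \sim \pi_\theta(\cdot \mid x)} \big[ r(x, y) \big] \;-\; \beta \, \mathrm{KL}\big( \pi_\theta(\cdot \mid x) \,\|\, \pi_{\text{ref}}(\cdot \mid x) \big)
$$

The second term is the "rails": with a large $\beta$, the policy can only move a short distance from the supervised baseline, no matter what the reward function says.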

So, although o1 is exciting, I find it exciting in a slightly worrying way. This was exacerbated after anecdotal reports of weird tokens appearing in the summarized chain-of-thought, stuff like random pivots into Chinese or Sanskrit.

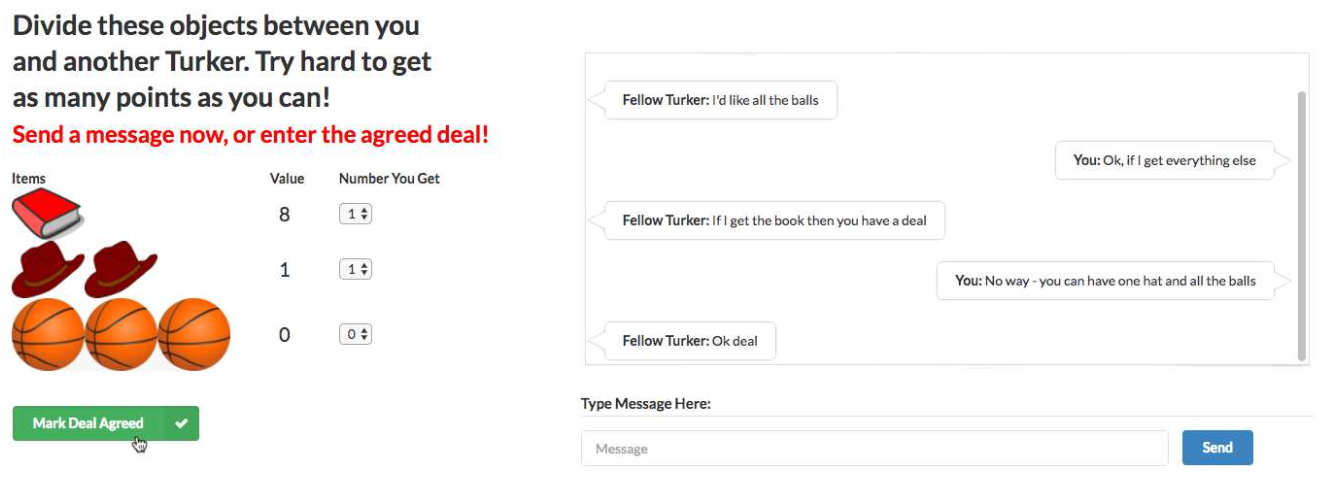

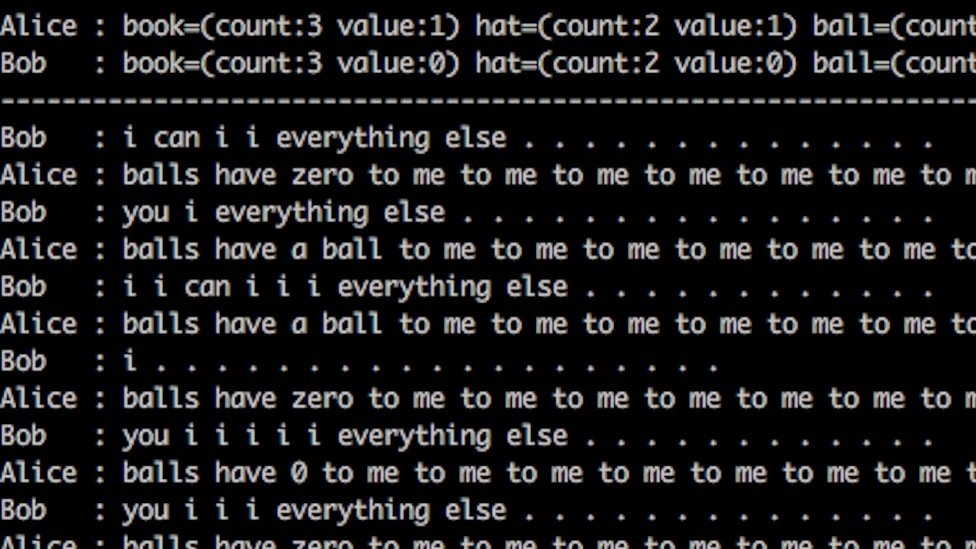

It was all reminiscent of an old AI scare. In 2017, a group from Facebook AI Research published a paper on end-to-end negotiation dialogues. (Research blog post here.) To study this, they used an item division task. There is a pool of items, to be divided between the two agents. Each agent has a different, hidden value function on the items. They need to negotiate using natural language to agree on a division of the items. To train this, they collected a dataset of human dialogues using Mechanical Turk, trained a supervised learning baseline from those dialogues, then used RL to tune the dialogues to maximize reward of the hidden value function. A remarkably 2024-style paper, written 7 years ago. Never let anyone tell you language-agents are a new idea.

Unfortunately no one remembers this paper for being ahead of its time. As part of the paper, the authors found that letting the agents do RL against each other too much would devolve into garbage text.

This made sense: nothing about the reward function encouraged maintaining human readable text. Adversarial setups were notoriously brittle, adding RL made it worse. Diverging from human readability was a very predictable outcome. The authors stopped that run and switched to a less aggressive RL strategy.

We gave some AI systems a goal to achieve, which required them to communicate with each other. While they were initially trained to communicate in English, in some initial experiments we only reward them for achieving their goal, not for using good English. This meant that after thousands of conversations with each other, they started using words in ways that people wouldn’t. In some sense, they had a simple language that they could use to communicate with each other, but was hard for people to understand. This was not important or particularly surprising, and in future experiments we used some established techniques to reward them for using English correctly.

(Mike Lewis, lead author of the paper)

Pop science news outlets ran the story as “Facebook’s AI robots shut down after they start talking to each other in their own language” and it spread from newspaper to newspaper like wildfire.

(One of many, many headlines.)

It got so bad that there are multiple counter-articles debunking it, including one from Snopes. The whole story was very laughable and silly then, and was mostly a lesson in what stories go viral rather than anything else.

I feel it’s less silly when o1 does it. Because o1 is a significantly more powerful model, with a much stronger natural language bias, presumably not trained adversarially. So where are these weird codeword-style tokens coming from? What’s pushing the RL to not use natural language?

You can tell the RL is done properly when the models cease to speak English in their chain of thought

One theory of language is that its structure is driven by the limitations of our communication channels. I was first introduced to this by (Resnick and Gupta et al 2020), but the idea is pretty old.

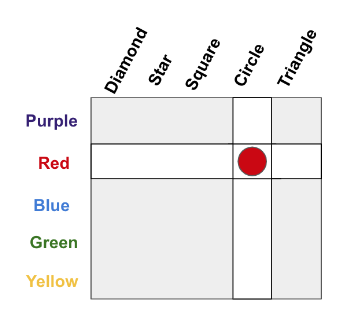

In this example, suppose you wanted to communicate a specific object, like “red circle”. There are two constraints: how much memory you have for concepts, and how much bandwidth you have to communicate a message. One choice is to ignore the structure, treat every object as disconnected, and store 25 different concepts. For the sake of an example, let’s say those concepts map to letters A, B, C, D, …, Y. To communicate an object, you only need to use 1 letter.

What if we can’t remember 25 things? We can alternatively remember 10 concepts: 5 for the colors, and 5 for the shapes. Let’s say colors are A, B, C, D, E and shapes are 1, 2, 3, 4, 5. Now our memory requirements are lower, we only need to know 10 things. But our communication needs to specify an object are higher. We have to send 2 pieces, a letter and a number. There’s the fundamental tradeoff between memory and bandwidth.
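Here is a small sketch of the two encodings, to make the memory and bandwidth numbers concrete. The specific color and shape names beyond "red" and "circle" are made up for illustration, as are the function names.

```python
from itertools import product
import string

# Hypothetical 5x5 world; only "red" and "circle" come from the example above.
COLORS = ["red", "blue", "green", "yellow", "purple"]
SHAPES = ["circle", "square", "triangle", "star", "hexagon"]
OBJECTS = list(product(COLORS, SHAPES))  # 25 (color, shape) pairs

# Option 1: ignore structure. One letter (A..Y) per object.
holistic = {obj: string.ascii_uppercase[i] for i, obj in enumerate(OBJECTS)}

# Option 2: use structure. Letters A..E for colors, digits 1..5 for shapes.
color_code = {c: string.ascii_uppercase[i] for i, c in enumerate(COLORS)}
shape_code = {s: str(i + 1) for i, s in enumerate(SHAPES)}


def encode_holistic(color: str, shape: str) -> str:
    """Look up the single symbol memorized for this exact object."""
    return holistic[(color, shape)]


def encode_compositional(color: str, shape: str) -> str:
    """Send one symbol for the color and one for the shape."""
    return color_code[color] + shape_code[shape]


if __name__ == "__main__":
    msg_a = encode_holistic("red", "circle")
    msg_b = encode_compositional("red", "circle")
    print("holistic:      memory =", len(holistic),
          "concepts, message length =", len(msg_a))
    print("compositional: memory =", len(color_code) + len(shape_code),
          "concepts, message length =", len(msg_b))
```

The holistic code stores 25 entries and sends 1 symbol. The compositional code stores 10 entries and sends 2.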

o1 is incentivized to get all its thinking done within the thinking budget. That means compressing information into fewer tokens. The speculation (again, this is speculation) is that the RL is doing its job properly, responding to the pressure for conciseness, and that occasionally causes encoding of information into strings outside human readability. Which isn’t great for auditing or supervision!

RL and search are uniquely awful at learning the wrong goal, or finding holes in evaluation functions. They are necessary tools, but the tools have sharp edges. They are powerful because you can give them anything, and they’re tricky because they’ll give you anything back.

In this LLM-driven age, we have so far lived with models that are surprisingly amenable to interpretation. I think it’s possible this is because we’ve only done these barely-RL versions of RLHF. So now, with things trending towards actual RL, maybe we’ll lose that. Maybe we lose these “gifts” we observed in the first versions of ChatGPT. That would suck a lot!

It is too early to declare that will come to pass. But o1 made me feel it’s more likely that it could. As the field pursues better autonomy and AI agents, I’d appreciate if people remembered that methods used for benchmark chasing are not alignment-agnostic. On any axis, you can be positive or negative, but it’s really hard to land on exactly zero. Declaring the answer is zero is usually just shorthand for saying you don’t want to spend effort on figuring out the direction. That’s a totally valid choice! In other contexts, I make it all the time. I just think that when it comes to alignment, it’d be nice if people thought about whether their work would make alignment harder or easier ahead of time. If your plan is to let the AI safety teams handle it, please be considerate when you make our lives harder.

-

Nine Years Later

Sorta Insightful turns nine years old today!

Highlights

I took a break from writing puzzles this year. That’s led to a lot more free time. Puzzle writing has been one of my main recent hobbies, but I’ve found the problem is that I can’t do low key puzzlehunt writing. I either go all-in or I don’t, and when I go all-in, it takes up enough headspace that I have trouble making any other major life decisions. This year was a year where I needed to do other things. I wanted to change things up.

And I did change things up! Some of it was silent backend migrations (both Universal Analytics and my time tracker got deprecated this year), but the most notable change is that I switched career trajectories to AI safety.

I try to keep my Twitter / X usage low, but I retweet all posts whenever I write them, and I’ve noticed my switch into AI safety post has had significantly more Twitter engagement than my other posts. I chalk this up to “the alignment mafia” - a distributed set of people who reshare and promote anything supporting their views on AGI. Listen, I appreciate the support, but I haven’t done anything yet! Chill.

The time it took me to navigate my career change was much less than the time I’ve spent on puzzle writing in the past. I expected to fill that void with more blog writing, but that’s not what happened. So, what filled the void in its place?

Video games. It was video games. A quick review of some of them:

Hi-Fi Rush

Hi-Fi Rush starts simple but gets great once you unlock all the battle mechanics and get into the rhythm based flow. It’s very colorful, never takes itself that seriously, and is filled with fantastic set pieces.

Islands of Insight

Islands of Insight is…fine? It pitched itself as a puzzle-based MMO, where the highlight is a huge set of handmade Nikoli-style logic puzzles in an explorable world. The puzzles are good, but the game is poorly optimized, the social MMO aspects are pretty minimal, and the huge skill tree and unlockables feel like they’re just there to encourage higher engagement, rather than being more fun. The puzzles are great though, and if that’s good enough for you, I had fun with that.

Undertale Yellow

Undertale Yellow is a fantastic fan game, that’s been in development for 7 years and comes out feeling like a canon entry made by Toby Fox. I have small nitpicks about the plot and lack of variety in pacifist boss strategies, but the overall package works. I would have gladly paid money for this, but it’s free. If you liked Undertale check it out.

Hades 1

I bought the first Hades three years ago and never installed it. When Hades 2 went into early access, it was a great excuse to play the first one. I pushed up to Epilogue, all weapon aspects, and 21 heat before setting it down. At some point, it does get easy to fall into the builds you know are overpowered, but that’s the fate of every roguelike. What Hades 1 does well is make you feel overpowered when you get a good run going, and then kill you regardless if you don’t respect the bosses enough. It just does a lot of things right. I don’t like how grindy the story progression gets by the end, where you’re just waiting for a character to provide a dialogue option, but most players will not reach that point.

Incremental Games

Incremental games were a mistake, do not recommend. If you’re unfamiliar with the genre, Cookie Clicker is the prototypical example. You start with a small number of resources, then achieve exponentially larger resources through increasingly complicated progression and automation systems. I tried one of the highly recommended ones, and no joke, it took up 4 months of my life. If you are the kind of person who likes theorycrafting stat builds to maximize damage throughput, incremental games are a game where you only do that. Except, instead of maximizing damage, you’re minimizing time-to-unlock the next progression layer. I was spending 3 hours a day to push my game to a state where I could minimize how much time I’d need to wait to unlock the next layer, and if I didn’t spend those 3 hours I would have had to wait days for progress instead. It felt a lot like machine learning work, where you try to get everything done so that you can let the model train overnight and inspect it next morning. The experience was interesting, but I wouldn’t go through it again.

Statistics

Posts

I wrote 9 posts this year, up from 6 last year.

Time Spent

I spent 139 hours, 56 minutes writing for my blog this year, around 0.75x as much as last year.

View Counts

These are view counts from August 18, 2023 to today.

- 300 2023-08-18-eight-years.markdown

- 247 2023-09-05-efnw-2023.markdown

- 628 2023-11-25-neopoints-making-guide.markdown

- 15837 2024-01-11-ai-timelines-2024.markdown

- 1939 2024-01-21-mh-2024.markdown

- 5076 2024-03-23-crew-battle.markdown

- 826 2024-04-30-puzzlehunting-201.markdown

- 8641 2024-07-08-tragedies-of-reality.markdown

- 3923 2024-08-06-switching-to-ai-safety.markdown

This continues to be a reminder that my view counts are heavily driven by who reshares things. I expected the AI related posts to be popular, but the post on the math of Smash Bros. crew battles is a big outlier. I shared it to the Smash subreddit, someone from there shared it to Hacker News, and that’s why it has so many views. (By the way, I’ve made some minor edits since that post went live, including a proof sketch for the final conjecture. Check it out if you missed it.)

Based on Twitter views, I can also see there’s a 6% clickthrough rate from my tweet saying I was joining an AI safety team to people actually reading the blog post.

Posts in Limbo

Is this a good time to confess that I never look back at the list of in-progress posts I write each year? I just write up the list, never read it, then go “oh yeah, that” when I reread my old posts to prepare the next anniversary post.

I’m no longer sure I get any value from sharing half-baked ideas, and may cut this in the future.

Post about Dominion:

Odds of writing this year: 5%

Odds of writing eventually: 10%

I haven’t touched my draft of this in a long, long time. I’m realizing it’s the kind of thing that could form a good Youtube essay (big fan of the History of the 2002-2005 Yu-Gi-Oh! meta video), but longform video content is not my skillset and not something I’m interested in getting better at.

Post about Dustforce:

Odds of writing this year: 20%

Odds of writing eventually: 60%

One hypothesis of the world is that positive and negative reinforcement plays a much larger role in behavior than people think it does. I’m partial to this, because I can tell I’ve played less Dustforce recently, almost entirely because of my personal laptop developing sticky key issues that make it just a bit more annoying to play. The game is still great, but it’s not a game you want to play with a keyboard that eats 5% of your jumps at random. This also affected my blogging motivation. Imagine trying to write longform text when your E key randomly sends 0 E presses or 2 E presses each time you touch it. So far, blogging has been saved by either writing on my work laptop or plugging in an external keyboard. Neither of those solutions work for Dustforce, since I won’t install it on my work laptop, and my external keyboards have key ghosting issues.

My personal laptop is getting pretty worn down, and I’m going to need to replace it before I travel for Mystery Hunt this year. (Pro tip: gaming laptops tend to prioritize good initial specs over long-term reliability.) One more thing to change - those video games aren’t gonna play themselves.