Prioritized Contradiction Resolution

I know I said my next post was going to be my position on the existential risk of AI, but I love metaposts too much. I’m going to explain why I’m writing that post, through the lens of how I view self-consistency. Said lens may be obvious to the kinds of people who read my posts, but oh well.

At a party several months ago, Alice, Bob, and I were talking about effective altruism. (Fake names, for privacy.) Alice self-identified as part of the movement, and Bob didn’t. At one point, Bob said he approved of EA, and agreed with its arguments, but didn’t actually want to donate. From his viewpoint, diminishing marginal utility of money meant the logical conclusion was to send all disposable income to Africa. He wasn’t okay with that.

Alice replied like so.

> I agree that’s the conclusion, but that doesn’t mean you have to follow it. The advice I’d give is to give up on achieving perfect self-consistency. For EA in particular, you can try to live up to that conclusion by donating some money, which is better than none, and then by donating to effective charities, which is a lot better than donating to ineffective ones. That being said, you should only be donating if it’s something you really want to do. It’s more sustainable that way.

If you’re like me, you want to have consistent beliefs that lead to consistent actions.

If you’re like me, you don’t have consistent beliefs, and you don’t execute consistent actions.

Instead you have a trainwreck of a brain that manages to squeak by every day.

Let me vent for a bit. Brains are pretty awesome. It’s pretty amazing what we can do, if you stop to think about it. Unfortunately, in many ways our brains are also complete garbage. Our intuitive understanding of probability is good at making quick decisions, but awful at solving simple probability questions. We double-check information that challenges our ideas, but don’t double-check information that confirms them. And if someone else does try to challenge our worldview, we may double down on our beliefs instead of moving away from them, flying in the face of any reasonable model of intelligence.

What’s worse is when you get into stuff like Dunning-Kruger, where you aren’t even aware your brain is doing the wrong thing. There’s no way I actually remember what Alice and Bob said at that party several months ago. Sure, that conversation was probably about EA, but it’s pretty likely I’m misremembering it in a way that validates one of my worldviews.

As a kid, I once realized I knew how to add small numbers, but had no memory of ever learning addition. Having an existential crisis at the age of seven is a fun experience, I’ll say that much.

Recently, someone I know pointed out that schizophrenics often don’t know they’re schizophrenic, and there was a small chance I was schizophrenic right now. The most surprising thing was that I wasn’t surprised.

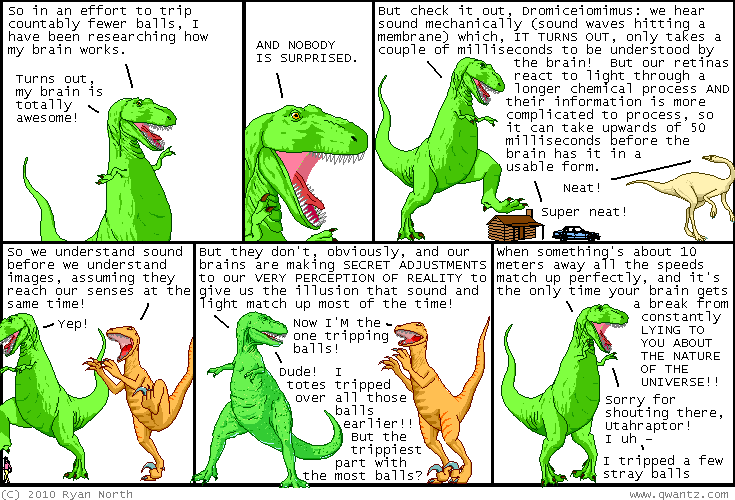

Somehow, I learned to never trust my ability to know the truth. I then internalized that belief into a deep-seated intuition that affects how I view cognition and perception. So, when I read a Dinosaur Comics strip like this one…

…I don’t trip that many balls, because they’ve mostly been tripped out.

That went off on a bit of a tangent. The point is, we’re lying to ourselves on a subconscious level. We all are. Discovering we’re lying to ourselves on a conscious level shouldn’t be much worse.

***

Let’s all take a moment to quietly mourn self-consistency, who died prematurely and never had a chance.

Then, let’s grab shovels and dig it back up, because it turns out self-consistency is partially achievable after all.

Perfect self-consistency is never going to happen. It’s like a hydra. Chop down one contradiction and two grow in its place. Using an imperfect reasoning engine to reason about the world is a tragic comedy in itself. At the same time, it’s worth trying to be self-consistent, and our brains are the best tools we’re going to get.

The trick to achieving self-consistency is to find a nice, quiet place, and sit down to think. You let the thoughts come, sometimes consciously, sometimes subconsciously, and wait until you understand the implicit thoughts well enough to say them out loud. You refine them over time, until you gain a better understanding of yourself, failings and all.

There are a few ways you can do this. Writing is one of them. When you write, you’re forced to try words, again and again, until you hit upon words with perfect weight. Lather, rinse, repeat, until you understand why you act the way you do.

The only problem is that this process can take forever, especially on complicated topics. When I try to do this, my brain has an annoying habit of coming to a different conclusion than I expect it to.

I was never any good at writing essays in high school. Sometimes, I think it’s because I was never good at bullshitting a textual analysis I didn’t believe. I’d write to the end of the essay, realize I was arguing a completely different point from my original thesis, and feel obligated to rewrite the start of my essay. That then led to a rewrite of the middle, and the end, by which point I’d sometimes diverged yet again…

Because I’m not made of time, and contradictions are never-ending, I can only resolve so many of them if I want to do other things, like watch YouTube videos and play video games. Thus, after a long, circuitous story, we’ve arrived at what I call prioritized contradiction resolution. Here’s a summary of my reasoning.

- I have contradictory thoughts. That’s fine.

- I have finite time and motivation. That kinda sucks, but okay.

- It costs time and motivation to resolve contradictions, and those are my limiting factors.

- Therefore, I should focus on fixing the contradictions most important to me.

It makes sense for me to think deeply about the AI safety debate. I do research in machine learning, so people are going to expect me to have a well-thought-out opinion on the topic. (I lost track of how many times people asked for my opinion at EA Global after hearing I worked in ML, but I think it was at least ten.)

In contrast, although it’s been part of my life for over five years, I don’t need to have well-thought-out reasons to like My Little Pony. I’d like to take the time to tease apart why I like the fandom so much, but the value I’d gain from doing so doesn’t seem as large. Thus, resolving that is further down on my internal to-do pile.

So here we are. Looking back, I could have just started here, and skipped all that nonsense with Alice and Bob and rants about cognitive biases.

But somehow, I find myself a lot more at peace for having taken the time to resolve any lingering contradictions I have about the way I approach resolving contradictions. When I promise you a metapost, you’re damn well getting a metapost.

Enough navel-gazing. Next post is about AI. I swear. Otherwise, I’m donating $50 to the Against Malaria Foundation.