
MIT Mystery Hunt 2019

Here is a lesson that should be obvious: if you are trying to hit a paper deadline, going to Mystery Hunt the weekend before that deadline is a bad idea. In my defense, I did not think we were submitting to ICML, but some last-minute results convinced us it was worth trying.

Combined with the huge airport troubles getting out of Boston, I ended up landing in California at 11 AM the day before the deadline, with the horrible combination of a ruined sleep schedule and a lack of sleep in the first place. But, now I’ve recovered, and finally have time to talk about Hunt.

I hunted with teammate this year. It’s an offshoot of ✈✈✈ Galactic Trendsetters ✈✈✈, leaning towards the CMU and Berkeley contingents of the team. Like Galactic, the team is pretty young, mostly made of people in their 20s. When deciding between Galactic and teammate, I chose teammate because I expected it to be smaller and more serious. We ended up similar in size to Galactic. No idea where all the people came from.

Overall feelings on Hunt can be summed up as, “Those puzzles were great, but I wish we’d finished.” Historically, if multiple teams finish Mystery Hunt, Galactic is one of those teams, and since teammate was of similar size and quality, I expected teammate to finish as well. However, since Hunt was harder this year, only one team got to the final runaround. I was a bit disappointed, but oh well, that’s just how it goes.

I did get the feeling that a lot of puzzles had more grunt work than last time Setec ran a Hunt, but I haven’t checked this. This is likely colored by hearing stories about First You Visit Burkina Faso, and spending an evening staring at Google Maps for Caressing and carefully cutting out dolls for American Icons. (I heard that when we finally solved First You Visit Burkina Faso, the person from AD HOC asked “Did you like your trip to Burkina Faso?”, and we replied “Fuck no!”)

What I think actually happened was that the puzzles were less backsolvable and the width of puzzle unlocks was smaller. Each puzzle unlocked a puzzle or a town, and each town started with 2 puzzles, so the width only increased when a new town was discovered. I liked this, but it meant we couldn’t skip puzzles that looked time-consuming; they had to be done, especially if we didn’t know how the meta structure worked.

For what it’s worth, I think it’s good to have some puzzles with lots of IDing and data entry, because these form footholds that let everybody contribute to a puzzle. It just becomes overwhelming if there’s too much of it, so you have to be careful.

Before Hunt

Starting from here, there are more substantial puzzle spoilers.

A few weeks before Hunt, someone had found Alex Rosenthal’s TED talk about Mystery Hunt.

We knew Alex Rosenthal was on Setec. We knew Setec had embedded puzzle data in a TED talk before. So when we got INNOVATED out of the TED talk, it quickly became a teammate team meme that we should submit INNOVATED right as Hunt opened, and that since “NOV” was a substring of INNOVATED, we should be on the lookout for a month-based meta where answers had other month abbreviations. Imagine our surprise when we learned the hunt was Holiday themed - month meta confirmed!

The day before Hunt, I played several rounds of Two Rooms and a Boom with people from Galactic. It’s not puzzle related (at least, not yet), but the game’s cool enough that I’ll briefly explain. It’s a social deduction game. One person is the President, another person is the Bomber, and (almost) everyone’s win condition is getting the President and Bomber in the same or different rooms by the end of the game.

Now, here’s the gimmick: by same room, I mean the literal same room. People are randomly divided across two rooms, and periodically, each room decides who to swap with the other room. People initially have secret roles, but there are ways to share your roles with other players, leading to a game of figuring out who to trust, who you can’t trust, and concealing who you do trust from people you don’t, as well as deciding how you want to ferry information across the two rooms. It’s neat.

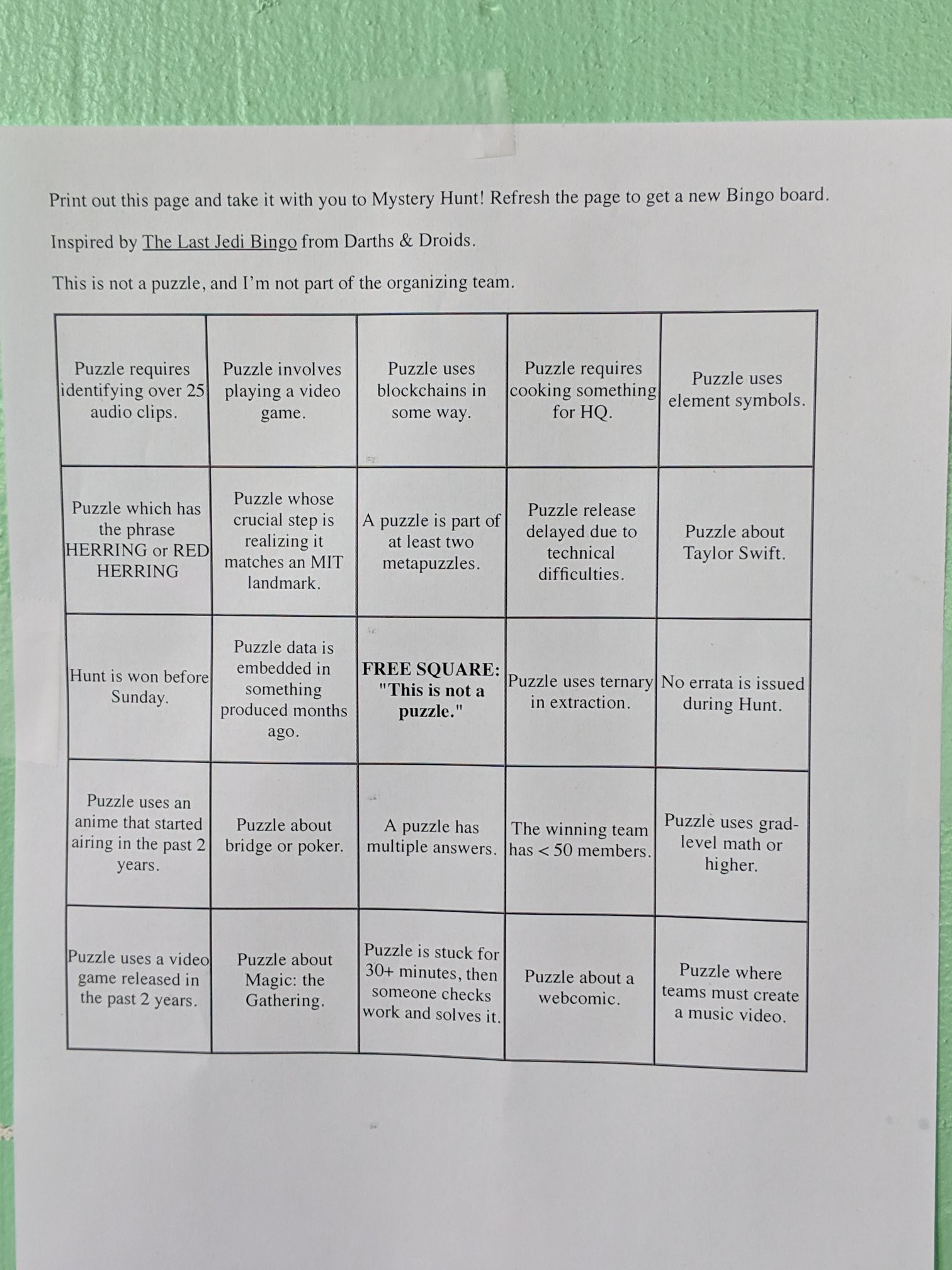

Right before kickoff, I learned about the Mystery Hunt betting pool one of my friends was running, thought it was fun, and chipped in, betting on Palindrome. While waiting for puzzles to officially release, we printed our official team Bingo board.

Puzzles

Every Hunt, I tend to look mostly at metapuzzles, switching to regular puzzles when I get stuck on the metas. This Hunt was no different. It’s not the worst strategy, but I think I look at metas a bit too much, and make bad calls on whether I should solve regular puzzles instead.

I started Hunt by working on GIF of the Magi and Tough Enough, which were both solid puzzles. After both of those were done, we had enough answers to start looking at Christmas-Halloween. We got the decimal-octal joke pretty quickly, and the puzzle was easy to fill in with incomplete info, and it then gave massive backsolving fodder. Based on the answers shared during wrap-up, several other teams had a similar experience.
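For anyone who hasn't run into it, the decimal-octal joke is the old programmer riddle that Oct 31 equals Dec 25, reading "Oct" as octal and "Dec" as decimal. Here is a quick check in Python. This only illustrates the joke itself, not how the meta actually used it.

```python
# "Oct 31 = Dec 25": 31 read in base 8 is the same number as 25 read in base 10.
halloween = int("31", 8)   # parse "31" as octal
christmas = int("25", 10)  # parse "25" as decimal

print(halloween == christmas)
print(oct(25))  # Python's octal notation for decimal 25
```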

Backsolving philosophy is different across teams, and teammate borrows a culture from Galactic - backsolve as much as you want, as long as you wait for your answer to be confirmed wrong before trying it for the next puzzle, and try to have only 1 pending answer per puzzle. This makes our solve accuracy relatively terrible since our frequent backsolve attempts drag down the average. For example, we guessed several random words for Moral Ambiguity, because we knew it thematically and mechanically had to be the Holi Day prank answer.

We got feedback to backsolve less aggressively and toned down our backsolve strategy by a lot. In fact, we completely forgot to backsolve Making a Difference. This was especially embarrassing because we knew the clue phrase started with “KING STORY ADAPTED AS A FILM”, and yet we completely forgot that “RITA HAYWORTH AND THE SHAWSHANK REDEMPTION” was still unclaimed.

I did not work on Haunted but I know people had a good laugh when the cluephrase literally told them “NOT INNOVATED, SORT FIRST”. teammate uses Discord to coordinate things. Accordingly, our `#innovated-shitposting` channel was renamed to `#ambiguous-shitposting`.

I did not work on Common Flavors, and in fact, we backsolved that puzzle because we didn’t figure out they were Celestial Seasonings. At some point, the people working on Common Flavors tried brewing one of the teas, tried it, and thought it tasted terrible. It couldn’t possibly be a real tea blend!

Oops.

Eventually they cut open the tea bags and tried to identify ingredients by phonelight.

We See Thee Rise was a fun puzzle. The realization of “oh my God, we’re making the Canadian flag!” was great. Even if our maple leaf doesn’t look that glorious in Google Sheets…

I did not work on The Turducken Konundrum, but based on solve stats, we got the fastest solve. I heard that we solved it with zero backtracking, which was very impressive.

For Your Wish is My Command, our first question was asking HQ if we needed the game ROMs of the games pictured, which would have been illegal. We were told to “not do illegal things.” I did…well, basically nothing, because by the time I finished downloading an NES emulator, everyone else had IDed the Game Genie codes, and someone else had loaded the ROM on an NES emulator they had installed before Hunt. That emulator had a view for what address was modified to what value, and we solved it quickly from there.

I want to call out the printer trickery done for 7 Little Dropquotes. The original puzzle uses color to mark what rows letters came from, but if you print it, the colors are replaced with Roman numerals, letting you print the page with a black-and-white printer. Our solve was pretty smooth: we printed out all the dropquotes and solved them in parallel. For future reference, Nutrimatic trivializes dropquotes, because the longer you make the word, the more likely it is that only one word fits the regex of valid letters. This means you can use Nutrimatic on all the long clues humans find hard, and use humans on all the short clues Nutrimatic finds hard. When solving this puzzle, I learned I am really bad at solving dropquotes, but really good at typing regexes into Nutrimatic and telling people “that long word is INTELLECTUAL”.
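To make the regex trick concrete, here is a minimal sketch of the idea in plain Python rather than Nutrimatic itself. Each position in a word gets a character class holding the letters available in that column. The column letters and the word list are made up for illustration, and the sketch ignores the fact that a real dropquote uses each letter only once.

```python
import re

def column_regex(columns):
    """Build a regex with one character class per column of candidate letters."""
    return "".join("[" + "".join(sorted(set(col))) + "]" for col in columns)

# Hypothetical column letters for a 5-letter word (not from the actual puzzle).
columns = ["qts", "uae", "ilo", "lns", "tey"]

# Tiny stand-in word list; Nutrimatic searches a far larger corpus.
words = ["quilt", "quiet", "tails", "tests", "salty"]

pattern = re.compile(column_regex(columns))
matches = [w for w in words if pattern.fullmatch(w)]

print(column_regex(columns))
print(matches)
```

Every extra column adds another constraint, which is why long words tend to have exactly one match while short words leave several candidates.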

Be Mine was an absurd puzzle. I didn’t have to do any of the element IDing, which was nice. The break-in was realizing that we should fill in “night” as “nite” and find minerals, at which point it became a silly game of “Is plumbopalladinite a mineral? It is!” and “Wait, cupromakopavonite is a thing?” I had a lot of fun finding mineral names and less fun extracting the puzzle answer. I was trying to find the chemical compounds word-search style. The person I was solving with was trying to find overlaps between a mineral name and the elements in the grid. I didn’t like this theory because some minerals didn’t extract any overlaps at all, but the word search wasn’t going great. It became clear that I was wrong and they were right.

If you like puzzlehunt encodings, The Bill is the puzzle for you. It didn’t feel very thematic, but it’s a very dense pile of extractions if you’re into that.

Okay, there’s a story behind State Machine. At some point, we realized that it was cluing the connectivity graph of the continental United States. I worked on implementing the state machine in code while other people worked on IDing the states. Once that was IDed, I ran the state machine and reported the results. Three people then spent several minutes cleaning the input, each doing so in a separate copy of the spreadsheet, because they all thought the other people were doing it wrong or too slowly. This led to the answer KATE BAR THE DOOR, which was…wrong. After lots of confusion, we figured out that the clean data we converged on had assigned 0 to New Hampshire instead of 10. They had taken the final digit for every state and filtered out all the zeros, forgetting that indices could be bigger than 9. This was hilarious at 2 AM and I broke down laughing, but now it just seems stupid.

For Middle School of Mines, I didn’t work on the puzzle, but I made the drive-by observation that we were drawing a giant 0 in the mines discovered so far, in a rather literal case of “missing the forest of the threes”.

I had a lot of fun with Deeply Confused. Despite literally doing deep learning in my day job, I was embarrassed to learn that I didn’t have an on-hand way to call Inception-v3 from my laptop. We ended up using a web API anyways, because Keras was giving us the wrong results. Looking at the solution, we forgot to normalize the image array, which explains why we were getting the wrong adversarial class.
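For reference, this is roughly the step we skipped, as a dependency-free sketch. I'm assuming the model wants pixel values scaled from [0, 255] to [-1, 1], which is the convention Keras's Inception-v3 preprocessing uses. The function name is my own, and the puzzle's solution may have normalized differently.

```python
def scale_to_unit_range(pixels):
    """Map 8-bit pixel values in [0, 255] to floats in [-1.0, 1.0].

    Assumption: the network was trained on inputs in [-1, 1]. Feeding it raw
    0..255 values puts the input far outside the range it expects.
    """
    return [[p / 127.5 - 1.0 for p in row] for row in pixels]

# A made-up 2x2 grayscale patch.
raw = [[0, 255], [51, 204]]
scaled = scale_to_unit_range(raw)
print(scaled)
```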

Chris Chros was a fun puzzle. I never realized so many people with the name “Chris” were in Infinity War.

He’s Out!! was a puzzle that went from “huh” to “wow, neat!” when we figured out that a punchout in baseball means a strikeout. I’ve never played Punch-Out!!, but I recognized it from speedruns. A good intro is the blindfolded run by Sinister1 at AGDQ 2014.

Tree Ring Circus was neat. I felt very clever for extracting the ring sizes by looking up their size in the SVG source. I then felt stupid when I realized this was intentional, since it’s very important to have exact ring sizes for this puzzle.

Cubic was cute. It’s fun to have a puzzle where you go from, “ah, Cubic means cubic polynomials”, and see it go to “ohhhh, cubic means cubic graphs”. I didn’t really do anything for this but it was fun to hear it get solved.

Somewhere around Sunday 2 AM, we got caught by the time unlock and started unlocking a new puzzle every 15 minutes. I’m not sure what exactly happened, but the entire team somehow went into beast mode and kept pace with the time unlock for several hours. We ended up paying for it later that morning, when everyone crashed until noon. One of the puzzles solved in that block was Divine the Rule of Kings, which we just…did. Like, it just happened. Really weird. There were some memes about “can someone pull up the US state connectivity graph, again?”. Turns out puzzle authors really like that graph.

Of the regular puzzles I solved, I’d say my favorite was Getting Digits. We didn’t hit all the a-has at once, it was more like a staggered process. First “ON and OFF!”, then “Ohhhh it’s START”, then “ohhh it’s a phone number”. It’s a simple extraction but there’s still something cool about calling a phone number to solve a puzzle.

Metapuzzles

Despite looking at a lot of metas, I didn’t contribute to many of them. The one where I definitely helped was figuring out the turkey pardon connection for Thanksgiving-President’s Day. Otherwise, it was a bunch of observations that needed more answers to actually execute.

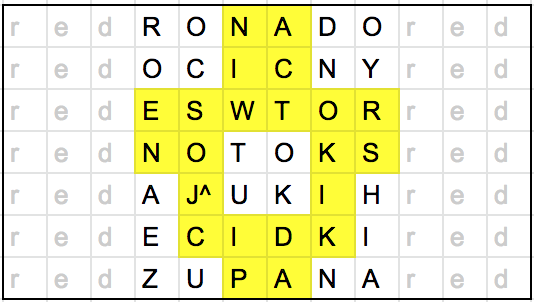

This section really exists for two reasons. The first is the Halloween-Thanksgiving meta. We were stuck for quite a while, assuming that the meta worked by overlaying three Halloween town answers with three Thanksgiving answers with food substrings, and extracting using blood types in some way. This was a total red herring, since the food names were coming from turkey names. However, according to the people who solved it, the reason they finally tried ternary on the blood types was because we had a bingo square for “Puzzle uses ternary in extraction”, and they wanted to get that square. I’m officially claiming partial credit for that metapuzzle.

The second reason is the Arbor-Pi meta. We had the core idea the whole time - do something based on digits after the Feynman point. The problem was that we horribly overcomplicated it. We decided to assign numbers to each answer, then substitute numbers based on answer length. So far, so good. We then decided that since there were two boxes, the answer had to be a two digit number, so we took everything mod 100. Then, instead of extracting the digit N places after the Feynman point, we thought we needed to find the digits “N” at some place after the Feynman point, noting how many digits we had to travel to find N. Somehow, of the 8 boxes we had, all of them gave even numbers, so it looked like something was happening. This was all wrong and eventually someone did the simple thing instead.

Post-Hunt

Since Palindrome didn’t win, I lost my Mystery Hunt bet. It turns out the betting pool only had four participants, we were all mutual friends, and we all guessed wrong. As per our agreement, all the money was donated to Mystery Hunt.

Continuing the theme of not winning things, we didn’t get a Bingo, but we got very close. At least it’s symmetric.

After Hunt ended, we talked about how we didn’t get the Magic: the Gathering square, and how it was a shame that Pi-Holi wasn’t an MTG puzzle, since it could have been about the color pie. That led to talking about other games with colored wedges, and then we got the Trivial Pursuit a-ha. At this rate, I actually might print out the phrase list to refer to for extraction purposes.

I didn’t do too much after Hunt. It was mostly spent getting food with friends, complaining about the cold, and waiting in an airport for 12 hours. It turned out a bunch of Bay Area Hunters were waiting for the same flights, so it wasn’t as bad as it could have been. We talked about Hunt with other stranded passengers, and a few people from my team got dinner at Sbarro.

It felt obligatory.

-

Quick Opinions on Go-Explore

This was originally going to be a Tweetstorm, but then I decided it would be easier to write as a blog post. These opinions are quick, but also a lot slower than my OpenAI Five opinions, since I have more time.

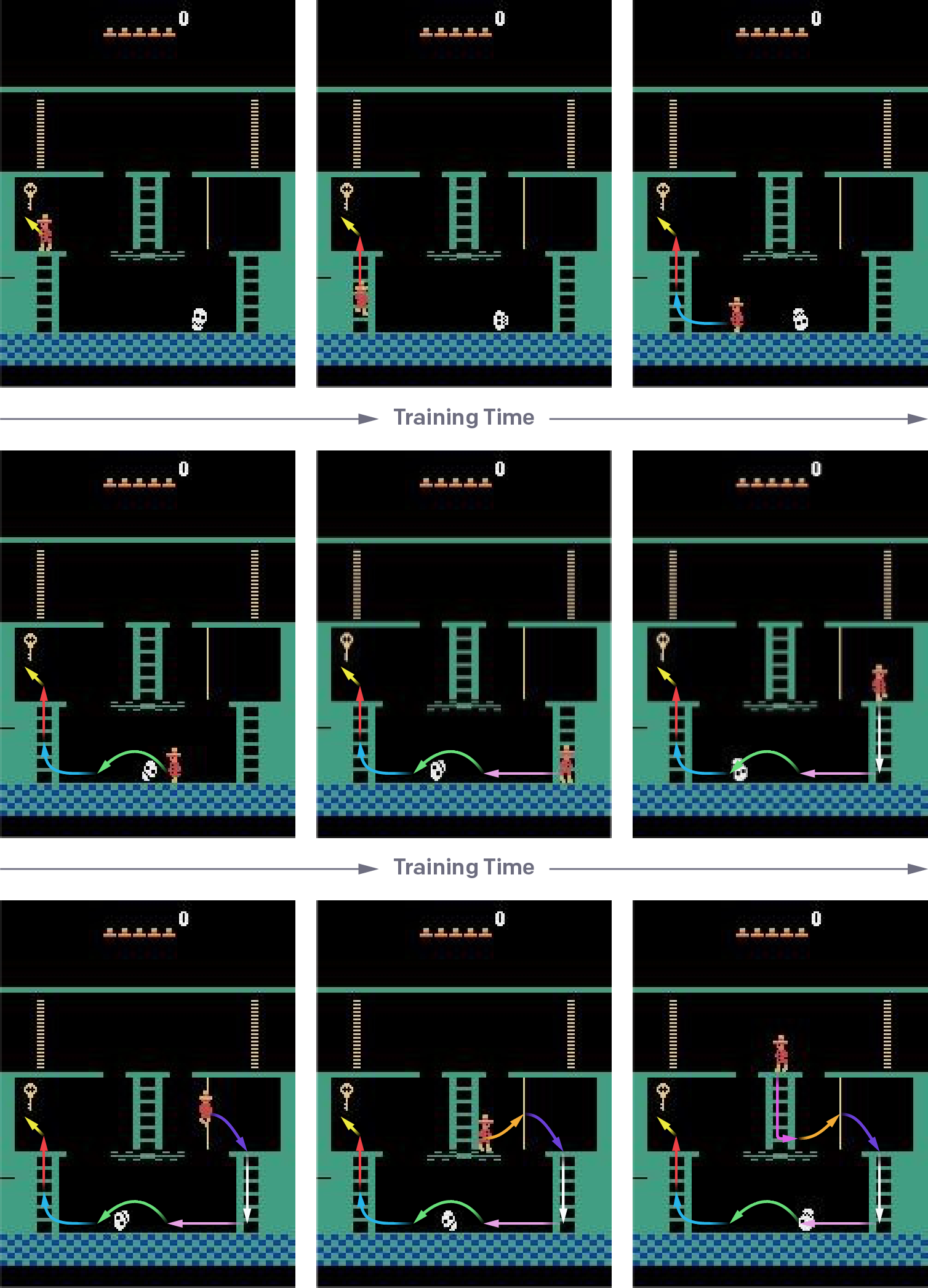

Today, Uber AI Labs announced that they had solved Montezuma’s Revenge with a new algorithm called Go-Explore. Montezuma’s Revenge is the classical hard example of difficult Atari exploration problems. They also announced results on Pitfall, a more difficult Atari exploration problem. Pitfall is a less popular benchmark, and I suspect that’s because it’s so hard to get a positive score at all.

These are eye-popping headlines, but there’s controversy around the results, and I have opinions here. For future note, I’m writing this before the official paper is released, so I’m making some guesses about the exact details.

What is the Proposed Approach?

This is a summary of the official release, which you should read for yourself.

One of the common approaches to solve exploration problems is intrinsic motivation. If you provide bonus rewards for novel states, you can encourage agents to explore more of the state space, even if they don’t receive any external reward from the environment. The detail is in defining novelty and the scale of the intrinsic reward.

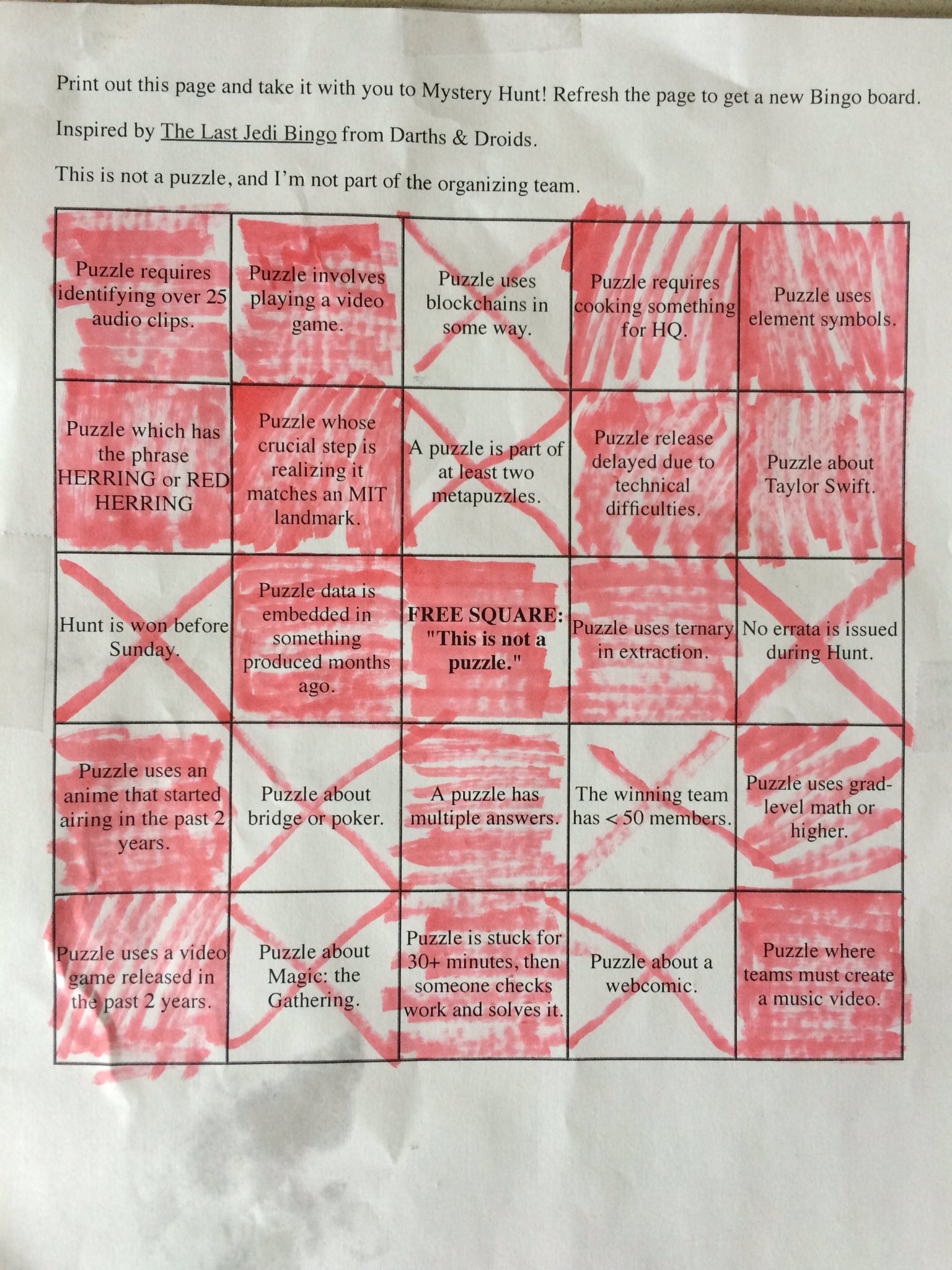

The authors theorize that one problem with these approaches is that they do a poor job at continuing to explore promising areas far away from the start state. They call this phenomenon detachment.

(Diagram from original post)

In this toy example, the agent is right between two rich sources of novelty. By chance, it only explores part of the left spiral before exploring the right spiral. This locks off half of the left spiral, because it is bottlenecked by states that are no longer novel.

The proposed solution is to maintain a memory of previously visited novel states. When learning, the agent first randomly samples a previously visited state, biased towards newer ones. It travels to that state, then explores from that state. By chance we will eventually resample a state near the boundary of visited states, and from there it is easy to discover unvisited novel states.

In principle, you could combine this paradigm with an intrinsic motivation algorithm, but the authors found it was enough to explore randomly from the previously visited state.
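Here is a minimal sketch of that select, return, explore loop on a toy problem, written from the description above rather than from any released code. The `ChainEnv` environment, the uniform cell selection (the post biases towards newer states), and all the parameter values are my own simplifications.

```python
import random

class ChainEnv:
    """Toy deterministic environment: walk left/right along a line from 0."""
    def __init__(self, length=30):
        self.length = length
        self.pos = 0

    def reset(self):
        self.pos = 0
        return self.pos

    def step(self, action):  # action is -1 or +1
        self.pos = max(0, min(self.length, self.pos + action))
        return self.pos


def go_explore(env, iterations=300, explore_steps=5, seed=0):
    rng = random.Random(seed)
    # Archive: visited state -> shortest known action sequence that reaches it.
    archive = {env.reset(): []}
    for _ in range(iterations):
        # 1. Select a previously visited state (uniformly, for simplicity).
        cell = rng.choice(list(archive))
        actions = list(archive[cell])
        # 2. Return to it by replaying stored actions. This needs determinism.
        state = env.reset()
        for a in actions:
            state = env.step(a)
        # 3. Explore from there with purely random actions.
        for _ in range(explore_steps):
            a = rng.choice([-1, 1])
            state = env.step(a)
            actions.append(a)
            if state not in archive or len(actions) < len(archive[state]):
                archive[state] = list(actions)
    return archive


archive = go_explore(ChainEnv())
print(len(archive), max(archive))
```

Step 2 is where the assumptions live: replaying an action list only gets you back to the same state if the environment is deterministic.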

Finally, there is an optional robustification step. In practice, the trajectories learned from this approach can be brittle to small action deviations. To make the learned behavior more robust, we can do self-imitation learning, where we take trajectories learned in a deterministic environment, and learn to reproduce them in randomized versions of that environment.

Where is the Controversy?

I think this motivation is sound, and I like the thought experiment. The controversy lies within the details of the approach. Specifically,

1. How do you represent game states within your memory, in a way that groups similar states while separating dissimilar ones?

2. How do you successfully return to previously visited states?

3. How is the final evaluation performed?

Point 1 is very mild. They found that simply downsampling the image to a smaller image was sufficient to get a good enough code: an 8 x 11 grayscale image with 8 pixel intensities.

(Animation from original post)

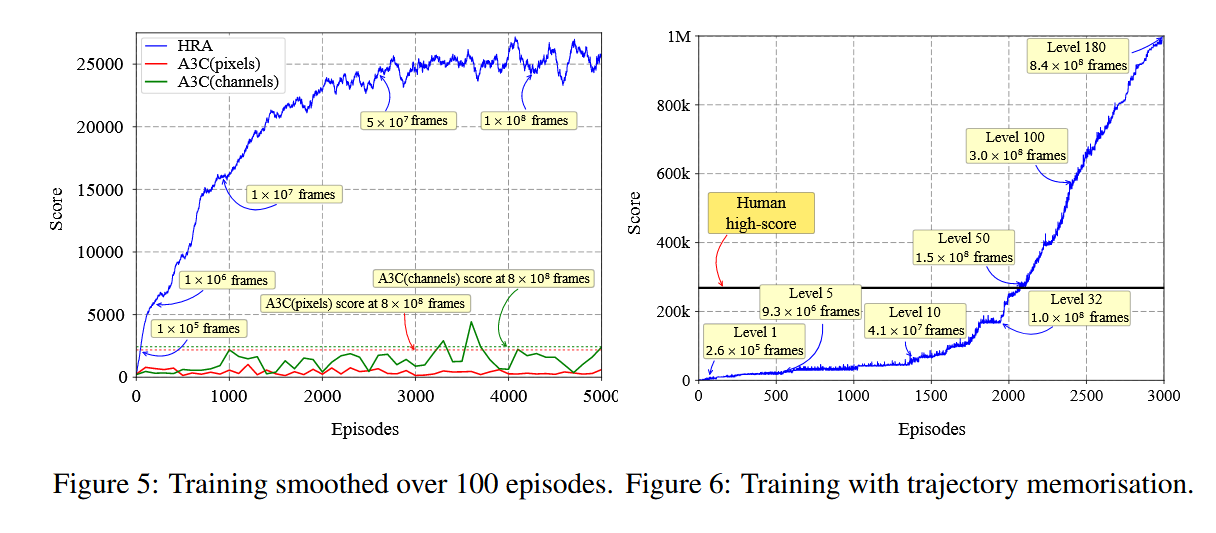

Downsampling is enough to achieve 35,000 points, about three times larger than the previous state of the art, Random Network Distillation.
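As a rough illustration of what such a code could look like, the sketch below average-pools a grayscale frame down to a small grid and buckets each value into 8 intensity levels. The function name, the pooling method, and treating 8 as the height and 11 as the width are my guesses, since the details aren't published yet.

```python
def frame_to_cell(frame, out_h=8, out_w=11, levels=8):
    """Average-pool a grayscale frame to out_h x out_w, then quantize.

    `frame` is a list of rows of 0..255 ints, at least out_h x out_w in size.
    """
    in_h, in_w = len(frame), len(frame[0])
    cell = []
    for i in range(out_h):
        r0, r1 = i * in_h // out_h, (i + 1) * in_h // out_h
        for j in range(out_w):
            c0, c1 = j * in_w // out_w, (j + 1) * in_w // out_w
            block = [frame[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            mean = sum(block) / len(block)
            cell.append(int(mean * levels / 256))  # bucket into 0..levels-1
    return tuple(cell)  # hashable, so it can key a dict-based memory


# Made-up 16x22 frames: b differs from a by a tiny amount, c by a lot.
frame_a = [[100] * 22 for _ in range(16)]
frame_b = [row[:] for row in frame_a]
frame_b[0][0] = 104
frame_c = [row[:] for row in frame_a]
for r in range(2):
    for c in range(2):
        frame_c[r][c] = 255

print(frame_to_cell(frame_a) == frame_to_cell(frame_b))
print(frame_to_cell(frame_a) == frame_to_cell(frame_c))
```

Small pixel differences collapse into the same code, while a visibly different frame gets its own entry, which is the grouping behavior the memory needs.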

However, the advertised result of 2,000,000 points on Montezuma’s Revenge includes a lot of domain knowledge, like:

- The x-y position of the character.

- The current room.

- The current level.

- The number of keys held.

This is a lot of domain-specific knowledge for Montezuma’s Revenge. But like I said, this isn’t a big deal, it’s just how the results are ordered in presentation.

The more significant controversy is in points 2 and 3.

Let’s start with point 2. To return to previous states, three methods are proposed:

- Reset the environment directly to that state.

- Memorize and replay the sequence of actions that take you to that state. This requires a deterministic environment.

- Learn a goal-conditioned policy. This works in any environment, but is the least sample efficient.

The first two methods make strong assumptions about the environment, and also happen to be the only methods used in the reported results.

The other controversy is that reported numbers use just the 30 random initial actions commonly used in Atari evaluation. This adds some randomness, but the SOTA they compare against also uses sticky actions, as proposed by (Machado et al, 2017). With probability \(\epsilon\), the previous action is executed instead of the one the agent requests. This adds some randomness to the dynamics, and is intended to break approaches that rely too much on a deterministic environment.
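Sticky actions are simple enough to sketch as a wrapper. This follows the description above. The class names, the `reset`/`step` interface, and the default value of `epsilon` are assumptions of mine, not any particular library's API.

```python
import random

class RecordingEnv:
    """Stand-in environment that just logs which actions were executed."""
    def __init__(self):
        self.executed = []

    def reset(self):
        self.executed = []
        return 0

    def step(self, action):
        self.executed.append(action)
        return len(self.executed)


class StickyActions:
    """With probability epsilon, repeat the previous action instead of the request."""
    def __init__(self, env, epsilon=0.25, seed=None):
        self.env = env
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.prev_action = None

    def reset(self):
        self.prev_action = None
        return self.env.reset()

    def step(self, action):
        if self.prev_action is not None and self.rng.random() < self.epsilon:
            action = self.prev_action  # ignore the request, repeat last action
        self.prev_action = action
        return self.env.step(action)


def run(epsilon, requested):
    env = StickyActions(RecordingEnv(), epsilon=epsilon, seed=0)
    env.reset()
    for a in requested:
        env.step(a)
    return env.env.executed

print(run(0.0, [0, 1, 2, 3]))  # no stickiness: executes exactly what was asked
print(run(1.0, [0, 1, 2, 3]))  # fully sticky: the first action repeats forever
```

A memorized action sequence replays perfectly in the first case and falls apart as `epsilon` grows, which is exactly what the evaluation setting is meant to punish.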

Okay, So What’s the Big Deal?

Those are the facts. Now here are the opinions.

First, it’s weird to have a blog post released without a corresponding paper. The blog post is written like a research paper, but the nature of these press release posts is that they present the flashy results, then hide the ugly details within the paper for people who are more determined to learn about the result. Was it really necessary to announce this result without a paper to check for details?

Second, it’s bad that the SOTA they compare against uses sticky actions, and their numbers do not use sticky actions. However, this should be easy to resolve. Retrain the robustification step using a sticky actions environment, then report the new numbers.

The controversy I care about much more is the one around the training setup. As stated, the discovery of novel states heavily relies on using either a fully deterministic environment, or an environment where we can initialize to arbitrary states. The robustification step also relies on a simulator, since the imitation learning algorithm used is the “backward” algorithm from Learning Montezuma’s Revenge from a Single Demonstration, which requires resetting to arbitrary states for curriculum learning reasons.

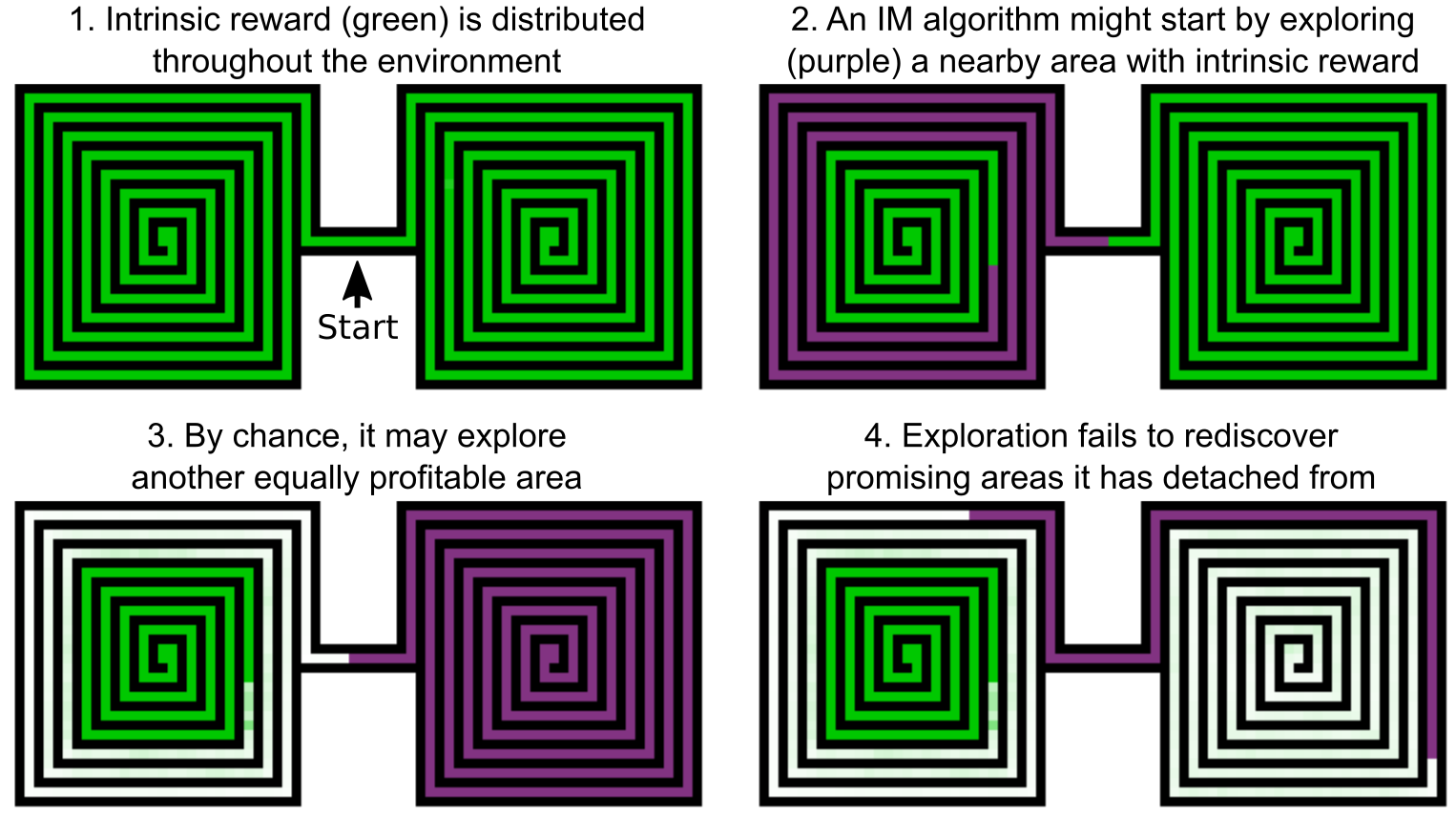

(Diagram from original post. The agent is initialized close to the key. When the agent shows it can reach the end state frequently enough, it is initialized further back in the demonstration.)

It’s hard to overstate how big of a deal these simulator initializations are. They can matter a lot! One of the results shown by (Rajeswaran & Lowrey et al, 2018) is that for the MuJoCo benchmarks, wider state initialization gives you more gains than pretty much any change between RL algorithms and model architectures. If we think of simulator initialization as a human-designed state initialization, it should be clear why this places a large asterisk on the results when compared against a state-of-the-art method that never exploits this feature.

If I were arguing for Uber, I would say that this is part of the point.

While most interesting problems are stochastic, a key insight behind Go-Explore is that we can first solve the problem, and then deal with making the solution more robust later (if necessary). In particular, in contrast with the usual view of determinism as a stumbling block to producing agents that are robust and high-performing, it can be made an ally by harnessing the fact that simulators can nearly all be made both deterministic and resettable (by saving and restoring the simulator state), and can later be made stochastic to create a more robust policy

This is a quote from the press release. Yes, Go-Explore makes many assumptions about the training setup. But look at the numbers! It does very, very well on Montezuma’s Revenge and Pitfall, and the robustification step can be applied to extend to settings where these deterministic assumptions are less true.

To this I would reply: sure, but is it right to claim you’ve solved Montezuma’s Revenge, and is robustification a plausible fix to the given limitations? Benchmarks can be a tricky subject, so let’s unpack this a bit.

In my view, benchmarks fall along a spectrum. On one end, you have Chess, Go, and self-driving cars. These are grand challenges where we declare them solved when anybody can solve them to human-level performance by any means necessary, and where few people will complain if the final solution relies on assumptions about the benchmark.

On the other end, you have the MountainCar, Pendulums, and LQRs of the world. Benchmarks where if you give even a hint about the environment, a model-based method will instantly solve it, and the fun is seeing whether your model-free RL method can solve it too.

These days, most RL benchmarks are closer to the MountainCar end of the spectrum. We deliberately keep ourselves blind to some aspects of the problem, so that we can try our RL algorithms on a wider range of future environments.

In this respect, using simulator initialization or a deterministic environment is a deal breaker for several downstream environments. The blog post says that this approach could be used for simulated robotics tasks, and then combined with sim-to-real transfer to get real-world policies. On this front, I’m fairly pessimistic on how deterministic physics simulators are, how difficult sim-to-real transfer is, and whether this gives you gains over standard control theory. The paradigm of “deterministic, then randomize” seems to assume that your deterministic solution doesn’t exploit the determinism in a way that’s impossible to reproduce in the stochastic version of the environment.

A toy example here is something like an archery environment with two targets. One has a very tiny bullseye that gives +100 reward. The other has a wider bullseye that gives +50 reward. A policy in a deterministic environment will prefer the tiny bullseye. A policy in a noisy environment will prefer the wider bullseye. But this robustification paradigm could force the impossible problem of hitting a tiny bullseye when there’s massive amounts of unpredictable wind.
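To put rough numbers on the archery example, here is a back-of-the-envelope sketch. I'm assuming a one-dimensional target, a shot that lands at a Gaussian offset from the point of aim with standard deviation `sigma`, and made-up bullseye radii of 0.1 and 1.0. Only the +100 and +50 rewards come from the example.

```python
import math

def expected_reward(reward, radius, sigma):
    """Expected reward when aiming at the center of a bullseye.

    The shot lands at offset X ~ Normal(0, sigma^2) and scores `reward`
    if |X| <= radius, so E[reward] = reward * erf(radius / (sigma * sqrt(2))).
    """
    if sigma == 0:
        return float(reward)  # deterministic: the shot always hits
    return reward * math.erf(radius / (sigma * math.sqrt(2)))

for sigma in (0.0, 1.0):
    tiny = expected_reward(100, radius=0.1, sigma=sigma)
    wide = expected_reward(50, radius=1.0, sigma=sigma)
    print(f"sigma={sigma}: tiny={tiny:.1f}, wide={wide:.1f}")
```

With no noise the tiny bullseye wins 100 to 50. With `sigma=1.0` its expected reward drops to roughly 8 while the wide one stays around 34, so imitating the deterministic solution means chasing the worse target.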

This is the main reason I’m less happy than the press release wants me to be. Yes, there are contributions here. Yes, for some definition of the Montezuma’s Revenge benchmark, the benchmark is solved. But the details of the solution have left me disillusioned about its applicability, and the benchmark that I wanted to see solved is different from the one that was actually solved. It makes me feel like I got clickbaited.

Is the benchmark the problem, or are my expectations the problem? I’m sure others were disappointed when Chess was beaten with carefully designed tree search algorithms, rather than “intelligence”. I’m going to claim the benchmark is the problem, because Montezuma’s Revenge should be a simple problem, and it has analogues whose solution should be a lot more interesting. I believe a solution that uses sticky actions at all points of the training process will produce qualitatively different algorithms, and a solution that combines this with no control over initial state will be worth paying attention to.

In many ways, Go-Explore reminds me of the post for the Hybrid Reward Architecture paper (van Seijen et al, 2017). This post also advertised a superhuman result on Atari: achieving 1 million points on Ms. Pac-Man, compared to a human high score of 266,330 points. It also did so in a way relying on trajectory memorization. Whenever the agent completes a Pacman level, the trajectory it executed is saved, then replayed whenever it revisits that level. Due to determinism, this guarantees the RL algorithm only needs to solve each level once. As shown in their plots, the trick is the difference between 1 million points and 25,000 points.

Like Go-Explore, this post had interesting ideas that I hadn’t seen before, which is everything you could want out of research. And like Go-Explore, I was sour on the results themselves, because they smelled too much like PR, like a result that was shaped by PR, warped in a way that preferred flashy numbers too much and applicability too little.

-

Stories From the Neopets Economy

What is Neopets?

Neopets is a website about, well, Neopets, which are cute animals you can own as pets. The main currency of Neopets is Neopoints, abbreviated NP. You earn NP from playing Flash games, getting lucky from random events, and playing the market.

It was biggest in the early 2000s, then faded from relevance, but it’s still around. I like to check it out now and then, mostly for nostalgia reasons. It’s pretty interesting. Depending on where you look you can see the evolution in web design between the old and new parts of the site. (I should warn you that Neopets currently doesn’t support HTTPS and it sends passwords in plaintext, so be careful.)

What you do in Neopets is up to you. There’s no clear goal to the game, and I think that’s why it manages to stick around. Everyone who’s stuck around has found something that pulls them in. Some just like the Flash games. Others become collectors of weird items. A few (like myself) get into the battling scene, leveling up their pet at the Neopets training schools and saving up Neopoints for powerful weapons.

Like I said. It’s all up to you.

What isn’t up to you are the laws of supply and demand. In the end, most goals cost Neopoints, and any game that’s run for 18 years is bound to have its share of economic stories.

That Time Neopets Changed Pet Art, Creating a New Scarce Resource

In 2007, the Neopets team standardized Neopet artwork. All Neopets of a species would have the same pose, regardless of color. This was done to reduce the art load for features like clothing. With a standard pose, the clothes can simply be overlaid on top of the Neopet, reducing the combinatorial complexity of the art assets. Many outfits are only buyable with NeoCash, the pay-to-win currency, so there are some pretty clear monetary reasons involved.

Some users got angry. Many were drawn to their Neopets because of what they looked like, and some felt that the new artwork lost some of the charm and individuality of the old art. As a comparison, here is some old artwork for the Darigan color.

Here is the new artwork for the same set of Neopets.

You can see that the style is preserved, but the poses are more homogenized. Personally, I don’t think this is a big deal, but I’m not big on pet aesthetics.

Not all the new art was ready at launch time, so some pets kept their old art. As an apology of sorts, the Neopets team made conversion of these pets optional. These pets became known as UC pets, for unconverted.

Since new UC pets are impossible to create, there’s a fixed supply, and where there’s a fixed supply, there’s speculators. Owning a UC pet is a big deal. Some genuinely prefer the old art, whereas others simply treat them as a valuable bargaining chip in the pet trading market.

You can read more about this topic from this Reddit thread and the Neopets wiki. When researching this, I even found a page where you can buy UC pets, with classifications based on how well the Neopet is named. I find this ridiculous, yet excellent.

That Time People Abused a Duplication Glitch and Crashed the High-End Economy

In 2014, Neopets was sold from Viacom to JumpStart. During the transition period, the site was lagging horribly, and a bunch of things…just stopped working.

Games: broken (HSTs not reset)

Restocking: broken (only restocking once an hour or so)

User shops: a little broken (overstocking)

Battledome: broken forever (not really, it’s just a little glitchy)

Keyquest: still broken (broken since transition)

Contests (Art Gallery, Random Contest, etc.): broken (submissions don’t work)

Galleries: broken (you can add/remove but you can’t rank)

Petpages: NEIN NEIN NEIN (you cannot edit petpages. at all. no petpages for you.)

Neomail: bit messy (neomails displaying as “read” instead of “replied”)

Wishing Well: worst genie ever (has not granted wishes in days)

Employment Agency: everyone is on state benefits (no job listings)

Guilds: pretty broken (rank names and layouts can not be edited)

Pound: having numerous issues. (adopting neopets is not a good idea right now)

Our hearts: broken (TNT WHYYYYYYYY)

During the lag, a few users discovered that if you sent a bunch of requests at a fast enough rate, the servers would get confused and duplicate items. Several Neopets items cost hundreds of millions of Neopoints, and a few trolls started duplicating items and selling them at deliberately low prices.

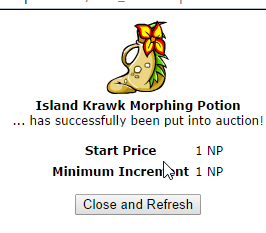

Credit to this Reddit thread and this article for documenting some of the madness. First they auctioned some morphing potions for cheap.

(Current price: 820,000 NP)

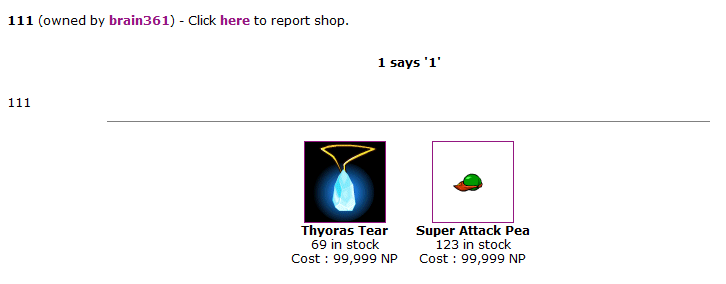

Then, they duplicated powerful Battledome items.

(Current price: 28.5 million NP for Thyoras Tear, hundreds of millions for Super Attack Pea)

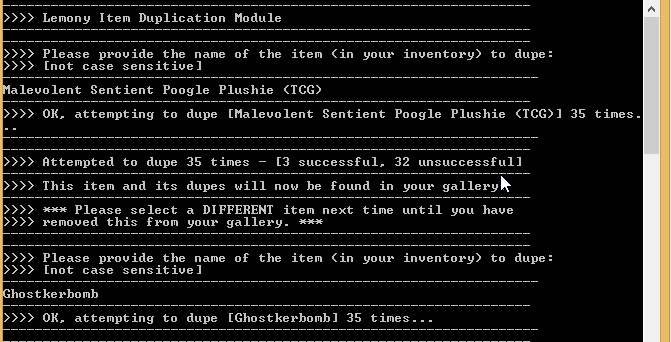

It got worse when people started releasing scripts to automate dupe attempts.

You have to imagine how disappointed some cheaters were. Here they are, trying to silently exploit a glitch to make millions, and then the trolls show up and ruin everything by making the exploit super obvious. Like, ugh, couldn’t they exploit their MMO-breaking bug in peace? The glitch got patched immediately.

And by immediately, I mean 4 days later.

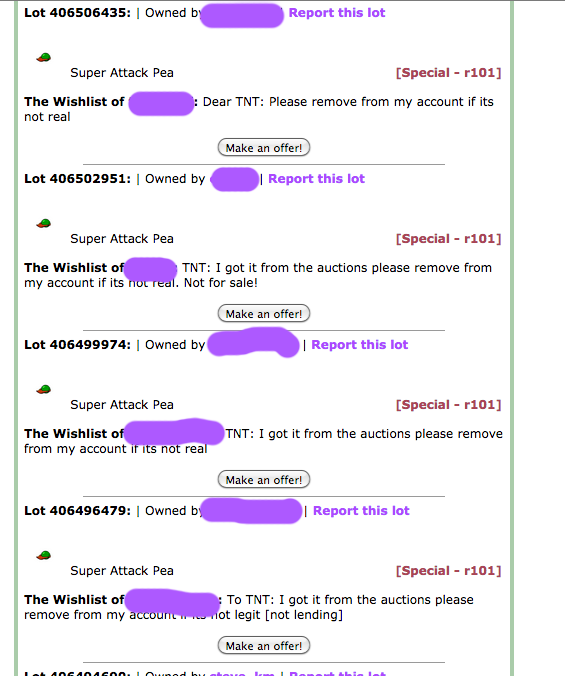

Also, they didn’t roll back the servers. Instead, they banned the abusers, removed all the obvious-looking duplicates, and warned that anyone taking advantage of the glitch may be banned. It can take months to hear back from Neopets support to appeal your ban, thanks to understaffing and a massive backlog. There was some paranoia.

There are reasons not to do a rollback. It’s a nuclear option that’s very disruptive for other users. However, it’s also one of the few ways to guarantee invalid items are no longer in circulation. Users adopted smell tests, based on whether the price was too good to be true, or if the account seemed too young to own a valuable item. This only goes so far. Once a dupe gets laundered through a few users, it’s hard to identify it as a fake.

Ironically, by avoiding the nuclear option, the Neopets team ended up creating a radioactive fallout around high-end items, since buying them came with the risk of losing your account. It took several months before people felt safe buying these items. If you check prices, it’s obvious that some dupes are still out there, but what can you do? Super Attack Peas used to be over 1 billion NP and now they’re about one-fourth of the price. That’s just a fact of life now.

That Time Neopets Decreased Sale Friction and Prices Dropped Accordingly

For a long, long, long, looooooooooong time, the max price of items in user shops was 99,999 NP. For anything more expensive, you had to sell on the Auction House or Trading Post. In practice, the Auction House is only used to close already agreed-upon deals from the Trading Post, leaving the Trading Post as the de-facto selling ground.

When I played more actively, I found this very annoying, because it takes so much longer to buy items from the Trading Post. Here’s how you buy items from shops.

- Go to the Shop Wizard and search for your item.

- It gives you listings in order of lowest price.

- Buy the cheapest one. The transaction is instant.

Here’s how the Trading Post works.

- Go to the Trading Post and search for your item.

- It gives you listings where people write how much they want, except most people just say “neomail me offers”, which makes it hard to figure out the market price.

- You haggle with the seller in a Neomail back-and-forth.

- You get the item a few hours or days later, depending on how much your activity overlaps with the other user.

In short, there’s a lot of friction. There’s also information asymmetry. When a deal closes, it isn’t publicized. Only the people involved in that transaction know the final terms. Sellers ask for more NP than the item is actually worth, because it’s hard to prove them wrong, and it’s up to the buyer to propose the right price. The Shop Wizard is buyer-favored. The Trading Post is seller-favored.

Recently, the max shop price was increased from 99,999 NP to 999,999 NP. It was a great change that improved accessibility of several items, leading to price drops across the board.

I think the thing most people don’t realize is that honestly, it hasn’t caused items to go down in value, these are what the values truly should have been.

With the trading post, you don’t see the true scarcity / supply of the item because people have to keep posting trade lots, if they have a bunch of items to sell they take it down to put up other items, items sitting in SDBs, etc.

Upping the shop price limit essentially meant that we will finally see closer to the real supply of the item(s) and thus, the market will determine the price.

The TP is abused a lot to hide the true supply of the item and make it appear as if there is less of something than there is, resulting in an inflated price. (Like when people buy up all of an item off the SSW before the price increase and then say “Oh give me 300k+”. The price difference between shops and trading post allowed for this manipulation)

So honestly, all it did was allow the market to actually determine values instead of letting users manipulate the perceived supply and inflate prices.

I’m all for it

I was selling Turned Tooths at the time, and it was pretty amazing to see all the Turned Tooths disappear from the Trading Post, then reappear in the Shop Wizard at 60% of the asking price. I knew they weren’t as valuable as everyone wanted them to be, but it was something else to see it happen live.

That Time a Book Got 1000 Times More Expensive Because of Charity

Here’s a secret about Neopets: 99% of the items are useless.

Despite this, some useless items still have high prices, because of their rarity. Neopets has a built-in rarity system, explained here. For our purposes, the important rarities are rarities 1-99. These items are sold from NPC shops, and the rarity defines how often that item is in stock. Rarity 99 items restock very rarely, giving them an allure of their own. Many users like filling out their Galleries with all the rarity 99 items they can get.

Let’s talk about the Charity Corner. It’s a yearly event where you can donate gifts to Neopians who need it. Awwwww.

The way it works changes each year, but here’s how it worked in 2015 and 2017. Donatable items fell in three rarity bands: r70-79, r80-89, and r90-99. The Charity Corner only accepted a few categories of items, one of which was books. For every five books you donate, you get a random item sampled from the rarity band you donated. For example, you donate five rarity 90 books, and you get a random item from rarity 90 to 99.

This sampling wasn’t weighted by rarity, so this gave decent chances of getting a rarity 99 item, and a lot of cheap items have rarities in the low 90s.
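To see why the lack of weighting matters, here’s a minimal sketch. The prize pool counts are invented for illustration (I don’t have the real Charity Corner prize list), and the weighted alternative is just one hypothetical scheme the team could have used instead.

```python
# Hypothetical prize pool: number of distinct items at each rarity.
# These counts are made up for illustration, not real Neopets data.
POOL_COUNTS = {90: 40, 91: 30, 92: 30, 93: 20, 94: 20,
               95: 15, 96: 15, 97: 10, 98: 10, 99: 10}


def build_pool(counts):
    """Expand the counts into a flat list of (name, rarity) items."""
    return [(f"item_r{r}_{i}", r) for r, n in counts.items() for i in range(n)]


def chance_of_r99_uniform(pool):
    """Every item is equally likely, so the chance is the r99 share of the pool."""
    return sum(1 for _, r in pool if r == 99) / len(pool)


def chance_of_r99_weighted(pool):
    """Hypothetical alternative: weight each item by (100 - rarity),
    so rarer items are drawn less often."""
    weights = [100 - r for _, r in pool]
    r99_weight = sum(w for (_, r), w in zip(pool, weights) if r == 99)
    return r99_weight / sum(weights)


pool = build_pool(POOL_COUNTS)
print(f"uniform:  {chance_of_r99_uniform(pool):.2%}")
print(f"weighted: {chance_of_r99_weighted(pool):.2%}")
```

With these made-up numbers, uniform sampling hands out rarity 99 items several times more often than the weighted version would, which is the gap users were exploiting.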

No surprises for what happened next: users began buying out the cheapest rarity 90 items they could find, swarming to the next cheapest item once users adjusted their prices accordingly.

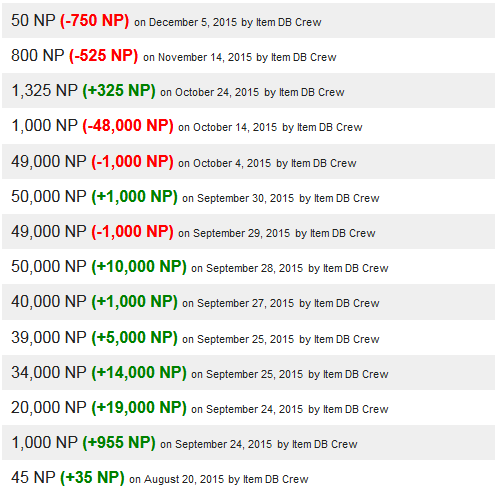

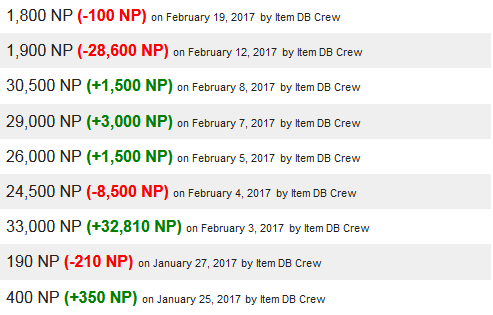

JellyNeo tracks historical logs of item prices. Here’s the 2015 price log for Realm of the Water Faeries, a rarity 92 book. Can you guess when Charity Corner ran that year?

A price spike from 45 NP to 50,000 NP. No big deal, just a 1000x increase. Here’s the 2017 spike, a more modest 100x increase from 300 NP to 30,000 NP.
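For the curious, here’s the arithmetic behind those multiples, using only the before and after prices quoted above. The function name is just for illustration.

```python
def price_multiple(before, after):
    """How many times more expensive the item got."""
    return after / before


# (price before, price at peak) in NP, as quoted in the post
spikes = {2015: (45, 50_000), 2017: (300, 30_000)}

for year, (before, after) in spikes.items():
    multiple = price_multiple(before, after)
    print(f"{year}: {before} NP -> {after:,} NP is about {multiple:,.0f}x")
```

The 2015 spike works out to a bit over 1,100x, so "1000x" is rounding down.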

I didn’t participate in Charity Corner, but it ended up affecting me when I was shopping for Battledome weapons. I wanted to buy a Wand of the Dark Faerie. It used to cost 350 million NP, way more than I ever thought I could achieve. These wands are given out for reaching level 50 in the Jhudora quests. Part of the reason they’re so expensive is because you need several rarity 99 items to reach level 50. The increase in rarity 99 items from Charity Corner ended up decreasing the price to 40 million NP. Still a lot, but a lot more manageable.

That Time A User Donated a Billion Neopoints to Help Save the Economy

All MMO economies are defined by their sources and sinks. How does in-game currency get created, and how does it get deleted?

Take MapleStory, a game I played a lot in my youth. Monsters create money when they die. NPC shops remove money when they sell you healing potions. Whenever you trade with a user, a small percentage of the transaction is taken as tax.

Monsters and NPCs are parts of all RPGs. But why is there a user tax? The more monsters killed, the more money there is in the economy. In MapleStory, the in-game currency is called Mesos. When there are more Mesos, each individual Meso has less buying power. The tax helps keep inflation in check by removing Mesos from the overall economy.

In Neopets, Neopoints enter the system through Flash games, and leave from NPC shops. There’s no transaction tax, so inflation is only held in check by whether users spend money at NPC shops or not.
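Here’s a toy model of the difference, as a rough sketch. Every number in it is an assumption picked for illustration: a fixed amount of currency enters each day, a fixed amount leaves through NPC shops, and some fraction of the total supply changes hands between users and gets taxed. Real game economies are messier than this.

```python
def simulate_supply(days, faucet, npc_spend, trade_fraction, tax_rate):
    """Return the currency in circulation at the end of each day.

    faucet:         currency created per day (monsters, Flash games)
    npc_spend:      currency removed per day at NPC shops
    trade_fraction: share of the supply traded between users each day
    tax_rate:       share of each user trade that is deleted as tax
    """
    supply = 0.0
    history = []
    for _ in range(days):
        supply += faucet                              # source
        supply -= min(npc_spend, supply)              # sink: NPC shops
        supply -= supply * trade_fraction * tax_rate  # sink: transaction tax
        history.append(supply)
    return history


# Same faucet and NPC spending, with and without a 2% trade tax.
no_tax = simulate_supply(365, faucet=1500, npc_spend=500,
                         trade_fraction=0.5, tax_rate=0.0)
with_tax = simulate_supply(365, faucet=1500, npc_spend=500,
                           trade_fraction=0.5, tax_rate=0.02)

print(f"after a year, no tax:   {no_tax[-1]:,.0f}")
print(f"after a year, with tax: {with_tax[-1]:,.0f}")
```

Without the tax, the supply in this model grows by the same amount every day forever. With the tax, the amount removed scales with the supply, so the total levels off instead of growing without bound.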

Remember that NPC shops restock items of varying rarities. The NPC price for rare items is much lower than the true market price, leading to a practice called restocking. You refresh a shop page until rare items appear in stock, buy them for cheap, then sell them to users at a much higher price.

As a kid, I thought restocking was magical. I played Neopets with my friends at the local library, because my local library had a super fast Internet connection. In a Neopets restock war, milliseconds matter. It was basically high-frequency trading, except performed by millions of children instead of bots.

As an adult, I now recognize the profit from restocking is basically the equivalent of scalping. There’s arbitrage between the NPC market and the user market and you’re getting compensated for the time spent.

From a game design standpoint, scalping is actually good for the game, since it gives new players another way to earn Neopoints. But the low NPC shop prices meant that NP wasn’t leaving as quickly as it needed.

Users with enough economics knowledge to recognize the problem petitioned the Neopets team to add more NP sinks to the game. They obliged. Here are two I like a lot.

The Lever of Doom: Pulling this lever makes you lose 100 NP. That’s all it does.

Why would you even pull this lever? Well, every pull has a small probability of giving you a Lever of Doom avatar you can use on the Neopets message boards. That was enough to get avatar hunters to spend millions of NP chasing the avatar.

Would you pay for this? I wouldn’t. But you do you.
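If each pull of the lever is an independent chance at the avatar, the number of pulls until success follows a geometric distribution. Here’s a quick sketch of the expected cost. The 100 NP per pull is from above, but the avatar probabilities below are placeholders, not the real odds.

```python
PULL_COST = 100  # NP lost per pull


def expected_cost(p):
    """Expected NP spent until the first success, given per-pull
    probability p. A geometric distribution has mean 1/p pulls."""
    if not 0 < p <= 1:
        raise ValueError("p must be in (0, 1]")
    return PULL_COST / p


def chance_within_budget(p, budget):
    """Probability of getting the avatar before the budget runs out."""
    pulls = budget // PULL_COST
    return 1 - (1 - p) ** pulls


# Hypothetical per-pull probabilities, for illustration only.
for p in (0.01, 0.001, 0.0001):
    print(f"p={p}: expect to spend about {expected_cost(p):,.0f} NP, "
          f"{chance_within_budget(p, 100_000):.0%} chance within 100,000 NP")
```

The smaller the probability, the more NP the lever deletes per avatar granted, which is exactly what you want from a sink.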

The Hidden Tower Grimoires: The Hidden Tower is the most expensive NPC shop in the game, selling items for millions of NP. Unlike other shops, all Hidden Tower items are always in stock. This reverses the dynamic - most Hidden Tower items are buyable from users at a slight discount from the base price. Bad for inflation, since users don’t spend money at the NPC store.

The Hidden Tower Grimoires are books, and unlike the other Hidden Tower items, they’re entirely untradable. You can only buy them from the Hidden Tower, with the most expensive one costing 10,000,000 NP. Each book grants an avatar when read, disappearing on use.

Remember the story of the I Am Rich iOS app, that cost $1000, and did literally nothing? Buying the most expensive Grimoire for the avatar is just pure conspicuous consumption. I think these avatars were actually a pretty smart move. They target the top 1% by creating a new status symbol.

Alone, these two weren’t enough, so in 2010, the team decided to go all out, with a month-long Save the Wheels Campaign.

The Wheels are a site feature where you pay a small NP fee to spin a wheel to get prizes. They’ve been around since the start of the game, and they needed a visual rework.

Seeing an opportunity, the team branded the rework as a renovation project. Users who donated Neopoints would receive thank-you gifts from the Neopian Conservation Society, and the top 10 donors would be listed on a public leaderboard. The most expensive thank-you gift required donating 2,000,000 NP at once.

There are lots of user stories about people who come back to the site, dig through their storage, and discover an item is now worth millions of Neopoints. For example, it could be a stamp from some random event, and now it’s part of a stamp album that stamp collectors want, and they were only given out from that random event ten years ago. Any time Neopets runs something that gives out items which will never be given again, you can be sure that some users will get as many as they can as a long-term investment strategy.

You can see the final tally at the official Neopets page for the event. Users donated 12,371,694,534 NP in total, with the top user donating over a billion NP by themselves.

And okay, sure, it’s not like they asked users to donate NP for the good of the economy. It was in exchange for stuff that speculators wanted. But I still find the entire event very amusing.

* * *

There isn’t really a point to this post. It was mostly an excuse for me to dig up Neopets history. But if I had to make one, it would be that it’s very, very hard to escape economics. It has this nasty habit of showing up everywhere you look.

There have been other posts about the Neopets economy. There’s this one about inflation, and this one about the dangers of unrestricted capitalism.

In one sense, yes, there’s definitely a Neopets top 1% that barters in millions while others are earning 5000 NP a day. As noted by this editorial, this top 1% barely interacts with the Neopets proletariat. (I certainly never expected to write about the Neopets proletariat, but here we are.) It’s definitely disheartening to realize how much more the wealthiest users have.

On the other hand, Neopets doesn’t cost anything to play. Feeding your Neopets costs 5 NP a day, and they don’t die if you don’t feed them. The Flash games are entirely free to play. The strongest criticism of unrestricted capitalism is that some are forced into terrible conditions to earn enough to live, but this just isn’t a thing in Neopets, since there isn’t any cost to living.

Sure, not everyone on Neopets has what they want. But Neopets is a game. It only works as a game because it requires effort to get many of the things it offers. If there’s no challenge in getting things in the game, why would anyone play it?

A similar sentiment was explained in this profanity-filled, slightly NSFW Zero Punctuation review of Minecraft.

If you can just clap your hands and summon fifty explosive barrels, the spectacle is as fulfilling as eating your own snot. Minecraft is a responsible parent. It knows you’ll swiftly get bored if you just get whatever you want, so it pays you five dollars a week to wash its cars so that you can gain an appreciation for value.

I’d hesitate to generalize any lessons from the Neopets economy to the real world economy. There are too many differences for the comparison to have value. If anything does transfer, it’s that we like having goals, something to aim for that takes work to achieve. And, it’s that not everyone can get what they want. Not the happiest ending, but what do you expect out of the dismal science?