
A Puzzlehunt Tech Checklist

Last updated March 29, 2020

I have played my fair share of online puzzlehunts, and after helping run Puzzles are Magic, I’ve learned a lot of things about what makes a good hunt.

There are several articles about puzzle design, but as the tech person for Puzzles are Magic, I haven’t seen any articles about building a hunt website. I suspect that everyone builds their setup from intuition. That intuition is usually correct (I’ve yet to see an entirely broken tech setup), but there are a lot of details to keep track of.

This is a list of those details. My aim is to be thorough in covering the lessons I learned when building the Puzzles are Magic site.

If you are reading this, I’m assuming that you know what puzzlehunts are, you’ve done a few puzzlehunts yourself, and are either interested in running an online puzzlehunt, or are just curious what the tech looks like.

(Fair warning: I’ll be making a lot of references to Puzzles are Magic, as well as other puzzles, and there will be minor spoilers.)

Hunts are Web Apps

The most important thing to remember about a puzzlehunt is that a puzzlehunt is essentially a full web application. There’s a front-end that solvers interact with, there’s a back-end that stores submission and solve info, and the two need to send the right information to each other for your hunt to work.

If you want to run an online puzzlehunt with teams, a public facing leaderboard, and so on, you’ll need to know how to set up a website. Or, you’ll need to be eager to learn.

If you don’t know how to program, and can’t enlist the help of someone who can, then your job will get a lot harder, but it won’t be impossible. If worst comes to worst, you can always just release a bunch of PDFs online, and ask people to email you if they want to check answers. The PI HUNT I worked on this weekend followed this model, and I still had fun.

The standard rules of software development apply, and the most important one is this: you don’t have to do everything! You’re going to run out of time for some things. That’s always how it goes. Keep your priorities in order, and do your best.

Using Existing Code

If you are planning to make minor changes on top of someone else’s hunt code, think again.

Puzzlehunt coding is like video game coding. It starts sane and well-designed, and becomes increasingly hacky closer to the hunt deadline. This is especially true if the hunt has interactive puzzles or is trying to do something new with its puzzle structure. In the end, functionality matters more than code quality, and since hunts are one-time events, there usually isn’t much incentive to invest in clean code.

Additionally, much of the work for a puzzlehunt is about styling the puzzles and site to fit what you want, and that’s work you have to do yourself, no matter what code base you use.

As far as I know, the only open-source puzzlehunt codebase is Puzzlehunt CMU’s, which is available on GitHub. Expect most other people to be hesitant about sharing their hunt code. Or at least, I’m hesitant to share the Puzzles are Magic code. I’m happy with the core, but there are a ton of hardcoded puzzle IDs and other things to make the hunt work as intended. I’ve considered releasing the code as-is, warts and all, but even then I’d need to audit the code to verify I’m not leaking any hardcoded passwords, secret keys, user data, puzzle ideas I want to use again, and so on.

The Puzzles are Magic code is forked from the Puzzlehunt CMU code, with several modifications. It’s written in Django and served using Apache. Both are free and very well tested, so they’re solid picks.

Authentication

You will need to decide whether you want logins to be person-based, or team-based.

In a person-based setup, each person makes their own account. People can create teams, or join existing teams by entering that team’s randomly generated hunt code. Puzzlehunt CMU’s code does this, as did Microsoft College Puzzle Challenge. The pro is that you get much more accurate estimates of how many participants you have. Both Puzzlehunt CMU and Microsoft CPC are on-campus events where the organizers provide free food, and they need to know how much food to order.

The con is that by requiring every solver to make an account, you increase friction at registration time. Everyone on a team must make an account to participate, and anyone the team wants to recruit during hunt must do the same. Also, some people are generally wary of entering emails and passwords into an unfamiliar website.

In a team-based setup, each team has a single account. There is a shared username and password, chosen by the person who creates the team, and their email is the main point of contact. They share the team’s login credentials around, and team members can optionally add their names and emails on that team’s profile page. This is the setup used by MIT Mystery Hunt, Galactic Puzzle Hunt, and Puzzles are Magic.

Puzzles are Magic went with team-based logins because our main goal was getting as many people as possible to try our puzzles. That meant reducing sign-up friction as much as we could, and redirecting all logins to the puzzle page to get solvers to puzzles as fast as possible.

The downside of team-based logins is that your participant counts will be off. In our post-hunt survey, we asked about team size, and based on those replies, we think only 50-75% of people who played Puzzles are Magic entered their information into the site.

Whatever login setup you go for, make sure you support the following:

- Unicode in team names and person names. I’d go as far as saying this is non-negotiable. Several teams have emoji as part of their team culture, and some teams will use languages besides English. Puzzles are Magic got a few teams from Chinese MLP fans (still not sure how), and they used Chinese characters in their team names.

- Password resets. People forget their password. It happens. This is especially important if you go with team-based logins. Lots of people sign up without realizing they’re creating an account for their entire team.

- HTTPS. There’s really no excuse to not use HTTPS. You may think that no one will try to hack your puzzlehunt website. You’ll be correct. However, people reuse passwords when they shouldn’t, and you don’t want packet sniffers to discover any re-used passwords. Just use HTTPS. If you don’t, people will complain, and I will be one of them. Let’s Encrypt is a free certificate authority you can use for this.
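Storing Unicode names is mostly a matter of not mangling them, but detecting duplicate team names takes a little more care. Here is a minimal sketch. It assumes you want names that differ only in case, spacing, or Unicode encoding form to count as duplicates. `team_name_key` and `is_duplicate` are hypothetical helpers, not part of Django or the Puzzlehunt CMU code:

```python
from __future__ import annotations

import unicodedata


def team_name_key(name: str) -> str:
    """Comparison key for spotting duplicate team names.

    NFC normalization merges equivalent encodings (an "é" typed as one code
    point vs. "e" plus a combining accent), casefold() ignores case, and
    split()/join() collapses runs of whitespace. Emoji and CJK characters
    pass through unchanged.
    """
    normalized = unicodedata.normalize("NFC", name)
    return " ".join(normalized.casefold().split())


def is_duplicate(new_name: str, existing_names: list[str]) -> bool:
    key = team_name_key(new_name)
    return any(team_name_key(name) == key for name in existing_names)
```

Only the comparison key is normalized. The team’s name should still be stored and displayed exactly as entered.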

Admin Sites

Not everyone working on your Hunt will know how to program, and even those that do may not want to pull out a computer terminal every time they want to change something. You’ll want admin sites that let you directly modify your database from a web interface.

Django has a default admin site, and Puzzlehunt CMU’s code comes with custom add-ons to this site. We found they covered our use cases with minimal changes.

This is essential for running an online hunt. We used it to fix typos in team names, delete duplicate teams created by people who clearly didn’t read our rules saying they shouldn’t do this, and do live updates to issue errata for puzzles.

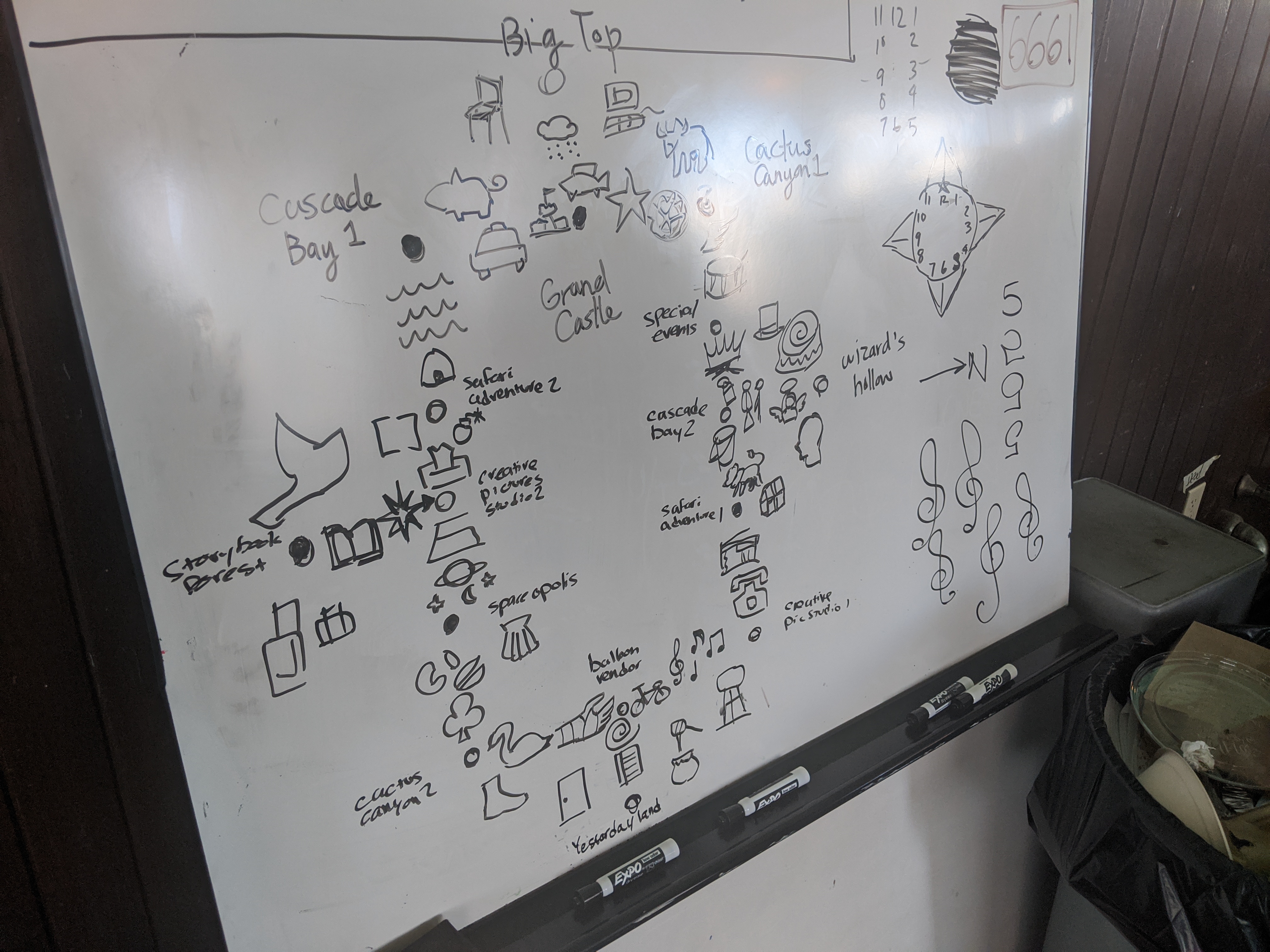

You’ll also want to have a Big Board (a live-updating team progress page), and a Submission Queue (a live-updating list of recent submissions). These are necessary as well. Not only are they incredibly entertaining to watch, they also give you a good holistic view of the health of your hunt - which puzzles do teams get stuck on, how fast are the top teams moving, are there common wrong answers that indicate a need for errata, etc.

Unlock System

In an Australian style hunt, puzzles are unlocked at a fixed time each day, with hints releasing over time. In a Mystery Hunt style hunt, puzzles are unlocked based on previously solved puzzles, potentially with time unlocks as well.

Supporting both time unlocks and unlocks based on solving K out of N input puzzles is enough to cover both cases. You may also want to support manual overrides of the unlock system, for special cases like helping teams that aren’t having fun. See Wei-Hwa’s post about the Tyger Pit for another example where manual unlocks would have helped.
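Both unlock styles can share one rule: a puzzle opens at a fixed time, or once a team has solved K of its N input puzzles, with a manual override on top. Here is a minimal sketch in Python, the language Django uses. `UnlockRule` and `is_unlocked` are hypothetical names, and a real site would store these rules in its database:

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class UnlockRule:
    """Hypothetical unlock rule for a single puzzle.

    unlock_time: the puzzle opens for everyone once this time passes.
    inputs / k:  the puzzle opens once a team solves at least k of inputs.
    Leave unlock_time as None, or inputs empty, to disable that kind of unlock.
    """
    unlock_time: datetime | None = None
    inputs: frozenset[str] = frozenset()
    k: int = 0


def is_unlocked(
    puzzle_id: str,
    rule: UnlockRule,
    solved: set[str],
    now: datetime,
    manual_unlocks: frozenset[str] = frozenset(),
) -> bool:
    # Manual overrides come first, e.g. for a team that is stuck and not having fun.
    if puzzle_id in manual_unlocks:
        return True
    # Time unlock (Australian style).
    if rule.unlock_time is not None and now >= rule.unlock_time:
        return True
    # K-of-N unlock based on solved input puzzles (Mystery Hunt style).
    if rule.inputs and len(rule.inputs & solved) >= rule.k:
        return True
    return False
```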

Answer Submission and Replies

Unless you expect and want to have someone awake 24/7 during Hunt, you’ll want an automatic answer checking system.

For guess limits, you can either give each team a limited number of guesses per puzzle, or you can give unlimited guesses with rate limiting. Both are valid choices. Whatever you do, please, please don’t make your answer checker case-sensitive, and have it strip excess characters before checking for correctness.
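Here is a minimal normalization sketch. It assumes answers use only the letters A to Z and digits; answers in other scripts would need a different rule. `normalize_answer` and `is_correct` are hypothetical helpers:

```python
import re
import unicodedata


def normalize_answer(raw: str) -> str:
    """Uppercase the guess and drop everything except A-Z and 0-9.

    NFKD first splits accented letters into base letter + combining mark,
    so "É" survives as "E" once the mark is stripped.
    """
    decomposed = unicodedata.normalize("NFKD", raw)
    return re.sub(r"[^A-Z0-9]", "", decomposed.upper())


def is_correct(guess: str, answer: str) -> bool:
    normalized = normalize_answer(guess)
    # Never accept a guess that normalizes to nothing (e.g. "!!!").
    return bool(normalized) and normalized == normalize_answer(answer)
```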

Whether you want to confirm partials or not is up to you, but even if you don’t, it’s good to support replies besides just “correct” and “incorrect”, since it opens up new puzzle design space. Example puzzles that rely on custom replies are Art of the Dress from Puzzles are Magic, and The Answer to This Puzzle Is… from GPH 2018.
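Custom replies can be as simple as a per-puzzle lookup table keyed on the normalized guess. Here is a sketch with invented puzzle data. The `PUZZLE` dictionary and its reply strings are hypothetical:

```python
import re


def _normalize(raw: str) -> str:
    # Case-insensitive, ignoring spaces and punctuation: keep only A-Z and 0-9.
    return re.sub(r"[^A-Z0-9]", "", raw.upper())


# Hypothetical per-puzzle data: the answer plus any custom replies.
PUZZLE = {
    "answer": "FRIENDSHIP",
    "replies": {
        "KEEP GOING": "Keep going!",  # a confirmed partial
        "SEND US A PICTURE": "Email us a picture of your team to get the answer.",
    },
}


def check(guess: str, puzzle: dict) -> str:
    key = _normalize(guess)
    if key == _normalize(puzzle["answer"]):
        return "Correct!"
    # Custom replies are matched on the same normalized form as the answer.
    replies = {_normalize(k): v for k, v in puzzle["replies"].items()}
    return replies.get(key, "Incorrect.")
```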

(Tangent: it is generally good for every puzzle hunt to have 1 or 2 puzzles where the answer tells teams to do something, and they get the real answer after doing so. This gives you a back-up in case one of your puzzles breaks before hunt. Most of these puzzles require an answer checker that can send a custom reply.)

If you decide to confirm partials, you’ll encourage teams to guess more, so make sure you aren’t unduly punishing teams for trying to check their work.

Submissions should be tagged with the team that submitted them, and what time they did so, since this will let you reconstruct team progress and submission statistics after-the-fact.
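If every submission is stored as (team, puzzle, guess, correctness, time), most post-hunt statistics are short aggregations. Here is a sketch that uses an in-memory list in place of a database table. `Submission` and the helper functions are hypothetical:

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Submission:
    team: str
    puzzle: str
    guess: str
    correct: bool
    time: datetime


def first_solve_times(subs: list[Submission]) -> dict[tuple[str, str], datetime]:
    """Map (team, puzzle) to the time of that team's first correct submission."""
    solves = {}
    for s in sorted(subs, key=lambda s: s.time):
        if s.correct:
            # setdefault keeps the earliest time, since we iterate in time order.
            solves.setdefault((s.team, s.puzzle), s.time)
    return solves


def common_wrong_answers(subs: list[Submission], puzzle: str, n: int = 3) -> list[tuple[str, int]]:
    """Most frequent incorrect guesses for one puzzle; a spike can signal a need for errata."""
    wrong = Counter(s.guess for s in subs if s.puzzle == puzzle and not s.correct)
    return wrong.most_common(n)
```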

Errata

Everyone tries to make a puzzlehunt without errata, but very few people succeed. Make sure you have a page that lists all errata you’ve issued so far, including the time that errata came out, and have the errata visible from the puzzle page as well.

If you are building an MIT Mystery Hunt style puzzlehunt, where different teams have different puzzles unlocked, your errata should only be visible to teams that have unlocked the puzzle it corresponds to.
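The visibility rule is a filter on the team’s unlocked puzzles. Here is a sketch with a hypothetical `Erratum` record. It sorts newest first, so the errata page also shows when each erratum came out:

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Erratum:
    puzzle: str
    text: str
    issued: datetime


def visible_errata(errata: list[Erratum], unlocked: set[str]) -> list[Erratum]:
    """Errata a team may see: only those for puzzles it has unlocked, newest first."""
    return sorted(
        (e for e in errata if e.puzzle in unlocked),
        key=lambda e: e.issued,
        reverse=True,
    )
```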

When you issue errata, you’ll want to notify teams, which brings me to…

Email

If you have fewer than 100 participants, you can get away with emailing everyone at once, putting their emails into the BCC field. Above 100 participants, you’ll want an email system.

Puzzles are Magic used Django’s built-in email system. Whenever we emailed people, we generated a list of emails, split them into chunks of 80 emails each, then sent them with a 20-second wait between sends. Without the wait time, we found Gmail refused to send our emails, likely because of a spam filter.
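The batching looks roughly like the sketch below, assuming one send per 80-address chunk. The actual sending is passed in as a callback (`send_batch`, a hypothetical parameter) so the logic can be tested without a mail server. `chunk_size` and `delay_s` default to the 80 emails and 20 seconds mentioned above:

```python
from __future__ import annotations

import time
from typing import Callable, Sequence


def send_in_batches(
    addresses: Sequence[str],
    send_batch: Callable[[list[str]], None],
    chunk_size: int = 80,
    delay_s: float = 20.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Send to `addresses` in chunks, pausing between sends. Returns the batch count."""
    batches = 0
    for start in range(0, len(addresses), chunk_size):
        if batches:
            sleep(delay_s)  # wait only between sends, not before the first one
        send_batch(list(addresses[start:start + chunk_size]))
        batches += 1
    return batches
```

In Django, `send_batch` could build a `django.core.mail.EmailMessage` with the chunk as its `bcc` argument and call `.send()`. That pairing is an assumption, not necessarily what Puzzles are Magic did.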

As of this writing, we had to turn off a few security settings and manually unlock a CAPTCHA to get Gmail to be okay with Django sending emails from our hunt Gmail address. None of this was necessary to send emails from the hunt Gmail to itself, so it took us a long time to discover our email setup was broken. Make sure you check sending to email addresses you don’t own!

You may want to integrate a dedicated mailing service, like SendGrid or MailChimp, which will deal with this nonsense for you. I haven’t checked how hard that would be.

You’ll want the ability to email everyone (for hunt wide announcements), everyone on a specific team (if you want to talk to that team), and everyone who has unlocked but not solved a specific puzzle (to notify for errata). You may also want the ability to email everyone who hasn’t reached a certain point in the hunt, to talk to less competitive teams.
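Each of these audiences is a set computation over team data. Here is a sketch that assumes a hypothetical in-memory layout, where each team name maps to its emails, unlocked puzzles, and solved puzzles. A Django version would express the same filters as database queries:

```python
from __future__ import annotations


def everyone(teams: dict) -> list[str]:
    """Hunt-wide announcements."""
    return sorted({email for info in teams.values() for email in info["emails"]})


def team_emails(teams: dict, team: str) -> list[str]:
    """Talking to one specific team."""
    return sorted(set(teams[team]["emails"]))


def unlocked_not_solved(teams: dict, puzzle: str) -> list[str]:
    """Teams that can see a puzzle but haven't solved it, e.g. to announce errata."""
    return sorted({
        email
        for info in teams.values()
        if puzzle in info["unlocked"] and puzzle not in info["solved"]
        for email in info["emails"]
    })
```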

Puzzle Format

For puzzles, you can either go for a PDF-by-default format, or HTML-by-default format.

The upside of PDF-by-default is that you can safely assume PDFs will appear the same to all users. You don’t have to worry about different browsers or operating systems messing up the layout of your puzzle.

Browser compatibility is a huge pain, but if you’re running an online hunt, I still advocate for an HTML-by-default puzzlehunt. It requires more work, but comes with these advantages.

- You can more easily support “online-only” experiences. In my opinion, if you’re making an online hunt, you should create something that only works online, to differentiate it from in-person events.

- You reduce the number of clicks between a solver and the puzzle. Click link, see puzzle, vs click link, click download, open download, see puzzle.

- If you have several constructors, it’s easier to force consistent fonts and styles across puzzles, by using a global CSS file. With PDFs, you have to coordinate this yourself.

- HTML pages are inherently re-downloaded whenever a solver refreshes or reopens the page. That means if you issue errata, solvers will notice your errata faster than if it’s part of a PDF they have to re-download. It’s also possible solvers will accidentally look at their old downloaded PDF, instead of the new PDF.

In total, I believe these benefits are worth the extra work required. Of course, you should use PDFs in cases where doing so is easier. There was no way A to Zecora was ever going to be in HTML format. Likewise, use PDFs when you need the strengths of that format (such as a puzzle where the precise alignment of text is important).

If you plan to have your puzzles be HTML-by-default, you’ll want to have tools that make HTML conversion easier. You can write HTML manually, it’s just incredibly tedious. I personally like Markdown, since it’s lightweight, builds directly to HTML, and you can add raw HTML to Markdown if you need to support something special.

This is off topic, but if you know how, I highly, highly recommend writing scripts that automate converting puzzle data into HTML. Doing so reduces typo risk, and makes it easier to update a puzzle. For Puzzles are Magic, when constructing Recommendations, I wrote a script that took a target cluephrase, auto-generated extraction indices, and outputted the corresponding HTML. For Endgame, I did the same thing. Both those puzzles had 3 drafts, one of which was made the night before hunt, and those scripts saved a ton of time.
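Here is an illustrative sketch of that kind of script. Given a cluephrase and one answer per letter, it picks an index into each answer that lands on the right letter, then emits HTML. This is not the actual Recommendations or Endgame script. `extraction_indices` and `to_html` are hypothetical, and indices count letters only (ignoring spaces and punctuation) by assumption:

```python
from __future__ import annotations

import html
import random


def extraction_indices(cluephrase: str, answers: list[str], rng: random.Random | None = None) -> list[int]:
    """For each letter of the cluephrase, choose a 1-based index into the
    matching answer that points at that letter (letters only, spaces ignored)."""
    rng = rng if rng is not None else random.Random(0)  # fixed seed: reproducible drafts
    letters = [c for c in cluephrase.upper() if c.isalpha()]
    if len(letters) != len(answers):
        raise ValueError("need exactly one answer per cluephrase letter")
    indices = []
    for letter, answer in zip(letters, answers):
        answer_letters = [c for c in answer.upper() if c.isalpha()]
        positions = [i + 1 for i, c in enumerate(answer_letters) if c == letter]
        if not positions:
            raise ValueError(f"{answer!r} contains no {letter!r}")
        indices.append(rng.choice(positions))
    return indices


def to_html(clues: list[str], indices: list[int]) -> str:
    """Render clues with their extraction indices as an HTML ordered list."""
    items = "\n".join(
        f"  <li>{html.escape(clue)} ({idx})</li>" for clue, idx in zip(clues, indices)
    )
    return f"<ol>\n{items}\n</ol>"
```

Rerunning the script after a change to the cluephrase or the answer list regenerates every index and the HTML together, which is where the typo savings come from.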

Access Control

Teams should not have access to a puzzle before they have unlocked it. This is obvious, but what’s less obvious is that they also shouldn’t have access to any static resources that puzzle uses. Any puzzle specific images, JavaScript, CSS, PDFs, and so on should be blocked behind a check of whether the team has unlocked that puzzle yet.
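One way to enforce this is to stop serving puzzle assets as plain static files and route them through a view that checks unlocks first. Below is a sketch of just the check. It assumes a hypothetical URL layout `/puzzles/<puzzle_id>/<filename>`. In Django, the view would run this check before returning the file with `django.http.FileResponse`, and return a 404 otherwise so locked files are indistinguishable from missing ones:

```python
import re

# A plain file name such as "1.png" or "puzzle.pdf": no slashes, no "..", no leading dot.
SAFE_NAME = re.compile(r"[A-Za-z0-9_-]+(\.[A-Za-z0-9]+)?")


def may_serve_asset(puzzle_id: str, filename: str, unlocked: set) -> bool:
    """Gate for a hypothetical route /puzzles/<puzzle_id>/<filename>.

    Deny unless the team has unlocked the puzzle and the file name is plain,
    so requests like "../other_puzzle/1.png" can't escape the puzzle's folder.
    """
    if puzzle_id not in unlocked:
        return False
    return SAFE_NAME.fullmatch(filename) is not None
```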

Assume that solvers will find any file that isn’t gated behind one of these unlock checks, and check whether doing so would break anything about your hunt.

The 100% foolproof way to avoid puzzle leaks is to only put the puzzle into the website right before it would unlock. This works for Australian-style hunts, where puzzles are unlocked on a fixed schedule.

However, for testsolving, you’ll want to have testsolvers use your hunt website, to test both the puzzles and your site. To do so, the puzzles need to be in your website, with access control to restrict them to playtesters. And if you’re going to support that, then you’re 90% of the way there to access control based on team unlock progress, and you might as well go all the way.

File Metadata

There are many ways your puzzle can have side channels that leak information you may not want to leak. For example, the puzzle Wanted: Gangs of Six from MIT Mystery Hunt 2020 involved identifying gangs of six characters from several series, extracting cluephrases using numbers written in fonts matching each series. The solution mentions that testsolvers were able to extract the font names from the PDF properties, and many of the font names were named after the series they were taken from. The final puzzle uses remastered fonts with less spoiler-y names. As another example, for A Noteworthy Puzzle from the 2019 MUMS Puzzle Hunt, the original sheet music and color names could be retrieved from inspecting the PDF file.

Whatever format you use, double check if that format comes with metadata, and if it does, inspect it to see if it leaks anything you don’t want to leak. If you make a music ID puzzle, and forget to clear the artist and album metadata, then, well, that’s on you.

Note that “side channel” implies you don’t want puzzle info to leak this way. Sometimes, leaking info this way is okay. In Tree Ring Circus from MIT Mystery Hunt 2019, one circle has a labeled size, and you need the size of the other circles to solve the puzzle. The circles were drawn in SVG, and to aid solving, the distances used in the SVG file exactly matched the distances you wanted.

Filenames

Make your filenames completely non-descriptive. If you have 10 images, name them “1.png”, “2.png”, “3.png”, and so on, in order of the webpage. Or better yet, give each of them randomly generated names. Anthropology from Puzzles are Magic used random names, since solvers needed to pair emoji pictures to numbers, and I didn’t want the emoji in “1.png” to be confused for the number 1.
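Renaming can be scripted. Here is a sketch that maps each original file to a random hex name while keeping its extension. `randomize_names` is a hypothetical helper, and the mapping it returns should stay out of anything served to solvers:

```python
from __future__ import annotations

import secrets
from pathlib import Path


def randomize_names(paths: list[str]) -> dict[str, str]:
    """Map each original file to a random 16-hex-digit name that keeps only its
    (lowercased) extension, e.g. 'twilight.png' -> '3f9a1c0e7b2d4a65.png'."""
    mapping: dict[str, str] = {}
    used: set[str] = set()
    for original in paths:
        suffix = Path(original).suffix.lower()
        name = secrets.token_hex(8) + suffix
        while name in used:  # collisions are astronomically unlikely, but cheap to rule out
            name = secrets.token_hex(8) + suffix
        used.add(name)
        mapping[original] = name
    return mapping
```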

If you have an interactive puzzle where hidden content only appears after the solvers reach a midway point, hide that content appropriately. If you have a secret 5th image, and you name your first four images “1.png” to “4.png”, you don’t want solvers to shortcut the puzzle by trying to load “5.png”.

Again, like the file metadata mentioned above, sometimes leaking info in filenames is okay. An example puzzle that does this is p1ctures from MIT Mystery Hunt 2015. Yes, almost anything can be part of a puzzle if you try hard enough.

Interactive Puzzles

Interactive puzzles are usually more work to make, but are also often the most memorable or popular puzzles. (My theory is that interactive puzzles naturally let you hide complex rules behind a simple surface, and the emergent complexity this creates is the entire reason that people like solving puzzles.)

The rule-of-thumb in online video games is to never, ever trust the client. Puzzlehunts are the same. In MIT Mystery Hunt 2020, teammate solved 2 or 3 interactive puzzles by inspecting the client-side JavaScript, finding a list of encrypted strings, searching for the function that decrypted them, and running the decryption until we found strings that looked like the answer.

Assume that teams will decode any local JavaScript, even if you minify and obfuscate it. The only guaranteed way to close these shortcuts is to move all key puzzle functionality to server-side code. Server-side confirmation has higher latency than client-side confirmation, so if you’re worried about responsiveness, only confirm the most important parts of the puzzle on the server. The interactive puzzles for Puzzles are Magic were essentially turn-based, so we didn’t care about latency and had all the logic be server-side.

For example, in Applejack’s Game, all the JavaScript does is take the entered message, send it to the server, and render the server’s response. That’s it. There’s nothing useful to retrieve from the client-side JavaScript, so there’s no need to hide it.

If possible, try to avoid repeating logic across the client and server. The GPH 2019 AMA mentions that one puzzle (Peaches) had a bug where client-side logic didn’t match the server-side verification, which caused some correct solutions to get marked as incorrect.

As mentioned in that AMA, if your server side code fails, make sure to give teams an obvious error, perhaps one that tells them to contact you so that you learn about the problem.
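Putting both ideas together, the server owns all the puzzle logic and turns any crash into an unmistakable error. Here is a sketch. `handle_move`, the `puzzle_logic(state, message)` callback, and the reply format are all hypothetical:

```python
from __future__ import annotations

import logging
from typing import Any, Callable

logger = logging.getLogger("hunt.interactive")

ERROR_MESSAGE = (
    "Something went wrong on our end, and this is NOT a wrong answer. "
    "Please email the organizers so we can fix it!"
)


def handle_move(
    state: Any,
    message: str,
    puzzle_logic: Callable[[Any, str], tuple[str, Any]],
) -> tuple[Any, dict]:
    """Run one turn of an interactive puzzle entirely on the server.

    The client only sends `message` and renders the returned reply, so there
    is nothing useful to dig out of the client-side JavaScript.
    """
    try:
        reply, new_state = puzzle_logic(state, message)
    except Exception:
        # Keep the traceback for organizers, and show solvers an obvious error
        # instead of something that could pass for normal puzzle feedback.
        logger.exception("interactive puzzle crashed on message %r", message)
        return state, {"ok": False, "message": ERROR_MESSAGE}
    return new_state, {"ok": True, "message": reply}
```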

Accessibility

You can generally assume that most solvers will be on either Windows or Mac OS X, they will normally use one of Chrome, Safari, or Firefox, and they’ll be solving from a laptop-sized or monitor-sized screen.

The key word here is most. Accessibility issues will always affect a minority of your users, but there are a lot of minorities. If you want more people to solve your puzzlehunt, you’ll need to be inclusive when possible.

Some of your solvers will be color blind. Some of your solvers will be legally blind. Some of your solvers will heavily prefer printing puzzles versus solving them online. Of those solvers, not all of them will have access to color printers.

You may not be able to please all of these people. If your puzzle is based on image identification, the legally blind solver is not going to be able to solve it. You can’t even provide alt text that describes the image, because non-blind solvers will use the alt text as a side channel. But try to do the best you can. As mentioned in the 2019 MIT Mystery Hunt AMA, “Keep in mind what the puzzle actually needs in terms of skills and abilities, and what you’re presuming the solver/s will have. Wherever there’s a mismatch, try to make the puzzle suit the smallest set of abilities without stomping on data the puzzle needs.”

For color blind solvers, try to avoid colors that are too visually similar to one another. If you need to use a large number of colors, consider providing a color blind alternative.

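For people who prefer printing puzzles, make sure that your puzzles print well. Browsers don’t print a page exactly the way it looks on screen. By default they usually drop background colors and images, and layouts built for a wide monitor often break across pages. So unless you plan for it, your puzzles probably print poorly. You’ll need to define print-specific CSS to get your puzzles to print correctly.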

Some solvers will be solving from smartphones or tablets, rather than desktops or laptops. It’s okay if your site is worse from mobile, but make sure your site isn’t completely broken on mobile. Even solvers with laptops will appreciate being able to solve puzzles on the go.

Watch out for slow Internet connections or computers. In Number Hunting from Puzzles are Magic, we used MathJax to render the math equations for flavor reasons, but we found this sometimes took a while, and rendered poorly on mobile because MathJax equations don’t support line wrap. So, we included a plaintext version as well.

The one big accessibility failure from Puzzles are Magic I’m still annoyed about is The Key is Going Slow and Steady. One lesson we learned during hunt construction was that puzzle presentation really matters. If a puzzle looks big, it’s intimidating, and solvers are less likely to start it. So, if you can find a way to make a puzzle look smaller, you should. Recommendations testsolved a lot better when I made all the images 4x smaller, even though this changed nothing about its difficulty.

For The Key is Going Slow and Steady, we used Prezi to bundle the flowchart into a smaller space. The problem this introduced from an accessibility standpoint is that Prezis load really, really slowly, and Prezis don’t work on mobile. At all. We were aware of these issues, but didn’t have time to build a lightweight alternative, so it had to go as-is.

Hardware and Load Testing

To run your hunt, you’re going to need a server. If you work at a university, check if your university provides free computing services. If you don’t have a server on hand, or you don’t want to trust your personal Internet, you’ll probably use a cloud computing service.

For cloud services, you can either go for a raw machine, or you can use app creation services like Google App Engine or AWS Elastic Beanstalk. App services will give you less flexibility, will require writing code to fit their API, and are more expensive. However, they’ll handle more things for you, like auto-scaling your site when you get more users.

Puzzles are Magic was hosted from a raw machine we purchased through Google Compute Engine. We did this because it required the fewest changes from the Puzzlehunt CMU code we started from, and we had some GCE free trial credit to play with. Unfortunately, our free trial expired about 6 weeks before the hunt started. We spent about $120 in total. Of that total, $80 was spent in the 4 weeks around hunt, and $40 was spent in the remaining time. We’re currently spending about $16/month to run the hunt in maintenance mode.

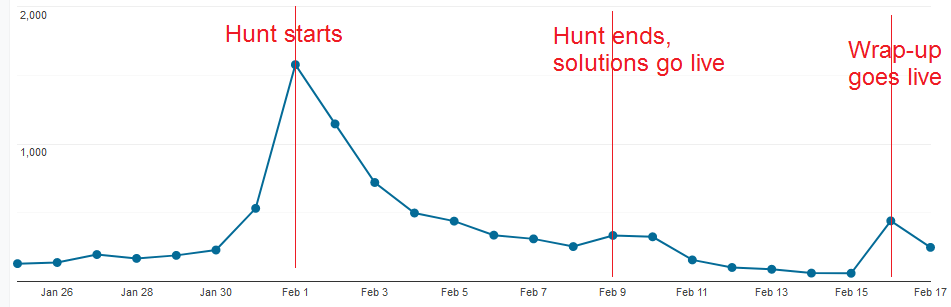

Here’s a chart for number of users per day, with annotations for key dates.

User counts started increasing rapidly the week before hunt, peaked the first weekend, then decayed over time. Make sure you get a large enough machine a few weeks before your hunt starts.

We load tested our website using Locust. Our target was supporting 750 active users using the site at the same time. We picked that number based on registration counts and our estimates for how many teams would start working the moment puzzles released.

Make sure you test from a fast Internet connection, or split your load test across a few networks! We had trouble getting more than 250 active users to work, and eventually debugged it to load testing from a slow connection that had trouble pretending to be 250 users. The site was fine the entire time.

If your server is too busy to respond to solvers, it’s also going to be too busy to respond to your remote login attempts to fix it. Err on the side of too large. The money is worth your mental health.

More Exact Hardware Details

This part is only interesting if you care about webserver configuration.

We used an `n1-standard-4` machine. Based on GCE specs, this corresponds to 4 hardware hyper-threads on a Skylake-based Intel Xeon Processor. The machine had 15 GB of RAM, and used the following Apache config (added to `/etc/apache2/apache2.conf`).

```apache
<IfModule mpm_event_module>
    StartServers             10
    ServerLimit              110
    MinSpareThreads          25
    MaxSpareThreads          75
    MaxRequestWorkers        1000
    ThreadsPerChild          5
    AsyncRequestWorkerFactor 2
</IfModule>
```

`StartServers` is the number of processes to start. It should satisfy `MinSpareThreads < StartServers * ThreadsPerChild < MaxSpareThreads`.

`ServerLimit` is the maximum number of processes to start. It should satisfy `ServerLimit * ThreadsPerChild > MaxRequestWorkers`. (This isn’t true for our config because of a config error.)

`MinSpareThreads` and `MaxSpareThreads` are the number of spare threads to prepare for new incoming connections. We didn’t change these.

`MaxRequestWorkers` is the maximum number of threads (requests) you want your server to support. Any requests over this limit will be queued until existing requests are fulfilled.

`ThreadsPerChild` is the number of threads to spawn per process. Most configurations online use `ThreadsPerChild 25`. We found using more processes and fewer threads per process made our site load a bit faster, at the cost of more CPU and RAM usage. Using `ThreadsPerChild 25` with 5x smaller `StartServers` and `ServerLimit` should be good enough for you.

We’re not sure what `AsyncRequestWorkerFactor` is, but all the guides we found online recommended keeping it at the default of 2.
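The two rules of thumb above are easy to get wrong by hand, as our config shows, so a quick check is worth scripting. Here is a sketch that encodes the inequalities exactly as stated above. `check_mpm_event` is a hypothetical helper, not an Apache tool:

```python
from __future__ import annotations


def check_mpm_event(cfg: dict[str, int]) -> list[str]:
    """Check an mpm_event config against the two rules of thumb above.

    Returns human-readable problems; an empty list means both rules hold.
    """
    problems = []
    start_threads = cfg["StartServers"] * cfg["ThreadsPerChild"]
    if not cfg["MinSpareThreads"] < start_threads < cfg["MaxSpareThreads"]:
        problems.append(
            f"StartServers * ThreadsPerChild = {start_threads}, "
            f"not between MinSpareThreads and MaxSpareThreads"
        )
    capacity = cfg["ServerLimit"] * cfg["ThreadsPerChild"]
    if not capacity > cfg["MaxRequestWorkers"]:
        problems.append(
            f"ServerLimit * ThreadsPerChild = {capacity}, "
            f"not above MaxRequestWorkers = {cfg['MaxRequestWorkers']}"
        )
    return problems


# The Puzzles are Magic values from the config above.
ours = {
    "StartServers": 10, "ServerLimit": 110, "MinSpareThreads": 25,
    "MaxSpareThreads": 75, "MaxRequestWorkers": 1000, "ThreadsPerChild": 5,
}
```

On our config, it flags only the second rule: 110 × 5 = 550, well short of `MaxRequestWorkers 1000`. The suggested `ThreadsPerChild 25` variant (`StartServers 2`, `ServerLimit 22`) also gives 22 × 25 = 550. If you use it, adjust `ServerLimit` or `MaxRequestWorkers` to match.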

Caching

Adding caching to your website can heavily speed up its performance. However, it also opens your site to caching errors, which can be extra dangerous when you’re trying to add errata to a puzzle.

We tried to avoid caching and were willing to pay the performance cost, but part of our Apache config cached static resources we didn’t want cached. We never debugged why, but it did mean that when we added errata to a PDF, it took 5 minutes for the errata to propagate to solvers. Luckily, the errata was minor.
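A common workaround, which we did not use, is to put a hash of each file’s contents into its URL. An updated PDF then gets a brand-new URL that no cache has seen. Django’s `ManifestStaticFilesStorage` does something similar for files collected with `collectstatic`, by renaming them. Here is a hand-rolled sketch for puzzle files. `versioned_url` is a hypothetical helper, and the pages that link to the file must themselves be regenerated (not cached) for the new URL to reach solvers:

```python
import hashlib
from pathlib import Path


def versioned_url(url: str, file_path: Path) -> str:
    """Append a short hash of the file's bytes as a query string.

    Editing the file (say, to add errata) changes the hash, so browsers and
    caches see a URL they have never fetched and download the new copy.
    """
    digest = hashlib.sha256(file_path.read_bytes()).hexdigest()[:12]
    return f"{url}?v={digest}"
```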

Hiding Spoilers from Web Crawlers

Adding `<meta name="robots" content="noindex">` to the `<head>` of an HTML file prevents most search engines from indexing that page. To avoid spoiling anyone who looks through your archive later, that tag should be added to the solutions for each puzzle, as well as any other page that could spoil that puzzle’s answer.

Static Conversion

As mentioned earlier, running Puzzles are Magic in maintenance mode currently costs about $16/month. For archiving purposes, sometime after the hunt, you’ll want to convert your website into a fully static site. This brings your maintenance costs to almost zero. Amazon S3 costs just a few cents per GB of storage and data transfer, and GitHub Pages lets you host a static site for free.

Try to have a static answer checker ready to go before hunt ends. Even if all your solutions are ready the instant hunt ends, you want to give solvers the ability to check their answers spoiler-free.
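One way to build a static answer checker, and not necessarily how Puzzles are Magic did it, is to ship only salted hashes of the normalized answers and have the page hash each guess the same way. Below is a sketch of the build step, plus a Python version of the check. In the browser, the equivalent hash could come from the Web Crypto API’s `crypto.subtle.digest` with `"SHA-256"`. Hashing keeps answers out of plain sight, but short answers can still be brute-forced, so treat it as spoiler protection, not security. All function names are hypothetical:

```python
from __future__ import annotations

import hashlib
import json
import re


def normalize(raw: str) -> str:
    # Should match whatever normalization the live checker used.
    return re.sub(r"[^A-Z0-9]", "", raw.upper())


def answer_hash(puzzle_id: str, answer: str) -> str:
    # Salting with the puzzle id gives a shared answer different hashes on
    # different puzzles, so one solved puzzle doesn't confirm another.
    return hashlib.sha256(f"{puzzle_id}:{normalize(answer)}".encode("utf-8")).hexdigest()


def build_checker_data(answers: dict[str, str]) -> str:
    """JSON to embed in the static checker page: puzzle id -> answer hash."""
    return json.dumps({pid: answer_hash(pid, a) for pid, a in answers.items()}, sort_keys=True)


def check(puzzle_id: str, guess: str, data: str) -> bool:
    """Python version of what the page's JavaScript would do for each guess."""
    return json.loads(data).get(puzzle_id) == answer_hash(puzzle_id, guess)
```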

As for converting the rest of the site, well, that can take some time. Remember how I said interactive puzzles should have all their logic be server-side? Well, making them work client-side means you have to reproduce all the server-side code to run in-browser. Puzzles are Magic still hasn’t been converted yet, and it’s been over a month since the hunt finished. I’m the one who wrote the code for all the interactive puzzles, so it’s on me to fix it.

If you’d rather not deal with these headaches, you can pay the server costs forever and leave the site as-is. Personally, I’d rather not pay $16/month for the rest of my life.

For non-interactive portions, the command mentioned here worked for me, but you should double check the links yourself, especially if your paths include Unicode characters, or are case sensitive.

March 29, 2020 update: Just finished static conversion of Puzzles are Magic, hosting it through GitHub Pages. I didn’t have Unicode issues, but did hit other problems.

- Some non-HTML files were not getting served with the correct file type in their header. This still worked for solvers, but files like `1.png` were crawled and saved as `1.png.html`. This broke some of the dynamic puzzles, and I had to rename all those files and fix the generated links to each, which I did with some small shell scripts.

- Files loaded through dynamic JavaScript were not crawled by `wget`, and had to be added manually.

- Files that relied on absolute paths broke in local testing, and needed to be changed to use relative paths.

- In the dynamic version of the site, trailing slashes did not matter to the URL. Going to `/hunt/current/` was equivalent to visiting `/hunt/current`. In the static version, this was different. The path `/hunt/current/` is shorthand for “load hunt/current/index.html”, and `/hunt/current` is short for “load hunt/current.html”. Unfortunately, the links indexed by search engines all used trailing slashes. I was using GitHub Pages, which uses Jekyll, so I used jekyll-redirect-from to generate redirects from trailing slashes to the intended page. One gotcha was that `redirect_from: /hunt/current/` had to be added at the very start of the file, with no whitespace allowed at the top, or else it would be treated as part of the HTML file.
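The renaming fix can also be done in Python. The sketch below stands in for the shell scripts mentioned above: it renames files like `1.png.html` back to `1.png` and updates references in the remaining HTML pages. `fix_mangled_assets` and `ASSET_SUFFIXES` are hypothetical. If your mirroring command used `wget`’s `--adjust-extension` (`-E`) option, that option appends `.html` to anything served as HTML, which is one likely source of these names:

```python
from __future__ import annotations

from pathlib import Path

# Extensions that should never end up with an extra ".html" (adjust to your site).
ASSET_SUFFIXES = {".png", ".jpg", ".gif", ".svg", ".pdf", ".js", ".css", ".json"}


def fix_mangled_assets(root: Path) -> dict[str, str]:
    """Rename files like '1.png.html' back to '1.png' under `root`, then rewrite
    references to them in every remaining .html file. Returns old -> new names."""
    renamed: dict[str, str] = {}
    # Materialize the list first, since we rename files while iterating.
    for path in sorted(root.rglob("*.html")):
        inner_suffix = Path(path.stem).suffix.lower()  # '1.png.html' -> '.png'
        if inner_suffix in ASSET_SUFFIXES:
            target = path.with_name(path.stem)
            path.rename(target)
            renamed[path.name] = target.name
    for page in root.rglob("*.html"):
        text = page.read_text(encoding="utf-8")
        new_text = text
        for old, new in renamed.items():
            new_text = new_text.replace(old, new)
        if new_text != text:
            page.write_text(new_text, encoding="utf-8")
    return renamed
```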

* * *

I am probably missing some things, but those are all the big ones.

This is likely my last puzzle-related post for a while. Almost all my 2020 blog posts have been about puzzles, but I do have other interests! Stay tuned for more.


MIT Mystery Hunt 2020, Part 2

Now that My Little Pony: Puzzles are Magic has wrapped up, I have time to finish my posts about MIT Mystery Hunt 2020. And only well over a month after Hunt too!

This post is long. I decided to bundle all my remaining thoughts about Hunt into one post, to motivate me to finish it once and for all. This post will have spoilers for both MIT Mystery Hunt and Puzzles are Magic.

Before Hunt

Before MIT Mystery Hunt officially starts, people on our team like trying to identify hidden Mystery Hunt data. This year, we had two targets: the emails from Left Out, and the wedding invitations.

Every email we got from Left Out had a different signature. There was “Like Pluto, Left Out”. Another said “Like J in a Playfair Cipher, Left Out”. Then there was “Like a cake in the rain, Left Out”. Some people felt this was just Left Out’s gimmick (every signature is about something that’s left out), but maybe they were a puzzle, so we kept track of all of them.

When we got all the emails about the overnight situation, all without custom signatures, I argued this was weak evidence in favor of the emails being a puzzle. The argument went like this: for the emails to be a puzzle, Left Out would need to make sure they sent out N exact signatures for some number N. If the signatures were a puzzle, any surprising emails they needed to send (like the ones for the overnight situation) shouldn’t have custom signatures, and none of them did.

Someone else pointed out that given the seriousness of the situation, Left Out wasn’t likely to include a jokey signature, whether or not the emails were a puzzle. That convinced me the emails weren’t a puzzle.

The wedding invitations, on the other hand…

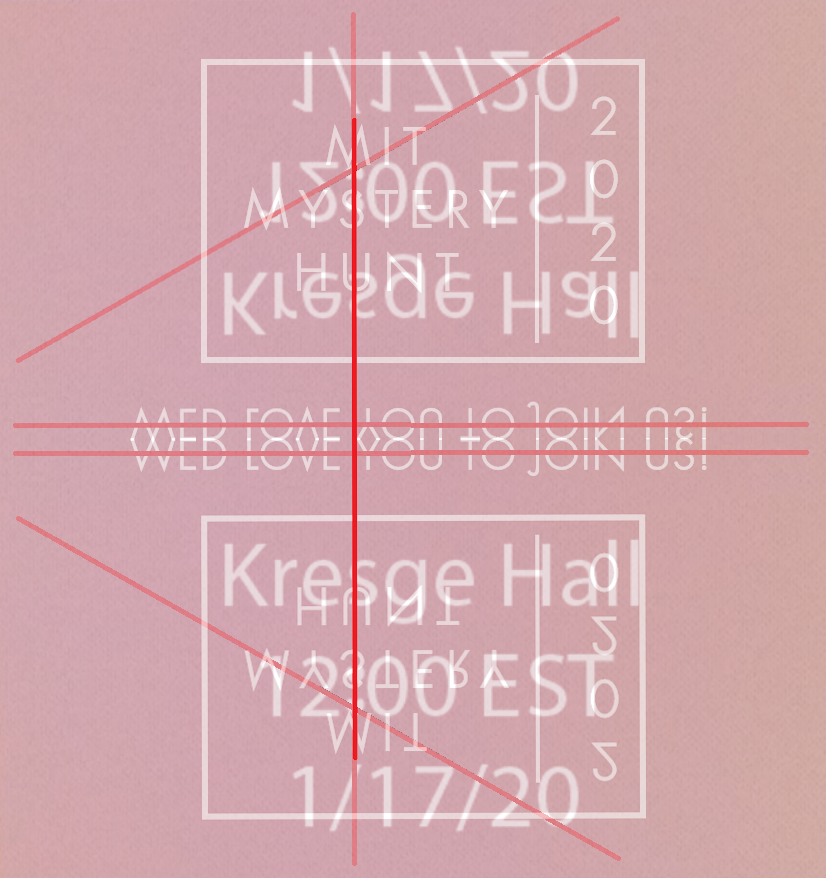

We recognized the first invite was a reference to the 2011 MIT Mystery Hunt invitation, but the second invite looked weirder. Why did the alignment look a little off center? Why was WED LOVE YOU TO JOIN US! missing an apostrophe in WE’D? Was it just “WED” for “WEDDING”, or was it something more? And so on.

Last year, our “is this a puzzle” theories were all in a channel named #innovated-shitposting (later renamed #ambiguous-shitposting). This year, it was #wed-love-u-2-shitpost. The working theory was that “join us” meant “draw connecting lines”, “to” meant “connect the 2s”, “love” meant “connect the hearts in 12:00 EST”, and “you” meant “connect the letter Us”. So, uh, yeah, that’s where all those red lines come from. We also thought it might have involved folding, so we tried that too.

As I was boarding my flight to Boston, my position was that the invite was more-likely-than-not a puzzle (say, 60% chance), but it would come with a shell that we wouldn’t see until Hunt, so until then I wasn’t going to try anything.

Meanwhile, two members of teammate had a $20 bet on whether the email signatures were a puzzle. One was adamant they were, the other was adamant they weren’t. To quote the loser, “I got a lot less confident in my bet after I read all the emails.”

The day before hunt was spent socializing, figuring out who would get power strips (me), and figuring out our Mystery Hunt Bingo for the year. One teammate refreshed until they got a board that would give a bingo if it had puzzles we wanted to see. (“I like this board, because we’ll get a bingo if there’s a puzzle about video games and a puzzle about anime.”) But, was cherry-picking a bingo board ethical? We discussed it at the social, and Rahul proclaimed “It’s okay to cheat at bingo”. Cherry-picked bingo it is.

We did get the bingo line that used video games and anime, although it was a close call. We needed an anime that came out in the past 2 years, and Wanted: Gangs of Six came through with JoJo’s Bizarre Adventure: Golden Wind.

A Tangent About Guessing

In the minutes before Hunt, we learned there would be an automatic checker this year. That’s as blunt a segue as any to talk about guess rates.

As has been said many times elsewhere, teammate is an outlier on submitting tons of guesses during Mystery Hunt. Speaking personally (and definitely not for others on the team), here’s why I tend to guess a lot during Hunt.

To me, good puzzle solvers are distinguished by their ability to correctly generalize from limited data, solve around mistakes they’ve made in data collection, and rapidly form and discard hypotheses about how a puzzle works. More concretely, if you can reliably solve puzzles from 80% of the clues, you might expect each puzzle to take 80% of the time. In practice, it works out to less than 80%, because the last 20% of clues are the hardest ones. The same principle holds for metapuzzles. If you can solve a meta from 60% of the feeders, then you get to skip the hardest 40% of that round, so you save more like 50% or 60% of the time you’d spend on a 100% forward solve.
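To make the “less than 80%” point concrete, here is a toy model in Python. It assumes, purely for illustration, that the k-th easiest clue takes k units of time; real clue difficulty is messier, and `time_fraction` is a hypothetical helper.

```python
def time_fraction(n_clues: int, solved_fraction: float) -> float:
    """Share of total solve time spent on the easiest `solved_fraction` of clues.

    Hypothetical model: sort clues from easiest to hardest and assume the k-th
    clue takes k units of time, so harder clues cost progressively more.
    """
    solved = round(n_clues * solved_fraction)
    time_solved = solved * (solved + 1) / 2   # 1 + 2 + ... + solved
    time_total = n_clues * (n_clues + 1) / 2  # 1 + 2 + ... + n_clues
    return time_solved / time_total


print(f"{time_fraction(100, 0.8):.2f}")  # 0.64  (80% of the clues)
print(f"{time_fraction(100, 0.6):.2f}")  # 0.36  (60% of the feeders)
```

Under this linear toy model, the easiest 80% of clues cost about 64% of the total time, and the easiest 60% of feeders about 36%. The exact numbers depend entirely on the assumed difficulty curve; the point is only that skipping the hardest items saves more than their share of the count.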

Because of this, if you want to solve hunts faster, you should shortcut as much as you can, and that includes making low-confidence guesses that rely on a few of your assumptions being true. The answer checker gives a binary signal: either “all your generalizations were correct” or “at least one of your generalizations was wrong”. It’s a very low-bandwidth signal, but it’s still a signal. So if the hunt lets you be more aggressive on guesses, I’ll naturally submit a lot of aspirational guesses. It’s nothing personal against the puzzle author - their puzzle is one of many puzzles within the cohesive whole that makes up MIT Mystery Hunt. I’m not going to abuse OneLook to solve a cryptic crossword by itself, but I may abuse OneLook to solve a cryptic crossword that’s part of a larger hunt, once I’ve gotten through all the clues that look fun.

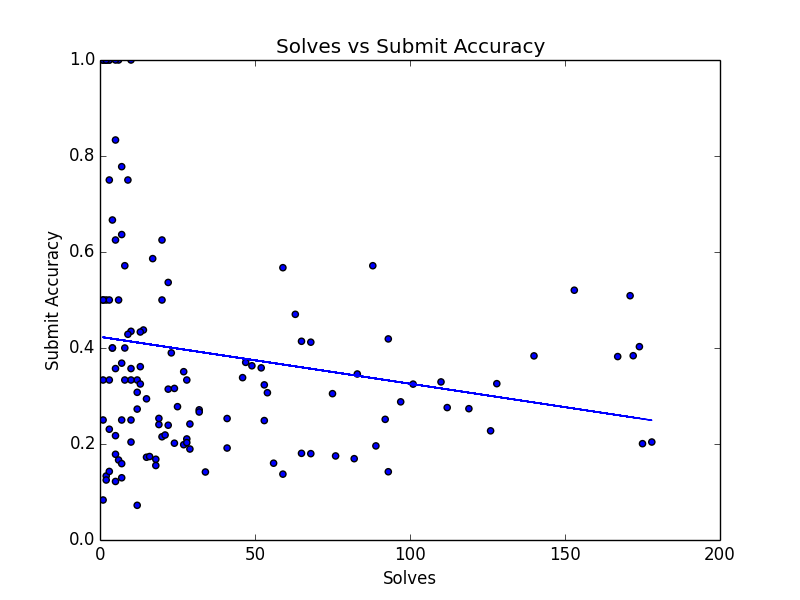

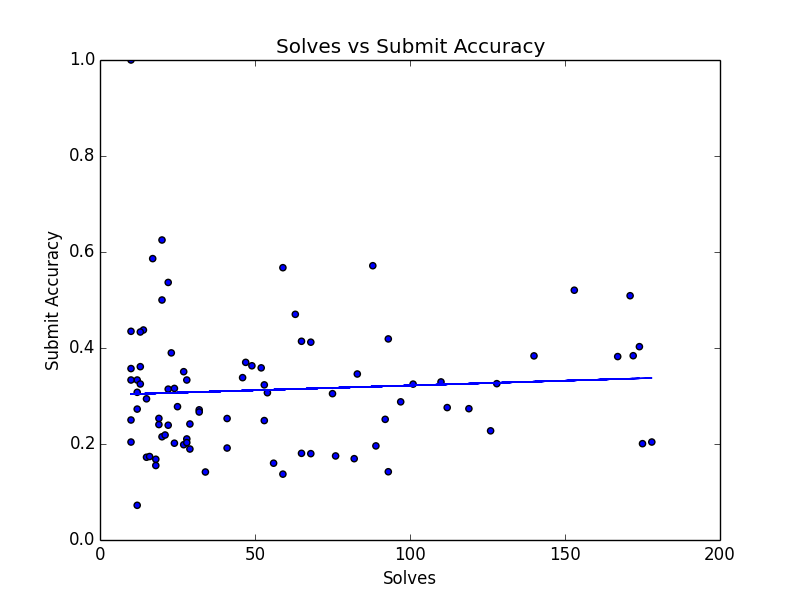

Did this pay off? Here’s a chart of puzzles solved versus guess accuracy for each team.

The trend line of this chart definitely suggests that teams with lower guess accuracy solved more puzzles. But hang on a second: there are a bunch of teams with a 100% success rate that only solved a few puzzles. Here’s the same plot with all teams that solved fewer than 10 puzzles removed.

Now, the downward trend line is gone.
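The two plots boil down to fitting a line, then refitting after a filter. Here is a minimal Python sketch of that analysis, assuming each team is reduced to a hypothetical (accuracy, solved) pair. It is not the code behind the charts above, and it contains no real team data.

```python
from typing import List, Tuple

# Hypothetical record layout: (guess accuracy between 0 and 1, puzzles solved).
Team = Tuple[float, int]


def trend_slope(teams: List[Team]) -> float:
    """Least-squares slope of puzzles solved against guess accuracy.
    A negative slope means lower-accuracy teams tended to solve more."""
    n = len(teams)
    mean_acc = sum(acc for acc, _ in teams) / n
    mean_solved = sum(solved for _, solved in teams) / n
    cov = sum((acc - mean_acc) * (solved - mean_solved) for acc, solved in teams)
    var = sum((acc - mean_acc) ** 2 for acc, _ in teams)
    if var == 0:
        raise ValueError("need at least two distinct accuracy values")
    return cov / var


def drop_small_teams(teams: List[Team], min_solved: int = 10) -> List[Team]:
    """Remove teams that solved fewer than `min_solved` puzzles."""
    return [(acc, solved) for acc, solved in teams if solved >= min_solved]
```

Comparing `trend_slope(teams)` with `trend_slope(drop_small_teams(teams))` is the whole experiment: a cluster of perfect-accuracy, low-solve teams can drag the first slope negative on its own.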

My feeling is that yes, teams that guessed more did do better this Hunt. However, the size of that effect is small, and the number of puzzles solved is still dominated by general solver ability and luck in how fast a team figures out the metapuzzles.

One other tangent is that I feel a lot of the discussion about guessing has focused too much on backsolves. I’m worried people will think high guess rates come mostly from backsolves. This isn’t true - crazy guesses come from both forward solves and backward solves.

During Puzzles are Magic, I got curious about forward solve vs backsolve rates. I went through the guess log, made a list of all answer guesses that looked like backsolves, and computed forward guesses vs backward guesses for each puzzle.

The results are linked on the full stats page. Excluding meta puzzles and endgame puzzles (puzzles that couldn’t be backsolved), across the entire hunt there were 4.38 guesses per forward solve, and 5.36 guesses per backward solve. So yes, backsolves did have more guesses on average that hunt, but it wasn’t by a big margin.
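The bookkeeping behind those two numbers is simple enough to sketch. The Python below assumes a hypothetical guess log in which each guess has already been hand-labeled as a backsolve or not, and metas and endgame puzzles have already been filtered out. It pools counts over the whole hunt; grouping by the `puzzle` field would give per-puzzle numbers instead. It illustrates the calculation rather than reproducing the script behind the stats page.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class Guess:
    puzzle: str
    correct: bool
    backsolve: bool  # hand-labeled: did this guess look like a backsolve?


def guesses_per_solve(log: Iterable[Guess]) -> Tuple[float, float]:
    """Return (guesses per forward solve, guesses per backsolve), pooled over the hunt.

    A solve counts as a backsolve when the correct guess itself was labeled one.
    """
    counts = {False: [0, 0], True: [0, 0]}  # backsolve flag -> [guesses, solves]
    for guess in log:
        bucket = counts[guess.backsolve]
        bucket[0] += 1
        if guess.correct:
            bucket[1] += 1

    def ratio(guesses: int, solves: int) -> float:
        return guesses / solves if solves else float("nan")

    return ratio(*counts[False]), ratio(*counts[True])
```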

Admittedly, because Puzzles are Magic confirmed partials, and had very minimal rate limiting, we encouraged teams to submit more guesses than they would in a Mystery Hunt.

I don’t think Mystery Hunt should confirm partials, and I do think you want a strong rate limit if you’re doing automatic answer checking. MIT Mystery Hunt shouldn’t need the guardrails that partials give you. We added those guardrails to Puzzles are Magic just because we wanted an easier hunt.

Puzzle Stories

These stories are told in roughly chronological order, but I’ve grouped puzzles I worked on by round. During the Hunt itself, I jumped between rounds much more than mentioned here, so some stories are slightly out of order.

Grand Castle and Storybook Forest

I looked at a few puzzles at the beginning, but the one I started first was Goldilocks. I filled in a few clues, then moved to setting up indexing when I failed to get any more clues.

The next one I worked on was The Trebuchet, and here it was a similar story. By the time I got to the puzzle, they had Boggled a few words (the voice actors from Bolt), and after we realized the ammunition types were all puns, the rest of the walls fell pretty fast. I identified a few more words (wtf SHAQ LIFE is a show??), then constructed the final wall. We expected the blocks to fall into place automatically, instead of acting like a dropquote. I noted we were a few swaps from GEORGE BAILEY, and someone solved it from FOE OF GEORGE BAILEY.

With that puzzle done, I jumped back to Storybook Forest. I got excited by Hackin’ the Beanstalk, immediately recognizing the tortoise-and-hare algorithm when typing random letters. I opened the sheet for it…and every single algorithm had already been IDed. Our team has too many CS majors. We had the ordering, and were a bit stuck on extraction, until someone threw a regex at it and saw SOURCE FILES was the first option. It was right.
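For readers who haven’t met it: the tortoise-and-hare algorithm is Floyd’s cycle-detection method for an iterated function. The Python sketch below is the textbook version, not anything taken from the puzzle itself, and `floyd_cycle` is a name chosen for illustration.

```python
from typing import Callable, Tuple, TypeVar

T = TypeVar("T")


def floyd_cycle(step: Callable[[T], T], start: T) -> Tuple[int, int]:
    """Floyd's tortoise-and-hare cycle detection on the sequence
    start, step(start), step(step(start)), ...

    Returns (mu, lam): the index where the cycle begins and the cycle length.
    Assumes the sequence eventually repeats (e.g. a finite state space).
    """
    # Phase 1: the hare moves two steps per tortoise step until they meet in the cycle.
    tortoise, hare = step(start), step(step(start))
    while tortoise != hare:
        tortoise, hare = step(tortoise), step(step(hare))

    # Phase 2: restart the tortoise; moving both one step at a time, they meet at the cycle start.
    mu, tortoise = 0, start
    while tortoise != hare:
        tortoise, hare = step(tortoise), step(hare)
        mu += 1

    # Phase 3: walk the hare around the cycle once to measure its length.
    lam, hare = 1, step(tortoise)
    while tortoise != hare:
        hare = step(hare)
        lam += 1
    return mu, lam


# 0 -> 1 -> 2 -> 3 -> 4 -> 2 -> ...: the cycle starts at index 2 and has length 3.
links = {0: 1, 1: 2, 2: 3, 3: 4, 4: 2}
print(floyd_cycle(lambda x: links[x], 0))  # (2, 3)
```

The appeal is that it finds the cycle using constant memory: only two pointers, no record of visited states.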

I looked at Toddler Tilt, which no one had touched yet. However, I had trouble getting the toddlers to tilt in the right order. I noticed the people who joined me were getting it much faster than I was, and I decided my time would be better spent on the meta.

For the Storybook Forest meta, we had some tic-tac-toe theories. We had a lot of 9 letter answers, and the puzzle art was arranged in a 3 x 3 grid.

(9 puzzles, 3 x 3 grid, three animals in a row in the meta’s art. It must mean something!)

I tried extracting the same way the Dolphin meta worked from MIT Mystery Hunt 2015. This sort of worked, but some answers extracted 2 letters instead of one, and there weren’t great ways to use the 11 letter answers. I started looking for tic-tac-toe variants that might use 11 in an interesting way, and in the middle of doing so, someone who didn’t believe any of our tic-tac-toe work solved the meta.

With that finished, I went back to The Grand Castle meta. We had 4 answers in that round, and after some false starts based on “stately” and “capital”, someone discovered the Texas panhandle. I immediately noted that the panhandle had 26 counties, but A-to-Z conversion of our answers gave L, M, N, and O (four of the letters in what turned out to be the final answer, OILMEN), which made us think it was an ordering. Each answer in this round was a two-word phrase, and reading the word that wasn’t the county gave “MISTER RACHEL UNCERTAIN SIX”. I proposed that the 2nd words were going to form a cryptic whose answer was six letters. Someone noticed that “Rachel uncertain” might mean anagram RACHEL, giving CHARLE, which could complete to a famous ruler, CHARLEMAGNE. That sounded great! We tried it, and it was wrong.

The next feeder we solved was the E from OILMEN, and the second letters gave this grid.

CRISPIN MISTER RACHEL UNCERTAIN SIX

Ah, so clearly, we were going to fill in the box between CRISPIN and SIX, get two cryptics that shared SIX for the enumeration, and we’d combine them to get a 12-letter answer! We tried CHARLEMAGNES (plural), and that was wrong too. Someone found Six Flags Over Texas, which provided an ordering on the clues, and now that we had an ordering, A-Z letters looked much better, and we solved it.

Spaceopolis, Wizard’s Hollow, Balloon Vendor

With those rounds done, it was time to look at Spaceopolis. I watched our teammates sing karaoke. I did data entry and indexing for the Masked Singer a-ha, but the extraction looked bad. While we were puzzling over the sheet, someone walked by and asked for volunteers to solve Magic Railway, the konundrum we had just unlocked. Two of us agreed. I played a mean house elf master for about an hour, briefly tried chemical elements instead of classical elements, and got the answer, GRACE UNDER FIRE. Checking the status sheet, we saw Karaoke had been solved, so the two of us who left checked to see what we had missed. It turned out we had extracted the right letters, and just failed to read them properly.

We unlocked TEAMWORK TIME: Hat Venn-dor, and joined the effort for that. In general, I really liked the TEAMWORK TIME puzzles, except for the ones where you only wanted 3 or 4 people interacting with the puzzle at once. But Hat Venn-dor was well-suited to lots of manic guessing, and I liked that. After that, some of us had a good laugh at No Clue Crossword, but we didn’t get it and had to backsolve the puzzle. We thought it might have been words that appeared in a crossword clue lookup when you entered “No clue” as the clue, but that didn’t seem very good. I forget exactly when we got the Balloon Vendor meta, but it was cute.

YesterdayLand

The next puzzle I did major work on was Food Court. The main thing this puzzle taught me was that I was horribly out of practice on probability. Back in undergrad, I definitely would have solved all of the math questions without issue. After solving one of the problems, I saw we had answers for most questions, so I started transcribing the probabilities into code to compute the steady state of the Markov chain. Another teammate had found an online tool for this, but said “it’s garbage, it’s only accurate to the hundredths place”. That actually would have been good enough for the puzzle, but due to incorrect data, we weren’t getting the right steady states. Given how the puzzle worked, it seemed clear we needed to check our work. I loudly went around asking people to help us do math, and was told three times that someone was on it. I wasn’t really listening (my mistake), and on the third time, they sat me down and explained that

- They knew someone who made Nationals of MATHCOUNTS.

- They weren’t doing Hunt, so it was okay to ask them for help.

- They solved probability problems in their spare time for fun.

- They were very fast and very accurate.

About 30 minutes later, they got back to us, saying “I believe three of your answers are wrong, I got this.” We fixed them, reran the Markov chains, and saw the steady states were all exact hundredths from \(0.01\) to \(0.26\), with small errors from floating-point approximation. So, that was that.
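The steady-state step can be sketched in a few lines of Python. This is a generic power-iteration sketch, not the code from Hunt, and the two-state chain in the example is made up rather than taken from Food Court; `steady_state` is a hypothetical name.

```python
from typing import List


def steady_state(P: List[List[float]], tol: float = 1e-12, max_iter: int = 100_000) -> List[float]:
    """Stationary distribution pi of a Markov chain (pi = pi P) by power iteration.

    P[i][j] is the probability of moving from state i to state j. Assumes the
    chain is irreducible and aperiodic, so the iteration converges to a unique answer.
    """
    n = len(P)
    for i, row in enumerate(P):
        if abs(sum(row) - 1.0) > 1e-9:
            raise ValueError(f"row {i} sums to {sum(row)}, not 1")
    pi = [1.0 / n] * n  # start from the uniform distribution
    for _ in range(max_iter):
        nxt = [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]
        if max(abs(a - b) for a, b in zip(nxt, pi)) < tol:
            return nxt
        pi = nxt
    raise RuntimeError("power iteration did not converge")


pi = steady_state([[0.9, 0.1], [0.5, 0.5]])
print([round(p, 2) for p in pi])  # [0.83, 0.17]
```

The exact answer here is 5/6 and 1/6; the raw floats differ from those by tiny amounts, which is the same kind of floating-point noise that showed up around the exact hundredths.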

A Digression on All-or-Nothing Extraction Mechanisms

One big lesson I learned from making Puzzles are Magic is that you always want to check how robust your puzzle design is to errors. Solvers will always, always make errors in solving, even if your clues are 100% unambiguous. So, if possible, you want to make your puzzle robust to a few errors. For example, crosswords are naturally robust, because each letter is checked twice, once by the Across clue and once by the Down clue.

The less robust your puzzle is, the more likely it is that solvers will do 90% of the work for a puzzle without finishing, or they’ll apply the right idea and get garbage due to their mistakes. Either one feels bad. Things that make a puzzle less robust are partial cluephrases using uncommon letters, and all-or-nothing extraction mechanisms, where a single error cascades into complete junk, even with the correct extraction mechanism.

Food Court had an all-or-nothing extraction mechanism, because a change to any transition probability must influence the state probabilities everywhere else. Otherwise, Markov chain theory says the chain literally can’t have a unique steady state.

I don’t like mechanisms like this in a vacuum, but not every puzzle can be made robust without compromising its idea. The core idea of “you solve a bunch of math problems that seed a Markov chain” is compelling. So, in short, I liked this puzzle. I think you just have to accept that the all-or-nothing extraction is a consequence of its design, and move on.

Back to YesterdayLand

Now we come to Change Machine. Oh boy.

I moved to this puzzle because I was told there was a lot of mindless drudgery that needed to be done to the spreadsheet, and I was too tired to do any real solving. The puzzle was all about the Omnibus podcast, and everything was IDed, but they had been IDed independently. Now that the Omnibus connection had been found, they wanted help merging all the data by Omnibus episode.

I was still confused, and at some point I said “Okay, it’s 3 AM and I’m very tired. I need you to give me idiot-proof instructions for what you want me to do.” Which they did, so props. After everything was ordered, I suggested indexing the KJ number into the Bible verse (treating KJ as short for King James, instead of Ken Jennings). Reading in episode order gave AD AMUSEMENT COMPANY IS KEY.

We were confused. During this process, one person had been reading the Ken Jennings subreddit, and discovered a Reddit post where someone asked what the hell was up with the RIDE ENHANCERS COLLECTIVE.

We had a mini mental breakdown. Was this seeded information from Left Out? Did AD COMPANY mean Reddit? Was this actually how we were supposed to solve the puzzle? I dug into the post history of the Redditor, to see if they posted on other puzzle subreddits, but all I found were posts on r/ottawa and r/Curling, dating back several months. This was made extra confusing because another Redditor had made Ride Enhancers Collective fanart in the middle of Hunt.

Applying the left-over offset numbers to RIDE ENHANCERS COLLECTIVE was giving good letters, but we were still confused about the life choices we’d made that led us to that moment. Solving the puzzle with ENCYCLOPAEDIA BRITANNICA, we were still incredibly confused and on the verge of a bigger mental breakdown. (Remember, this is at 3 AM, when the universe is a magical place and everything is funny in a cosmic sort of way.) After a bunch of “WHAT DID WE JUST DO AND WHY DID IT WORK”, we figured out that RIDE ENHANCERS COLLECTIVE was mentioned in an ad from the Omnibus episode that came out the weekend of Mystery Hunt, and we must have skipped a bunch of steps that would have told us to listen to the Omnibus.

Reading the solution, there were three cluephrases, and we only got the last one. So thank you, Omnibus fans. Your confusion about the RIDE ENHANCERS COLLECTIVE was not in vain, and your Reddit post provided just enough search engine signal for us to shortcut the puzzle.

Big Top Carnival

With that done, I looked at the new Big Top Carnival puzzles. I didn’t do much, until someone said, “Hey, so all our answers are Kentucky Derby horses”. We piled onto the meta and got to *I*R*D*R*, which we guessed was “something RIDER”. Noticing that horse names had mostly unique enumerations, we asked if anyone was working on a puzzle in Big Top with known answer length. The group working on Weakest Carouselink said they had (4 4). Finding PINK STAR as a horse option, someone proposed PINK SLIP. The Weakest Carouselink solvers said “that seems bad”, and I pointed out it was thematic to Weakest Link (you get fired), so we called it in and it was right. That gave us *S*I*R*D*R*, and within 30 seconds we went from that to “STIRRUP?” to “STIRRUP DRAMA!” to “AHHHHHH WE SOLVED A META WITH 5 OUT OF 12 ANSWERS”.

The really good backsolvers were all asleep, but with 7 puzzles to backsolve, of course those of us who were awake were going to try. We got 3 in the next hour, got stuck on the rest, and left the backsolves for others to finish up as we went back to the YesterdayLand meta.

YesterdayLand Meta

One rather boring puzzle skill I’ve always been proud of is my diligence in filling out spreadsheets. When Jukebox first unlocked, I opened it, and carefully transcribed the given blanks, their arrangement, and the colored numbers into the meta spreadsheet. I made some notes about how I wanted the puzzle to extract, and went off to solve other puzzles.

After the Big Top Carnival flurry died down, we looked back at Jukebox. Someone got the modernized answer break-in, and I got a warm fuzzy feeling when my due diligence meant we could jump straight into filling everything out. I had DUBSTEP for modernized DISCO, which seemed okay. That changed to EDM MUSIC, which was worse, but then someone noticed we had the blanks for ELECTRONIC DANCE MUSIC, freeing up the seven-long slot for the modernized PENTHOUSE, PORNHUB. After a brief “oh no they went there”, we had enough to get to the READ UNDER COLOR NUMBERS cluephrase. We didn’t have enough feeders to get the meta, but I was getting pretty tired, and decided to get some sleep, trusting it would be done by the time I woke up.

Saturday’s Safari Adventure

After I woke up, I caught up on what had happened.

- The remaining Big Top Carnival puzzles had been backsolved.

- YesterdayLand’s meta was solved.

- We finally solved the scavenger hunt, after figuring out all answers in Creative Picture Studios were emoji.

- We had unlocked Safari Adventure.

I ended up contributing the most to Safari Adventure, which is to be expected - it was a really big round. For regular puzzles, I worked on Golden Wolf (figured out the Gashly kids a-ha), Lion (finishing one of the final NYT puzzles), and Sheep (looking up Mega Man characters, pointing out all the last words were 11 letters long, and spending an hour not indexing by game number until someone else tried it and solved the puzzle). I also worked on Snake, where we got IAMB from *A*B, guessed TROCHEES just in case, then got PACES from PACESA (we had some errors that we guessed through).

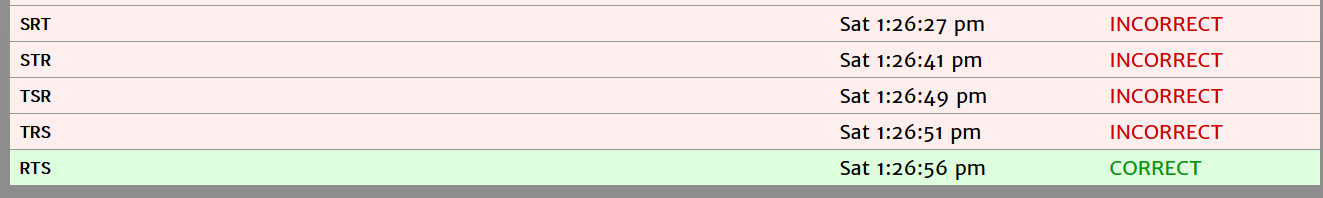

I didn’t work on Hyena, but there is a fun story for it. The group working on it was convinced one answer was the letters in SRT, in some order, but they weren’t sure what. They decided that, well there were only 6 permutations, so…

Working on the second answer, they got the letters CAM, and again, they tried 4 of the permutations, but stopped there out of fear of answer locks. During the Hunt, we thought answer lockouts applied to the entire round. They didn’t want to lock out people forward solving Safari puzzles, so they abandoned it. Much later, we backsolved Hyena, and discovered the answer was one of the 2 permutations we hadn’t tried.

In the middle of Safari Adventure, we unlocked First You Visit…. At the time, we were split across 3 rooms, and it was really funny to hear the groans cascade across the hall as each room discovered the puzzle 5 seconds after the one before. Out of Puzzle Traumatic Stress Disorder, no one worked on it, and we didn’t even backsolve it.

For Safari metas, I looked at a few. For The Cubs Scout, my incredibly minor baseball knowledge helped with how to read the extracted letters. In Sam, I figured out the stars-and-stripes idea, but didn’t get the every-other trick. For Zeus, we got Altered Beast really fast, but got stuck on how to extract. The person who eventually solved it said they watched an hour of Altered Beast gameplay, and were then inspired to map everything onto BEAST. (Note that nothing about Altered Beast’s gameplay was relevant, except for what animals to use and the ordering of those animals.)

We initially thought the Tiger would be a regular puzzle that was part of 5 metas, which we would have to backsolve entirely. Once we got to a 6th meta that needed Tiger, we realized it had to be the metameta. The Safari metas were getting solved pretty fast, and not every meta solver noted what backsolves were left. In teammate’s tech setup, spreadsheets are made automatically for each new puzzle, but this breaks down when you’re collecting data for a puzzle that doesn’t exist! A group of us ended up making a Tiger spreadsheet manually. After unlocking the metameta, we transferred our manual sheet to the generated sheet, and while doing so someone got the Tyger Tyger a-ha, which just left finishing enough Safari metas to extract.

A PennyPass Postmortem

I haven’t talked about the PennyPass system yet. In this Hunt, teams would get PennyPasses. Each pass could be spent to unlock a puzzle in any open round.

I thought this was a neat choose-your-own-unlock-adventure, without the downsides of 2018’s experiment. In 2018, teams chose which round to unlock, which was cool, but it also meant you might randomly pick the hardest round instead of the easiest one. The organizers also had to be prepared for literally every in-person interaction as soon as teams passed the intro round. It hasn’t come back yet, and I don’t think it should. It adds randomness and tons of logistical headaches for not much benefit.

On the other hand, I wouldn’t mind seeing the PennyPasses again. They’re a nice way for teams to add help in rounds where they decide they need it. Our strategy was that unless we were obviously stuck, we would optimize for the width of the Hunt - the number of unlocked, unsolved puzzles.

In each round, solving one puzzle unlocked one more puzzle in that round. So, solving within a round either kept your width constant, or decreased your width if you had no more puzzles to unlock in that round. This meant that we always used PennyPasses on the most recently unlocked round, because that round would have the fewest solves, and we’d have a +1 width increase for the longest period of time.
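The width argument can be checked with a small simulation. This Python sketch models only the rule described above (each solve unlocks one more puzzle in its round until none are left, and a PennyPass unlocks one up front); `widths` and its parameters are hypothetical, and real rounds had more structure than this.

```python
from typing import List


def widths(open_unsolved: int, locked: int, solves: int, use_pass: bool) -> List[int]:
    """Width (open, unsolved puzzles) in one round, initially and after each solve."""
    if use_pass and locked > 0:
        open_unsolved, locked = open_unsolved + 1, locked - 1
    history = [open_unsolved]
    for _ in range(solves):
        if open_unsolved == 0:
            break  # nothing left to solve in this round
        open_unsolved -= 1  # solve one open puzzle...
        if locked > 0:      # ...which unlocks another, if any remain
            open_unsolved, locked = open_unsolved + 1, locked - 1
        history.append(open_unsolved)
    return history


# A fresh round with 5 puzzles still locked: the +1 lasts through 4 solves.
print(widths(3, 5, 8, use_pass=True))   # [4, 4, 4, 4, 4, 3, 2, 1, 0]
print(widths(3, 5, 8, use_pass=False))  # [3, 3, 3, 3, 3, 3, 2, 1, 0]
# An older round with 1 puzzle locked: the +1 is gone after the next solve.
print(widths(3, 1, 3, use_pass=True))   # [4, 3, 2, 1]
print(widths(3, 1, 3, use_pass=False))  # [3, 3, 2, 1]
```

In this model, what matters is how many puzzles are still locked in the round, which is why the most recently unlocked round is the best place to spend a pass.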

We had one PennyPass left, which was expiring in an hour, and the only round it was valid for was Cascade Bay, since we had unlocked everything else. We therefore had two options.

- Use it right away on Cascade Bay.

- Wait to see if we could solve the 10 puzzles we needed to unlock Cactus Canyon, and use it there.

It seemed really unlikely we’d solve 10 puzzles in an hour, so we spent the pass on Cascade Bay. The pass disappeared, but nothing happened.

We had already guessed Cascade Bay’s gimmick: puzzles from one level would “flow down” into puzzles below. But, we didn’t realize this meant the first meta bottlenecked unlocks in the next level. Our PennyPass wasn’t going to give us a new unlock until we finished more of Cascade Bay.

After the Hunt, there was a postmortem on whether we should have been able to infer that the PennyPass would work this way, based on Cascade Bay’s art. With our backsolving speed on Safari, we actually may have finished 10 puzzles before the PennyPass expired, and we badly needed more unlocks in Cactus Canyon at the end of Hunt. By the time the extra unlock kicked in for Cascade Bay, we had already figured out all three meta mechanics, and didn’t need more help.

teammate was tied with Galactic until Cactus Canyon, where Galactic pulled away from everyone else. If we had been able to use the PennyPass on Cactus Canyon, we might have been able to keep pace. I believe we made the right call for the PennyPass in the moment, and even if we had kept pace with Galactic, I think they still would have figured out the Workshop metameta first, but it’s hard to say.

Cascade Bay

Now that we knew we needed solves in Cascade Bay, the call went out for unattached solvers to help solve puzzles in that round. We had solved Water Guns thanks to an insight from a remote solver, but that still left several puzzles to finish.

(The Pokemon we drew for Water Guns)

I saw my options were Breakfast Menu, or The Excelerator. I looked at the people solving Breakfast Menu, saw “heraldry Waffle House”, and went “This is amazing. I don’t want to do it.” The Excelerator it is!

It looked crazy, but we had the phrase FIND ELEVEN STARTING POINTS. The previous solvers had only found 10 red trigrams, not 11. On a second look, we found the 11th, and it was a decently smooth solve from there. I grinded through some trigrams, and confirmed we wanted to read horizontally along each chain rather than vertically, but it was someone else who noted the four letter words could all be treated as ranges. We had some trouble getting all the range formulas to work, because Google Sheets “helpfully” reorders ranges that are written in reverse order, but besides that it was neat.

Solving The Excelerator gave us the 4th solve in Cascade Bay, which was enough for the meta crew to get the Lazy River meta. For Coast Guard, we had variations on THROWN FOR A LOOP for ages, but were missing the P and L in the cluephrase. Our forward solves kept confirming letters in THROWN FOR A, and we didn’t solve the meta until we finally, finally got the P from a forward solve.

When The Swimming Hole unlocked, the meta crew immediately got acid/base. They got some letters and told people they wanted the answer to end in NEUTRALITY. I moved back to Safari, because I figured they’d finish in a few more forward solves, and I wanted to try breaking open more metas.

Finishing Safari Adventure

We were quite close to the end of Safari when I got back. We were just missing 3 metas: The Trainer, Anne, and Ms. Cunningham. Meanwhile, we were stuck trying to take the index of the Tyger Tyger word inside the line it appeared in, instead of converting the line number to a letter.

I took a look at Ms. Cunningham, which had no progress. After some false starts, I decided to look up who Ms. Cunningham was, which got me to TaleSpin. Coincidentally, the other 2 metas had break-ins at the same time, and everyone got really excited about finally closing out the round.

My TaleSpin break-in didn’t actually do anything, because we had already determined the Ms. Cunningham animals by process of elimination, and the given clues weren’t even the extraction order. In effect, my only contribution was convincing other solvers we had made progress when we hadn’t, but hey, I claim that still counts.

We solved the Safari Adventure metameta with 10 of the 11 metas, got a message saying we should finish all the metas as well, and solved Ms. Cunningham a few minutes later, leaving just two things: the Creative Picture Studios meta, and all of Cactus Canyon.

Creative Picture Studios Meta

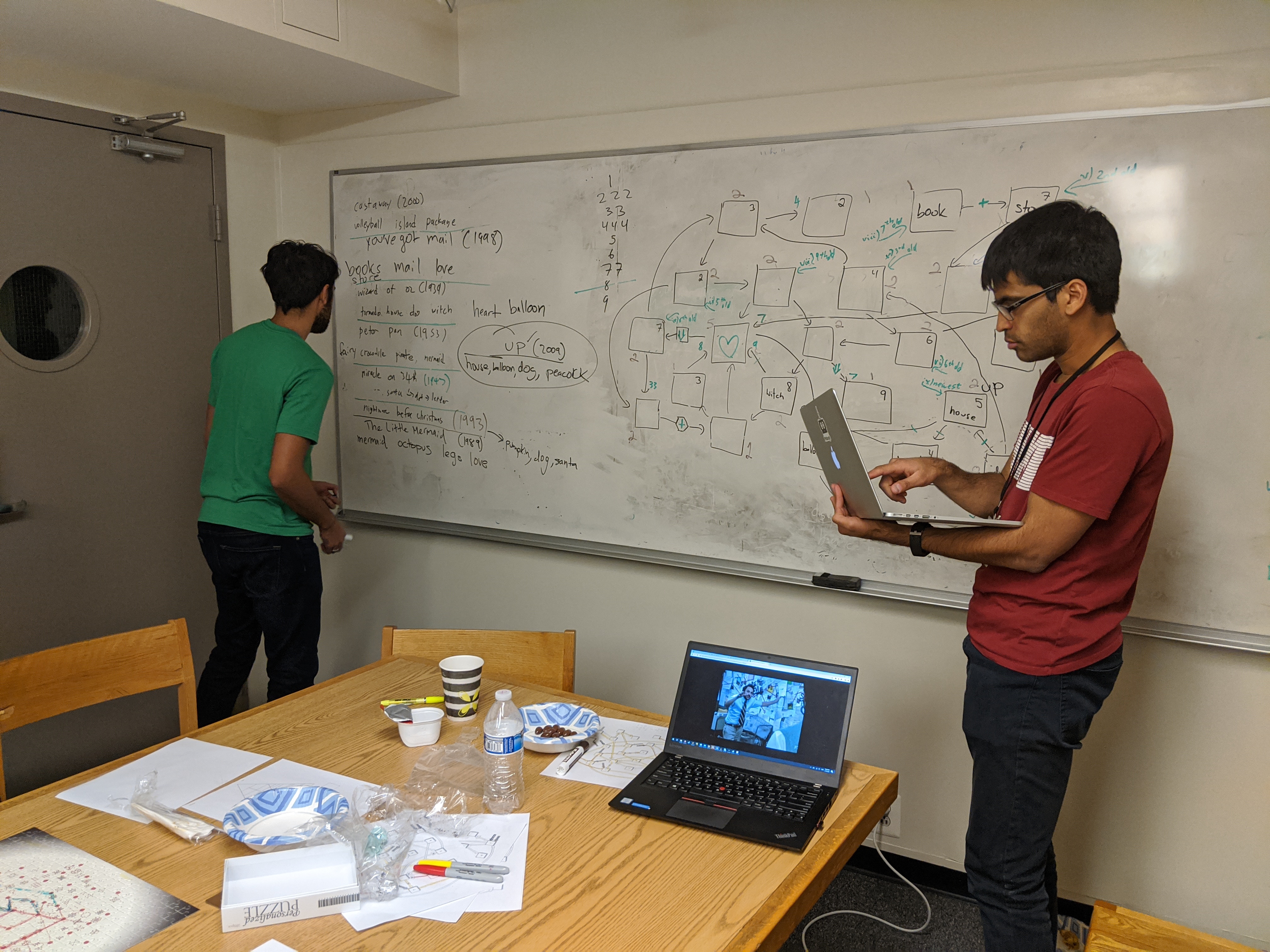

A few people had monopolized a whiteboard for several hours, brainstorming movie plots and moving emojis around.

They had made a lot of progress, including a backsolve of King’s Ransom minutes before an hours-long forward solve was about to finish.

First, there were 3 people yelling movie plots at each other. Then 4, then 5, then 6. There was a lot of shouting about letters to take, which emojis should go where, and guesses on where the missing movies would fit chronologically. A teammate turned to me and said, “There are too many people here.” The two of us left the room to leave it at 4 people yelling at each other, figuring that would be good enough. After 40 minutes (caused by two answer lockouts), they got it.

Cactus Canyon

I didn’t look at the meta in this round. I made small contributions to TEAMWORK TIME: Town Hall Meeting (left when I noticed it didn’t need many people) and Wanted: Gangs of Six (identified the ponies, Big Hero 6, some Watchmen, then left).

For Spaghetti Western, I looked up Spaghetti rules, and got stuck on how to figure out the starter words. Another solver bailed me out by getting the starter words, and with those, the minipuzzles got a lot easier.

Mine Carts was stuck for a long time on trying to pair the Left Track and Right Track carts. (Yes, we knew the counts didn’t work for this. Yes, we tried pairing them anyways.) Eventually we figured out we should form a chain between the carts in each track. Embarrassingly, I missed the My Little Pony chain. In my defense, our spreadsheet was a list of unpaired answers, so I missed that each mine cart mentioned the category we wanted. I recognized SPITFIRE was a pony (she has a fun pony music video), but missed LIGHTNING DUST. (I knew who she was, she’s a key part of two episodes, but she’s also an asshole).

We got GRACE UNDER FIRE as part of extraction for Mine Carts, and that gave me an epiphany. GRACE UNDER FIRE was the answer to Magic Railway! All the Outer Lands had a gimmick to their puzzle structure. Creative Studios used emojis, Safari had puzzles go to several metas, and Cascade had metas cascade into lower ones. This had to be the Cactus Canyon gimmick! We were going to get pairings between Cactus Canyon puzzles and other puzzles in the Hunt, and this would be relevant to the meta! All of this was wrong, but I really wanted it to be true.

As we moved to the next stuck puzzle, the people we sent to the Workshop came back with more pennies, and the news that a team had just solved Cactus Canyon’s meta.

“Wait, what? How do you know?”

Here’s what happened: as they left the Workshop, they heard the solve jingle for Cactus Canyon from the Tinkerer’s computer. Why would the Tinkerer from Left Out have solve jingles? Well, suppose a team solved a meta. Left Out would want to prepare for that team picking up their penny. What if they reused the solving jingles to let the Workshop person know what round to prepare? If that were true, then the Cactus Canyony jingle meant a team had just solved Cactus Canyon’s meta, and they were about to start endgame.

This all sounded plausible, but if it was true, there was nothing we could do about it besides solve more puzzles. (We caught up with Galactic people after, and confirmed our penny pick-up matched their Cactus Canyon solve time, so our hunch was correct.)

Remember how I said we thought answer lockouts were per-round? The 40 minute delay in solving the Creative Studios meta was weighing heavily on people’s minds, and we ended up appointing an answer guess dictator. All guesses had to go through them. This led to some tense moments - on opening Cowboy Campfire, many of us wanted to immediately backsolve with HOMECOOKED MEAL for theme reasons, but were overruled because the number of clues didn’t match answer length.

The last puzzle I worked on was Snack Bar. Most of the hard work had been done already, and we split between trying to find new flavors, checking our existing flavors, and trying different arrangements of the flavors in the grid. At one point, we had 10 different copies of the grid, with everyone making copies trying to find a good arrangement. It got complicated when two people duplicated a sheet at the same time. It turns out Google Sheets does not like this, giving you sheet names like “Conflict_of_23812739”.

One of us reported having something promising. We all moved to “Copy of Copy of Copy of Nathan’s sheet”, and solved it. The answer for Snack Bar was very surprising to the meta crew, resolved a bunch of ambiguity (still not sure how), and was the last answer they needed to solve Cactus Canyon’s meta.

Workshop

If you’re interested in the story for this, you can go read Part 1. The short version is: we got very excited, left our HQ to pick up the last penny, got stuck, got extra stuck, and retreated back to HQ as our laptop batteries started dying.

We set aside some floor space for the pennies, and had people cycling between looking at the pennies, looking at our spreadsheets, and backsolving puzzles when people got sick of doing either of those two things.

There was nothing unfair about this puzzle. It was just hard. There was a lot of data, and the phrases were tricky to find if you didn’t know what you were looking for. You needed to look at both the text and pictures to have a chance of getting the a-ha, but only our physical copy had the text and pictures co-located with each other - all our digital copies either had just the text, or just the pictures, but never both side-by-side.

We have really good solvers on teammate, for all types of puzzles, but I believe our edge is that we have a strong team-wide intuition for when we can use tools and programming to speed up solving, or to skip insights entirely through brute force. For example, although we didn’t solve High Noon Duel, our attempt went like this:

- Identified the movies and characters.

- Got stuck.

- Noted that there are 14 pairs of clues, so there are 14! different ways to pair up the cowboys to get semaphore.

- That’s a bit big for brute force (87 billion), but not impossible with some heuristics, assuming the reading order was by the given order of left cowboys.

- I put together a short script to generate random pairings, and reported that about 14% of permutations gave valid semaphore, approximately 1 in 7.

- Since some of the black cowboys repeat directions, there are far fewer than 14! distinct permutations. If we combined lazy generation of unique permutations with the 1-in-7 filter, then added some English-language heuristics on top, it should be brute-forceable.
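For the curious, here is a minimal Python sketch of the “lazy unique permutation generation plus filtering” idea from the last step. It is an illustration, not code from the Hunt: the direction labels, the four-cowboy input, and the `VALID` set of allowed pairs are hypothetical placeholders, not the puzzle’s data or the real semaphore table. Repeated directions are drawn from a count table, so each distinct ordering comes out exactly once, and any partial pairing that fails the check is abandoned before it is extended.

```python
from collections import Counter
from itertools import permutations
from math import factorial
from typing import Callable, Iterator, List, Sequence, Tuple

Prefix = Tuple[str, ...]


def distinct_count(items: Sequence[str]) -> int:
    """Number of distinct orderings of a multiset: n! / (k1! * k2! * ...)."""
    total = factorial(len(items))
    for k in Counter(items).values():
        total //= factorial(k)
    return total


def unique_perms(items: Sequence[str], prefix_ok: Callable[[Prefix], bool]) -> Iterator[Prefix]:
    """Lazily yield each distinct ordering of `items` exactly once.

    Items are drawn from a count table, so repeated items never produce
    the same ordering twice. A partial ordering rejected by `prefix_ok`
    is abandoned together with every ordering that extends it.
    """
    counts = Counter(items)
    keys = sorted(counts)  # sorted keys -> lexicographic output order
    prefix: List[str] = []

    def extend() -> Iterator[Prefix]:
        if len(prefix) == len(items):
            yield tuple(prefix)
            return
        for key in keys:
            if counts[key] == 0:
                continue
            counts[key] -= 1
            prefix.append(key)
            if prefix_ok(tuple(prefix)):
                yield from extend()
            prefix.pop()
            counts[key] += 1

    yield from extend()


def brute_force(items: Sequence[str], prefix_ok: Callable[[Prefix], bool]) -> List[Prefix]:
    """Slow reference for small inputs: test every one of the n! orderings."""
    return sorted({
        p for p in permutations(items)
        if all(prefix_ok(p[: i + 1]) for i in range(len(p)))
    })


# Hypothetical placeholder data, not the puzzle's: left cowboys in their
# fixed reading order, and the (repeating) directions to pair with them.
LEFT = ["N", "E", "S", "W"]
RIGHT = ["NE", "NE", "S", "W"]
# Hypothetical stand-in for "this pair of directions is valid semaphore".
VALID = {
    ("N", "NE"), ("N", "S"), ("E", "NE"), ("E", "W"),
    ("S", "NE"), ("S", "W"), ("W", "S"), ("W", "NE"),
}


def pair_ok(prefix: Prefix) -> bool:
    # Only the newest pair needs checking; earlier pairs already passed.
    i = len(prefix) - 1
    return (LEFT[i], prefix[i]) in VALID


if __name__ == "__main__":
    print(factorial(14))          # 87178291200
    print(distinct_count(RIGHT))  # 12
    print(list(unique_perms(RIGHT, pair_ok)))
```

The count table is what makes repeats cheap: 14! is about 87 billion, but the number of distinct orderings is 14! divided by the factorial of each repeat count. Deduplicating `itertools.permutations` with a set (as `brute_force` does, as a cross-check) gives the same answers on small inputs, but it still walks all n! orderings, while the prefix check is where a validity filter and English-language heuristics would cut the search down.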

Would this have worked if we coded it up? Well, no, because the cluephrase wouldn’t make sense without understanding the puzzle’s intended mechanic. I mention it because 3 people, all new to the puzzle, independently asked if we should try brute force.

As another example, we gave Number Hunting from Puzzles are Magic to a few different testsolvers. This is all very anecdotal, but many of the testsolvers from teammate had the same realization - once you solve enough to find the list of candidate answers, it’s much faster to export the list of all answers, and use coding to search for matching answer enumerations, instead of forward solving the clues. When I checked the stats for Number Hunting, I was not surprised that a teammate team got the fastest solve. That’s just how we are as a team.
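The enumeration search described above takes only a few lines to script. Here is a minimal Python sketch, assuming the answers are available as a plain list of strings and that an enumeration is just the letter count of each word; the function names and the candidate list (a few phrases borrowed from this post) are hypothetical, not Number Hunting’s actual data or anyone’s actual solving script.

```python
import re
from typing import Iterable, List, Optional


def enumeration(answer: str) -> List[int]:
    """Letter count of each word, ignoring punctuation: 'GRACE UNDER FIRE' -> [5, 5, 4]."""
    lengths = [len(re.sub(r"[^A-Za-z]", "", word)) for word in answer.split()]
    return [n for n in lengths if n > 0]


def search(answers: Iterable[str], enum: List[int], pattern: Optional[str] = None) -> List[str]:
    """Return answers whose word lengths equal `enum`.

    `pattern` optionally constrains the letters (spaces and punctuation
    removed), with '?' for unknown positions, e.g. 'S???F???'.
    """
    hits = []
    for answer in answers:
        if enumeration(answer) != enum:
            continue
        if pattern is not None:
            letters = re.sub(r"[^A-Za-z]", "", answer).upper()
            if len(letters) != len(pattern) or any(
                p != "?" and p.upper() != c for p, c in zip(pattern, letters)
            ):
                continue
        hits.append(answer)
    return hits


# Hypothetical stand-in candidate list, not the Number Hunting data.
CANDIDATES = ["GRACE UNDER FIRE", "HOMECOOKED MEAL", "SPITFIRE", "LIGHTNING DUST"]

if __name__ == "__main__":
    print(search(CANDIDATES, [5, 5, 4]))                # ['GRACE UNDER FIRE']
    print(search(CANDIDATES, [8], pattern="S???F???"))  # ['SPITFIRE']
```

The point is that each solved clue only needs to contribute an enumeration (and maybe a letter or two) to narrow the candidate list, rather than a full forward solve.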

The pennies were definitely not a puzzle where coding trickery or tool knowledge got you to the answer faster. You just had to solve it. We didn’t.

Edit: Correction from the team that got fastest solve on Number Hunting: they actually solved the puzzle by guessing the answer from flavor, after solving a quarter of the clues. So that solve was more about enthusiastic guessing than anything else. My bad!

Runaround

After Galactic found the coin, Left Out called us to give us hints, and we decided we’d rather jump straight to the runaround instead of solving Workshop.

I did the runaround for Cascade Bay. Our gimmick was on the easier side. We played the role of water inside a water pipe, starting in a water pipe above a sign on MIT campus. Along the way, we looped through an all-gender restroom, got lost in some walls, came out of some sprinklers, and solved the puzzle by getting our last letters from some nautical ships.

Meeting back at Left Out’s HQ for the finale, we brought back the Heart of the Park. At one climactic moment, we were supposed to chant the Workshop answer, but because we skipped past it, we ended up chanting “SPOILER FREE ANSWER PHRASE, SPOILER FREE ANSWER PHRASE”, which was delightfully goofy.

I know there’s video of the finale in the wrap-up. What it doesn’t capture is that, for some reason, there are twelve projector screens in that room. It is one thing to watch the final Penny Park video. It is another to have it broadcast to you from twelve screens covering every wall in sight.

Closing Thoughts

I really liked this year’s Mystery Hunt. The puzzle structure got stretched in neat ways, there were a lot of in-person puzzles this year (a side-effect of automatic answer checking freeing up people elsewhere?), and the production value was nuts. Seriously, they made puzzle-specific art in a consistent style for every puzzle in the Hunt? I’m not sure people realize how insane that is. It’s completely insane.

I am a bit worried by the number of puzzles. The number of puzzles in Hunt has definitely trended upwards, and I’m not convinced that’s sustainable. One lesson I remember reading about Random’s 2015 Mystery Hunt was that easy puzzles took the same amount of time to write as hard puzzles, and they were only able to do the School of Fish round because they had a ton of writers. I’m pretty sure this year’s hunt had more puzzles than 2015, and I don’t think Left Out had more writers than Random did…

In lieu of the Coin, we distributed the pressed pennies as mini-Coins. I joked that “oh boy, I get to keep a reminder of the puzzle that caused us incredible pain”, but you know what, it really doesn’t hurt at all anymore, and the penny still looks nice.

As for what’s next: teammate and Galactic had an agreement that if either team won, we’d help each other write Hunt. Some of teammate is joining Galactic to write, but some are planning to solve instead. The team is reckoning with an uncomfortable chain of facts: there’s a chance we could win Hunt, and the team wants to win Hunt if we’re in position to, but we were implicitly relying on help from Galactic to write Hunt if we won. Assuming that no one wants to write MIT Mystery Hunt two years in a row, if teammate wins next year, the cavalry’s not coming from Galactic. It will be less WHOOSH Galactic Trendsetters! NYEEEOW, and more WHOOSH please let us sleep ZZZZZZZ.

The skill set to win Hunt is not the same as the skill set to write Hunt. There’s definitely heavy overlap, since experienced solvers know what good puzzles look like, but that still leaves all the time estimation, organization, theming, and tech required to make the Hunt tick. MIT Mystery Hunt exists because people keep making it happen, and if you can win Hunt, you have the responsibility of continuing to make it happen. The most direct consequence of our strong performance this year is that teammate has had serious discussions about how we can build more puzzle writing expertise.

And, that’s it for MIT Mystery Hunt 2020. Jeez, this got really, really long. For puzzlers looking for new hunts, Cryptex Hunt starts in a few days. It’s my first time doing it, so no idea what to expect, but it should be fun.

-

MIT Mystery Hunt 2020, Part 1

For Mystery Hunt 2020, I hunted with teammate, the same team I hunted with last year. We ended up smashing all my expectations. By puzzle count, we were 2nd. By metas, we were 2nd to solve all metas. By Hunt finish time, we were 3rd, due to getting stuck on the last puzzle (more on that later). This was the first year where teammate got a call from HQ warning us that we were in contention for winning, and for much of Saturday, we were the lead team, which is a rather weird prospect to consider. I didn’t believe it until someone came back from trivia for Weakest Carouselink and said we played against Palindrome, indicating we unlocked the puzzle at the same time they did.

Based on questions Left Out asked us during Hunt interactions, I don’t think many Mystery Hunt veterans know where teammate came from. Very briefly, teammate is a mix of people from Puzzlehunt CMU, some Bay Area puzzlers from Berkeley, and friends branching out from there. Before teammate, these people hunted with ✈✈✈ Galactic Trendsetters ✈✈✈, but one year they decided to split into their own team, and teammate and Galactic have been sister teams ever since.

The two teams have similar team culture and age demographics. For the former, both are meme-heavy and very willing to backsolve. For the latter, the majority of both teams are younger than 30. I’m actually not sure if anyone on teammate is over 30, now that I think about it. This showed itself most strongly when Left Out came to deliver the Baby Shower Balloon. They started a clue with “BTS…” and three of us immediately guessed K-Pop. Then we got a question about a VH1 television show that showed music videos (Pop-Up Video), and our reaction was “What’s VH1?”

Before Hunt, members of Galactic and teammate leadership were seriously considering merging the two teams. The downside would be a less fun Hunt, since we’d be way over the recommended limit of 70-80 people. The upside was that we’d have much better odds of winning. We didn’t merge, but based on the solve graphs, we clearly didn’t need to merge to be a contender for the coin this year. We knew Galactic wanted to be more competitive this year, and weren’t surprised they got to the coin first.

* * *

I felt this year’s Hunt was really good, both in the inventiveness of its structure and in the polish of its puzzles. However, it still feels a bit sour to me because of how the Hunt ended for us.

On Friday night, we felt we were doing well. I stayed up until 6 AM, then slept for 4 hours and came back. On Saturday night, we knew we were doing well. We rightly guessed we had unlocked every round, we were making good progress on all the metas, and it started to look like if I went to bed, I would wake up after we finished Hunt. So I pulled an all-nighter, and I know other people pulled one as well. At 9 AM, we solved our last meta, and frantically sent someone to get the last penny.

Every time you solved a round in this hunt, you got to pick up a pressed penny. Each penny had 3 arrows, 3 images, and 3 sentences of text. In short, it was definitely puzzle data, but I was expecting a shell metameta, and didn’t bother looking at them too closely. Additionally, our home base was a fairly long walk from Hunt HQ, and Left Out told us it would be fine if we picked the pennies up in batches, to avoid having to send someone there and back for each meta solve.

We picked up the final penny, didn’t unlock a shell, and realized it was a pure metapuzzle, at which point we started looking at the pennies and got horribly stuck. The Workshop isn’t on the hunt website yet, but the solution is described in the wrap-up. We got the penny layout within the first hour, and were stuck on extraction for the rest.

About 3 hours in, we had concluded two things. First, if we had been in the lead, there was no way we had a three-hour lead over 2nd place. Second, the coin hadn’t been found yet. Assuming a reasonable-length runaround, that implied that we were one of several teams stuck on the same pennies puzzle, and Hunt would come down to whichever team got the break-in first. And every other contender for the coin would have made the same inference, and was likely staring at the pennies just as intensely. That was great for motivation, but bad for anybody looking to sleep, because any moment could be the moment we got the a-ha.

At 3 PM, Galactic found the coin. Our entire team had been looking at the Workshop pennies for 6 hours. We knew it was the only puzzle that mattered for Hunt completion, and we still couldn’t get it. Feels bad. As soon as the coin was found, Left Out called us, gave us some hints, and fast-forwarded us through the endgame, which was both very fun and quite impressive.

So, what happened? Word on the grapevine was that Workshop testsolved perfectly fine within Left Out, and they were surprised it took teams as long as it did to solve. In my opinion, part of what played into this was that we knew the pennies would be part of a puzzle, but we assumed we could look at it later, when we had complete information. By the time we actually looked at the pennies, we were very sleep-deprived due to rushing for the Cactus Canyon meta, so we weren’t as sharp. As time passed, we got increasingly burnt out on trying to figure out the same freaking pennies for hours.

In many ways, this was similar to what happened to my team for Galactic Puzzle Hunt 2019. We put off learning Puflantu until right before looking at metas, under the logic that it’d be easier to learn it all at once after unlocking more artifacts. In practice, it turned the language learning into a big slog. What do you do, when there’s nothing to do but learn Puflantu, and Puflantu is hurting your brain?

I’m trying to figure out why the clocks for April Fool’s Day Town from Mystery Hunt 2019 didn’t have this issue for us. It has a similar structure, where a small bit of information from every round gets pulled into one final puzzle. However, we solved that meta without too much pain in the final hours of Hunt, once we had enough prank answers. I think the crucial difference was that during the Hunt, we started looking at clocks early because there was no indication the clocks didn’t matter for the current town. We tracked them early, giving us all the data we needed to extract once Sunday came around. In contrast, by getting pennies at the end of a round, we got a signal that we didn’t need to look at them until the end. (And if they were part of a shell meta, looking at them early may have been wasted time, compared to solving other puzzles.)

* * *