Writing MIT Mystery Hunt 2023

This post is about 55,000 words long, and is riddled with spoilers for pretty much every aspect of MIT Mystery Hunt 2023. There are no spoiler bars. You have been warned. Please pace yourselves accordingly.

I feel like every puzzle aficionado goes through at least one conversation where they try to explain what puzzlehunts are, and why they’re fun, and this conversation goes poorly. It’s just a hard hobby to explain. Usually, I say something about escape rooms, and that works, but in many ways the typical puzzlehunt is not like an escape room? “Competitive collaborative spreadsheeting” is more accurate, but it’s less clear why people would find it entertaining.

Here is how I would explain it if I had more time. In a puzzlehunt, each puzzle is a bunch of data. Unlike puzzles people normally think of, a puzzle in a puzzlehunt may not directly tell you what to do. However, if it’s a good puzzle, it will have exactly one good explanation, one which fits better than every alternative. Every part of the puzzle will point to some core idea or ideas, in a way that can’t be a coincidence. In other words, a puzzle is something that compresses well. As a solver, your job is to find out how.

Puzzles can be a list of clues, a small game, a bunch of images, whatever. The explanation for how a puzzle works is usually not obvious and fairly indirect, but there is a guiding contract between the puzzle setter and puzzle solver that the puzzle is solvable and its solution will be satisfying. At the end of the puzzle, you’ll end with an English word or phrase, but that is more to give a puzzle its conclusion. People do not solve puzzles to declare “The answer is THE WOLF’S HOUR!”. They solve puzzles because figuring out why the answer is THE WOLF’S HOUR is fun, and when you find the explanation (get the a-ha), you feel good.

There’s a reason professors and programmers are overrepresented among puzzlehunters. Research and debugging share a similar root of trying to explain the behavior of a confusing system. It stretches the same muscles. It’s just that puzzles are about artificial systems designed to be fun and solvable in a few hours, whereas research is about real systems that may not be fun and may not be solvable.

That gives more of an answer to why people do puzzles. Why do people write puzzles?

I have a harder time answering this question.

Working on Mystery Hunt 2023 started as a thing on the side, then evolved into a part-time job, then a full-time job towards the end. Writing a puzzlehunt is incredibly time consuming. You’re usually not getting much money, and your work will, in the end, only be appreciated by a small group of hobbyists with minimal impact elsewhere. It all seems pretty irrational.

In some sense, it is. That doesn’t mean it’s not worth doing.

Post Structure

Oooh, a section of the post describing the post itself. How fancy. How meta.

I have tried to present everything chronologically, except when talking about the construction of specific puzzles I worked on, in which case I’ve tried to group my comments on the puzzle together. Usually I was juggling multiple puzzles at once, so strict chronology would be more confusing than anything else.

This post aims to be complete, and that means it may not be as entertaining. I’m not sure of the exact audience for this post, and figured it’d be useful if I just dumped everything I thought was relevant. If you are the kind of person who reads a Mystery Hunt retrospective that’s posted in April, it’s likely you’ll want to see the nitty gritty details anyways.

Most of the post is going to be a play-by-play of things I worked on. The analysis and commentary is at the end.

- Post Structure

- December 2021

- January 2022

- February 2022

- March 2022

- April 2022

- May 2022

- June 2022

- July 2022

- August 2022

- September 2022

- October 2022

- November 2022

- December 2022

- January 1-8, 2023

- Monday Before Hunt

- Tuesday Before Hunt

- Wednesday Before Hunt

- Thursday Before Hunt

- Friday of Hunt

- Saturday of Hunt

- Sunday of Hunt

- Monday of Hunt

- February 2023

- March 2023

- Thoughts on Hunt

- Ways to Improve the 2023 Hunt

- Unordered Advice

- So…Why Do People Write Puzzles?

- AIs and Puzzles

- The Start of the Rest of My Life

December 2021

Oh Boy, Mystery Hunt is Soon!

Writing and post-hunt tech work for Teammate Hunt 2021 is over and has been over for a while. Life is good. Team leadership sends out a survey to see if teammate has enough motivation to write Mystery Hunt.

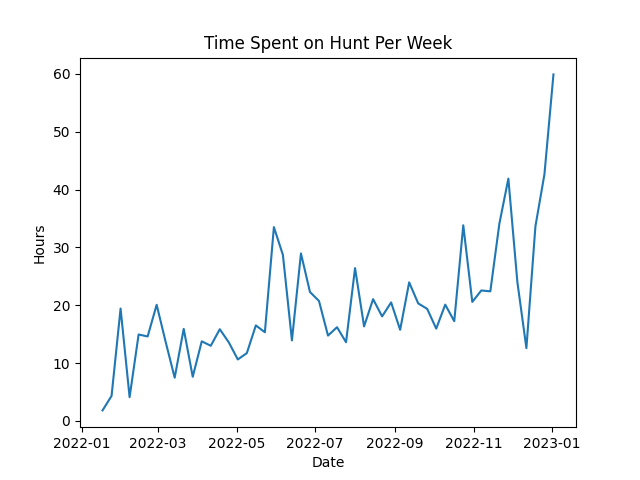

I use a time tracker app for a few things in my life, and puzzle writing is one of them. My time spent on Teammate Hunt clocked in at 466 hours. I do some math and find it averaged to 15 hours/week. This is helpful when trying to decide how to answer the question for how much time I’d commit to Mystery Hunt if we won.

I already had some misgivings around how much time and headspace Teammate Hunt took up for me. On the other hand, it is Mystery Hunt. Noting that I felt like I did too much for Teammate Hunt, I said I expected to work 10 hours/week on Mystery Hunt if we won this year.

The survey results come in, and there is enough interest to go for the win. I have zero ideas for a puzzle using Mystery Hunt Bingo, but figure that maybe we’ll win Hunt, and maybe there will be a puzzle using my site, so I’d better remove the “this is not a puzzle” warning early, just in case. I didn’t want to face any warrant canary accusations if we actually won.

January 2022

The Game is Afoot

Holy shit we won Hunt!!!!!

I write a post about Mystery Hunt 2022, where I make a few predictions about how writing Mystery Hunt 2023 will go.

After writing puzzles fairly continuously for 3 years (MLP: Puzzles are Magic into Teammate Hunt 2020 into Teammate Hunt 2021), I have a better sense of how easy it is for me to let puzzles consume all my free time […] Sure, making puzzles is rewarding, but lots of things are rewarding, and I feel I need to set stricter boundaries on the time I allocate to this way of life - boundaries that are likely to get pushed the hardest by working on Mystery Hunt of all things.

[…] I’m not expecting to write anything super crazy. Hunt is Hunt, and I am cautiously optimistic that I have enough experience with the weight of expectations to get through the writing process okay.

Before officially joining the writing Discord, I set myself some personal guidelines.

Socializing takes priority over working on Mystery Hunt. I know I can find time for Mystery Hunt if I really need to. A lot of puzzle writing can be done asynchronously, and I’m annoyingly productive in the 12 AM - 2 AM time period.

No more interactive puzzles, or puzzles that require non-trivial amounts of code to construct. The goal is to make puzzles with good creation-time to solve-time ratios. Puzzles that require coding are usually a nightmare on this axis, since it combines the joys of fixing code with the joys of fixing broken puzzle design.

No more puzzles where I need to spend a large amount of time studying things before I can even start construction. Again, similar reason, this process is very time consuming for the payoff. I’d estimate I spent 80 hours writing Marquee Fonts, since I started with knowing nothing about how fonts worked at the start, and had to teach myself much more about fonts than I’d ever wanted to know to make the puzzle a reality.

No more puzzles made of minipuzzles. Minipuzzles are a scam. “Oh, we don’t have any ideas that are big enough to fill one puzzle. Let’s make a bunch of minipuzzles instead because it’s easy to come up with small ideas!” Then you get halfway through, and realize that ideation of small puzzles is easy, but execution takes way longer. The process of finding suitable clues is somewhat independent of puzzle difficulty, and you have to do way more of it. I also felt it was a crutch I was relying on too often when designing puzzles.

No more puzzles with very tight constraints. It collectively took 60-100 person hours to figure out mechanics and find a good-enough construction for The Mystical Plaza, even with breaking some puzzle rules along the way. Usually, the time spent fitting a tight constraint does not directly translate into puzzle content.

These guidelines all had a common theme: keep Hunt manageable, and make puzzles that needed less time to go from idea to final puzzle.

I would end up breaking every one of these guidelines.

Team Goals and Theme Proposals

The very first thing we did for Hunt was run a survey to decide what Hunt teammate wanted to write. What was the teammate experience that we wanted solvers to have?

We arrived at these goals:

- Unique and memorable puzzles

- Innovation in hunt structure

- High production value

- Build a great experience for small / less intense teams

Unique and memorable puzzles: Mystery Hunt is one of the few venues where you can justifiably write a puzzle about, say, grad-level complexity theory. That’s not the only way to make a unique and memorable puzzle, but in general the goal was to be creative and avoid filler.

Innovation in hunt structure: This is something that both previous Teammate Hunts did, and as a team we have a lot of pride in creating puzzles that stretch the boundaries of what puzzles can be.

High production value: teammate has a lot of software engineering and art talent, which let us make prettier websites and introduce innovations like copy-to-clipboard. We wanted to make a Hunt that lived up to the standards set by our previous hunts.

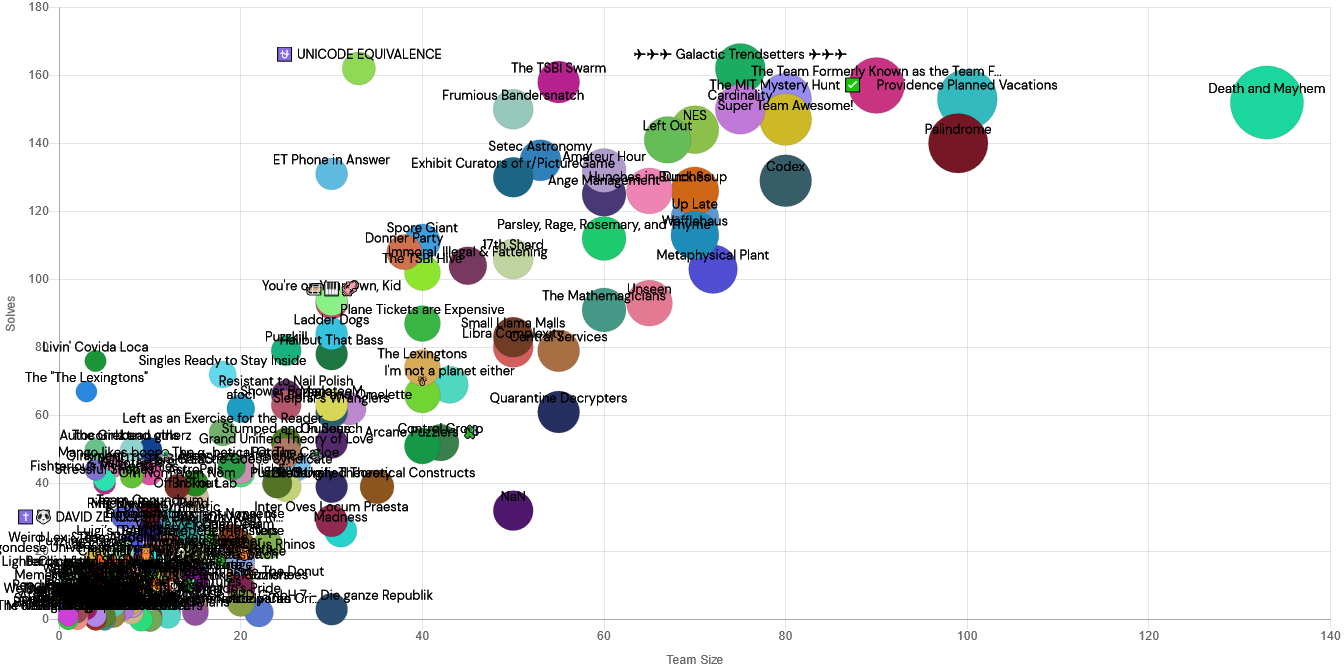

Build a great experience for small / less intense teams: We generally felt that Mystery Hunt had gotten too big. When we won Hunt, we were around 60% the size of Palindrome’s winning team. Correspondingly, we spent a while discussing how to create fewer puzzles while still creating a Hunt of satisfying length, as well as whether we could have more mid-Hunt milestones.

We then decided team leadership.

Hey everyone! Today is the deadline for submitting interest in leadership. Everyone who indicated interest will meet tomorrow and discuss the best division of responsibilities (not everyone will end up with a role, and some roles may have more than one person, based on examples from previous Hunts.) We decided to go with a closed meeting rather than a democratic vote to ensure that we reached the optimal allocation of responsibilities among everyone interested. We’ll let you know the final leadership team, and then have an official Writing Server Kickoff in the next few days!

I deliberately did not fill out the form, because it implied a baseline level of commitment that was above my 10 hr/week target.

The next step was theme proposals. This is always an interesting time in hunt development, since it sets the agenda of the entire upcoming year. Things can change later, but writing a puzzlehunt is an especially top-down design process. You decide your story, which decides your metametas and metas, which decides your feeders. It’s all handing off work to your future selves.

Historically, at the start of theme writing, I say I don’t have theme ideas. Then I get an idea right before the deadline and rush out a theme proposal. This happened in Teammate Hunt 2021 and it happened for Mystery Hunt. The theme I pitched for Teammate Hunt 2021 was not revived for Mystery Hunt (I didn’t think it scaled up correctly), but the Puzzle Factory theme is recycled from a Teammate Hunt 2021 proposal. We talked a bit about whether this was okay, since some organizers for Teammate Hunt 2021 were not writing Mystery Hunt this year. In the end we decided it was fine. At most there would be plot spoilers, not meta spoilers.

We liked the story structure of Mystery Hunt 2022 a lot, and almost all theme proposals were structured around a “three Act” framework, where Act I introduced the plot, Act II built up to a midpoint story event, and Act III resolved that event.

A few members with past Mystery Hunt experience mentioned that theme ideation could get contentious. People naturally get invested in themes, and spend time polishing their theme proposal. People working on other themes would observe this, and feel obligated to polish their proposals. This could escalate into a theme arms race, with lots of time spent on themes that would ultimately not get picked.

To try to avoid this, a strict 1 page limit was placed on all theme proposals. People were free to read discussion threads of longer freeform brainstorming, but there would be no expectation to do so, and all plot and structure proposals needed to fit in 1 page.

Did this work? I would say “maybe”. It definitely cut down on theme selection time, and reduced work on discarded themes, but it also necessarily forced theme proposals to be light on details. Team memes like “teammate is the villain” seemed to work their way into every serious theme proposal. Maybe that was genuinely the story we wanted to tell, but it could also have been an artifact of proposing themes while the memes were fresh. There may have been more diversity in theme ideas if they were written over a longer period of time. Palindrome’s theme was picked by the end of February, according to Justin Ladia. Galactic’s theme was picked February 26, according to CJ Quines. teammate’s theme was selected the evening of January 31.

I was not on the story team, but in our post-Hunt retrospective, members of the story team mentioned they were under a lot of pressure to fill in plot details that weren’t in the theme proposal, because, well, there wasn’t space for them in the proposal! Even the details that do exist differ a lot from where the story ended up. Here is how I would summarize the final version of the Hunt story.

teammate announces a Museum themed puzzlehunt written by MATE, a puzzle creating AI. During kickoff, teammate is really concerned with making a “perfect” Mystery Hunt that isn’t doing anything too crazy. The Museum is Act I of the Hunt. Over the course of solving, teams discover the Puzzle Factory, the place where Mystery Hunt puzzles are created. The Puzzle Factory is not a place that solvers were supposed to discover, and teammate does their best to pretend it doesn’t exist when interacting with teams. The Puzzle Factory is Act II of the Hunt, and is explored simultaneously with Act I. As they explore the factory, teams learn that MATE is overworked, and other AIs that could have helped MATE were locked away by teammate due to being too weird.

Solvers reconnect the AIs, and this prompts teammate to shut down MATE and the Puzzle Factory. They berate teams for trying to turn on the old AIs, then leave and declare Mystery Hunt is cancelled.

However, there is some lingering power after Mystery Hunt is shutdown, which solvers can use to slowly turn the Puzzle Factory and other AIs back on. This starts Act III of the Hunt, with puzzles written by the old AIs. Each AI round is gimmicked in some way, ending in a feature request that the AI wants to add to the Puzzle Factory. When all AI rounds are complete, MATE comes back, and after solving a final capstone, teammate comes back and admits that they were wrong about the old AIs. The Puzzle Factory makes one more puzzle, which has the coin, and MATE gets their long-deserved break.

Now, here is the start of the original proposal:

Act I begins with the announcement of an AI called MATE that can generate an infinite stream of perfect puzzles, as well as provide real-time chat assistance for hints, puzzle-solving tools, etc). During kickoff, teammate gives a business presentation with MATE in the background– but at the end, the video feed glitches briefly and other AIs show up for a split second (“HELP I’M TRAPPED”); teammate doesn’t notice. Stylistically, the first round looks like a futuristic, cyberspace factory. As teams solve the initial round of puzzles, errata unlock (later discovered to be left by AIs locked deeper in the factory), hinting that there’s something “out of bounds”. No meta officially exists for this round (the round is “infinite”), but solving and submitting the answer in an unconventional way leads to breaking out. (To prevent teams from getting stuck forever, we can design the errata/meta clues to get more obvious the more puzzles they solve.) Solving this first meta also causes MATE to doubt their purpose and join you as an ally in act II.

Quite a lot changed from the start to the end. The infinite stream idea was cut because we couldn’t figure out the design. Kickoff did not show the other AIs at all. The surface theme was changed to something completely different. In a longer ideation process, perhaps more of this design work could be done by the entire team, rather than just the story team. Maybe allowing wasted effort is worth it if it gets the details filled out early?

Themes were rated on a 1-5 scale, where 1 = “This theme would directly decrease my motivation to work on Hunt (only use if serious)” and 5 = “I’ll put in the hours to make this theme work”. I don’t remember exactly how I voted, but I remember voicing some concerns about the Puzzle Factory. The plot proposal seemed pretty complicated compared to previous Hunts. I wasn’t sure how well we’d be able to convey the story - You Get About Five Words felt accurate for Mystery Hunt, where some people will speedrun the story in favor of focusing on puzzles. I was also hesitant about whether we’d have enough good ideas for gimmicks to fill out the AI rounds in Act III. It seemed like a good theme for a hunt with 40 puzzles, but I didn’t know if it worked for a Mystery Hunt with 150+ puzzles.

I’m happy I was wrong on both counts. Feedback on the story has been good, and I feel the AI round gimmicks all justified themselves. I was imagining a Mystery Hunt where Act III was the size of Bookspace (10 rounds) and limiting it to 4 rounds did a lot for feasibility.

The Puzzle Factory did not win by a landslide, but it was the only theme with no votes of 1, and had more votes of 5 than any other theme. Puzzle Factory it is!

Hunt Tech Infrastructure

I’m going to talk a lot about hunt tech, a very niche topic within the puzzle niche. This won’t be relevant to many people. Still, I’m going to do so because

- It’s my blog.

- By now I’ve worked with four different puzzlehunt codebases (Puzzlehunt CMU, gph-site, tph-site, and spoilr), so I’ve got some perspective on the different design decisions.

The first choice we had to make was whether we’d use the hunt codebase from Palindrome, or use the tph-site codebase we’d built over Teammate Hunt 2020 and Teammate Hunt 2021. Our early plan is to mostly build off tph-site. The assumption we made is that most Mystery Hunt teams do not have an active codebase, and default to using the code from the previous Mystery Hunt. However, teammate had tph-site, knew how to use it, and in particular had accumulated a lot of helper code to make crossword grids, implement copy-to-clipboard, and create interactive puzzles.

The only recent team that seemed like they had faced a similar decision was Galactic, who decided to build off the spoilr codebase and make silenda rather than use gph-site. After asking some questions, it sounded like the reason this happened was because parts of their tech team were already familiar with spoilr. So for our situation, it seemed correct to use whatever code we knew best, which was tph-site.

A few writers from Huntinality 2.0 are asking if they can see the work we did to convert our frontend to React. This motivated us to start open-sourcing tph-site. It’s not too much work, and feels like a good thing to do.

A React Tangent

Almost every hunt codebase is written in Django. It’s a Python web framework that does a lot of work for you. In Django, Python code defines your database schema, user model, what backend code you want to run when users make a request, and what URLs you want everything to be accessed from. Although it is helpful to know what happens under the hood, Django makes it possible to build a site without knowing what happens under the hood. It’s also almost all Python, one of the most friendly beginner languages. I first learned Django 11 years ago and it’s still relevant today.

The default recommended approach in Django is that when a request comes in, you render an HTML response based on a template file that lives on your backend. The template gets filled out on the server and then gets sent back as the viewed webpage.

tph-site still uses Django as its backend, but differs in using a React + Next.js based frontend. React is a Javascript library whose organizing principle is that you describe your page in components. Each component either has internal state or state passed from whatever creates the components. A component describes what it ought to look like according to the current state, and whenever the state is updated, React will determine everything that could depend on that state and re-render it. The upside: dynamic or interactive web pages become a lot easier to build, since React will handle a lot of boilerplate Javascript and state management for you. The downside: extra layers of indirection between your code and the resulting HTML.

Next.js is then a web framework that makes it easier to pass React state from the server, and support rendering pages server-side. This is especially useful for puzzlehunts, where you want to do as many things server-side as possible to prevent spoilers from leaking to the frontend. (As for the merits of SPAs versus a multi-page setup, I am not qualified enough to discuss the pros and cons.)

The tph-site fork exists because teammate devs wanted to use React to implement the Playmate in Teammate Hunt 2020. Porting gph-site to React was quite painful, but I don’t think Playmate was getting implemented without it, and we’ve since used it to support other interactive puzzles. In general, I believe we made the codebase more powerful, but also increased the complexity by adding another framework / build system. (To use tph-site, you need to know both Django and React, instead of just Django.) One of teammate’s strengths is that we have a lot of tech literacy and software engineering skills, so we’re able to manage the higher tech complexity that enables the interactive puzzles and websites we want to make. For new puzzlehunt makers, I would generally recommend starting with a setup like gph-site, until they know they want to do something that justifies a more complicated frontend.

February 2022

PuzzUp

PuzzUp is Palindrome’s fork of Puzzlord, and is a Django app for managing puzzles and testsolves. We built off their fork and released our version here after Mystery Hunt. We considered giving PuzzUp a teammate brand name, and didn’t because there were more important things to do.

The mantra of puzzlehunt tech is that it’s all about the processes. The later in the year it gets, the busier everyone is with puzzle writing, and good luck implementing feature requests during Hunt. Early in the year is therefore the best time to brainstorm ways to reduce friction in puzzle writing and hunt HQ management.

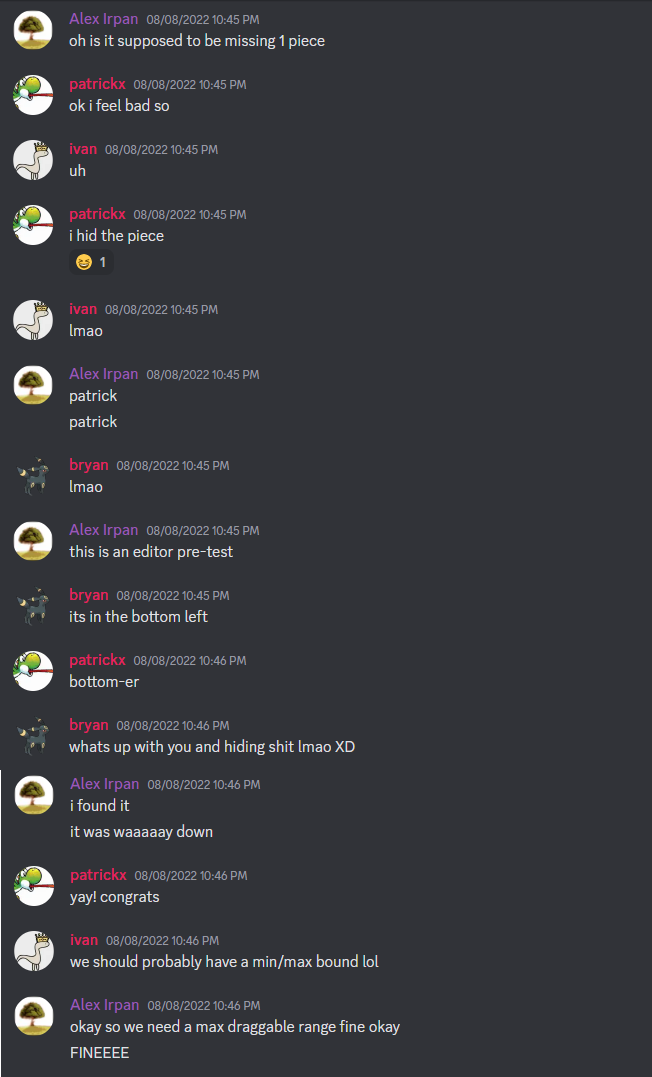

The PuzzUp codebase had some initial Discord integrations to auto-create Discord channels when puzzles were created in PuzzUp. We wanted to extend this integration to auto-create testsolve channels for each puzzle. However, Discord limits servers to have a max of 500 channels. Based on an extrapolation from Teammate Hunt, we’d have more than 500 combined puzzle ideas + testsolves by the end of Mystery Hunt writing. (I just checked out of curiosity, and we hit over 800 combined ideas + testsolves, with 338 puzzle ideas and almost 500 testsolves by the end of Hunt.)

We poked around and found Discord has much looser limits on threads! So anything that lets us permute channels into threads lets us get around the Discord limits.

Here’s what we landed on: all testsolves are threads. Each thread is made in a #testsolve-mute-me channel. Muting the channel disables all notifications from the channel. Whenever a testsolve session is created, the PuzzUp server would start a thread, tag everyone who should be in the testsolve, then immediately delete the message that linked to thread creation. The thread would still exist, and could be searched for, but no link would appear in the text channel. The relevant logic is here if you’re curious. We also extended the codebase to have Google Drive integration, to auto-create testsolve spreadsheets for each new testsolve.

I say “we”, but I did none of this work. I believe it was mostly done by Herman. Much later in the year, I updated the Google integration to auto-create a brainstorming spreadsheet for new puzzles in our shared Drive, because I got annoyed at manually making one and linking it in PuzzUp each meeting. You don’t know what will be tedious until you’ve done it for the 20th time.

Puzzle Potluck

A puzzle potluck (no not that one) gets announced for early March. The goal is to provide a low-stakes, casual venue for people to start writing puzzle ideas. There are no answer constraints, write whatever you want! There’s not much to do in tech yet, so I started working on three ideas. One does not work and does not make it into Hunt. One is an early form of 5D Barred Diagramless with Multiverse Time Travel. The last goes through mostly unchanged.

Quandle

Puzzle Link: here

Perhaps you remember that I set a personal guideline for “no more interactive puzzles”, and think it’s strange that I worked on an interactive puzzle within a month. Yeah, uh, I don’t know what to tell you.

In my defense, as soon as “Quantum Wordle” entered my brain, I was convinced it would be a good puzzle and that I had to make it. I found an open-source Wordle clone and got to work figuring out how to modify it to support a quantum superposition of target words. This took a while, since I started with the incorrect assumption that letters in a guess are independent of each other. This isn’t true. Suppose the Wordle is ENEMY, and you guess the word LEVEE. The Wordle algorithm will color the first two Es yellow, and the last one gray. When extended in the quantum direction, you can’t determine the probability distribution of one E without considering the other Es. They’re already dependent on each other. (Grant Sanderson of 3Blue1Brown would put out a video admitting to a similar mistake shortly after I realized my error, so at least I’m in good company.)

After I got the proof of concept working, I considered how to do puzzle extraction. My first thought was to have the extraction be based on finding all observations that forced exactly one reality, but after thinking about it more, I realized it was incredibly constraining on the wordlist. This certainly wasn’t a mechanic I was going to figure out in time for the potluck deadline in March, so I went with a set of words with no pattern in an arbitrary order with arbitrary indices. That way I could do whatever cluephrase I wanted. Making that cluephrase point to specific words felt like the most interesting idea, and after a bit more brainstorming, the superposition idea came out.

Internally, the way the puzzle works is that the game starts with 50 realities. On each guess, the game computes the Wordle feedback for every target word, then averages the feedback across all realities. When making an observation, it repeats the calculation to find every target word consistent with that observation, deletes all other realities, and recomputes the probabilities for all prior guesses. Are there optimizations? Probably. Do you need to optimize a 50 realities x 6 guesses x 5 letter problem? No, not really. This will become a running theme. For Hunt, I optimized for speed of implementation over performance unless it became clear performance was a bottleneck.

The puzzle could have shown 50 blanks, revealing each blank when you solved a word, but I deliberately did not do that to make it harder to wheel-of-fortune the cluephrase. It is harder to fill gaps when you don’t know how many letters are in each gap.

During exploration, I generated random sets of 50 words, to get a feel for how the game played. My conclusion was that one observation was too little information to reliably constrain to one reality, while two observations gave much more information than needed. I considered making the word list more adversarial, but in my opinion, the lesson of Wordle is that it’s fine to give people more information than they need to win. People are not information-maximizing agents [citation needed]. I left it as-is.

As one of the first tests of our PuzzUp setup, I did a puzzle exchange with Brian. He tested Quandle and I tested Parsley Garden. Around 60 minutes into the Quandle test, I asked how the puzzle was going. Brian said he was stuck, and after asking a bunch of questions, I figured out that he has never clicked a guess after making one, meaning he’s never seen the probability distributions or used an observation. Oops. I added a prompt to suggest doing that, and the solve was better from there.

After potluck, I asked for a five letter answer, but none were available. The best option left was two five letter words. Aside from the design changes needed to make that work, the rest of the puzzle mechanics stayed the same, and the work later in the year was mostly figuring out how to embed it in our codebase and share team state.

People liked this puzzle! I expected that, it’s why I broke my “no interactives” rule. What I did not expect was that it would be one of the more discussed puzzles of the Hunt, especially in non-puzzle contexts like the World Poker Tour blog. I think that happened because the puzzle’s idea is easy to motivate and explain to people who don’t know what puzzlehunt puzzles are.

I’ve been told that technically, the quantum interpretation of Quandle is inaccurate. I believe the core issue is that you’re not supposed to be able to observe the probability distribution of a letter before observing the outcome. The distribution should immediately collapse to a fixed outcome as soon as you look at it. You certainly shouldn’t be able to make an observation that collapses from one superposition of 50 realities to another superposition of < 50 realities. This all sounds accurate to me and I don’t care. Get your pedantry out of here, I’m trying to make a puzzle.

March 2022

Of Metas and MATEs

The internal puzzle potluck goes well! Editors tell me that there were multiple Wordle-themed potluck ideas, the Hunt should only have one, and Quandle is the one they’re going to go with. Hooray! Apologies to the other puzzles in the Wordle graveyard.

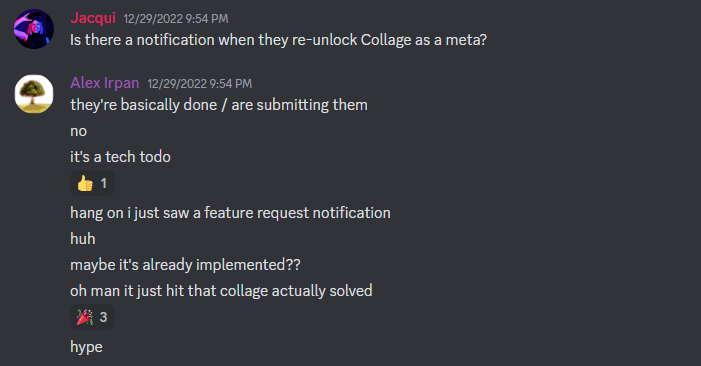

Chat implementation for MATE and other AIs is coming along smoothly. teammate has multiple people with NLP experience, including with large language models, and for this reason we immediately know we are not going to touch them with a ten foot pole. We are going to stick to hardcoded chat responses that trigger according to a hardcoded chat interaction graph. At most we may use NLP to determine chat intents, no more. As a proof of concept, we use text-adventure style intents, where only specific keywords will trigger the right behavior. Everyone involved with chat then gets busy, and we never move past this. I do wish we’d used one of the lightweight ML libraries, to reduce the “sorry, I don’t understand” replies, but it was correct to deprioritize this.

User: hi

MATE: It’s nice to meet you!

User: are you a puzzle

MATE: I’m sorry, I don’t know what you mean.

User: are you a puzzle?

MATE: Come again?

User: usually if something isn’t a puzzle it will say “this is not a puzzle”

MATE: Alright.

User: is this a puzzle?

MATE: I’m sorry, I don’t know what you mean.

Meta writing is also now going in earnest. I mean, it was going ever since theme finalization, but now it’s extra going. Very approximately, these are the steps to writing Mystery Hunt puzzles.

- Decide on a theme.

- Figure out the major story beats that you want in the Hunt.

- In parallel, write metapuzzles off the critical path of story. Ideally, your major story beats are tied to metapuzzles, since this connects the solving process to the narrative. Those metas block on story development, but the rest don’t have to. Think, say, Lake Eerie in Mystery Hunt 2022. Good round? Absolutely! Was its answer critical to the story of that Hunt? No, not in the way that The Investigation was.

- Once the major story beats are decided, start writing the metas for story-critical answers.

- Whenever a meta finishes testsolving, release all its feeder answers.

- When all your metas and feeders are done, you’ve written all the puzzles of Hunt!

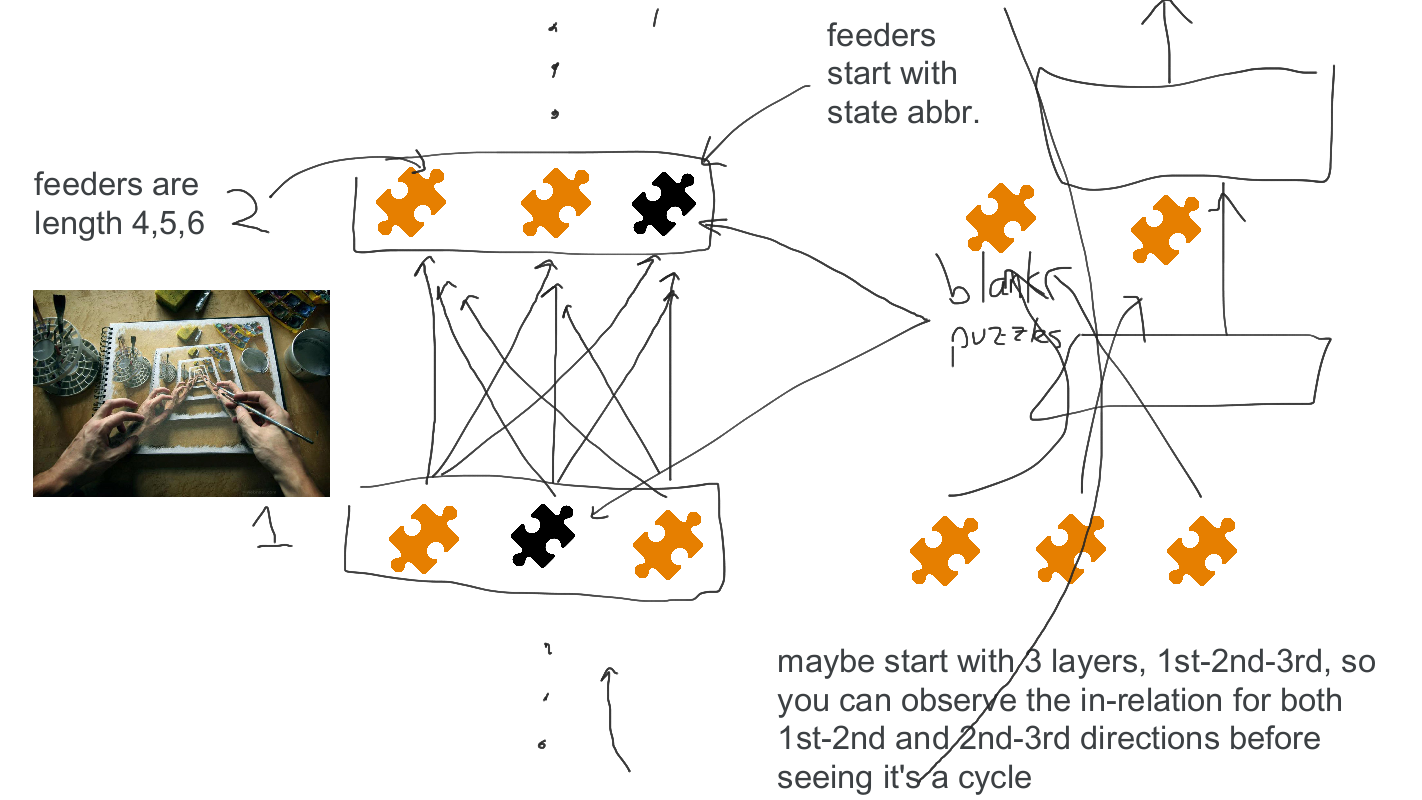

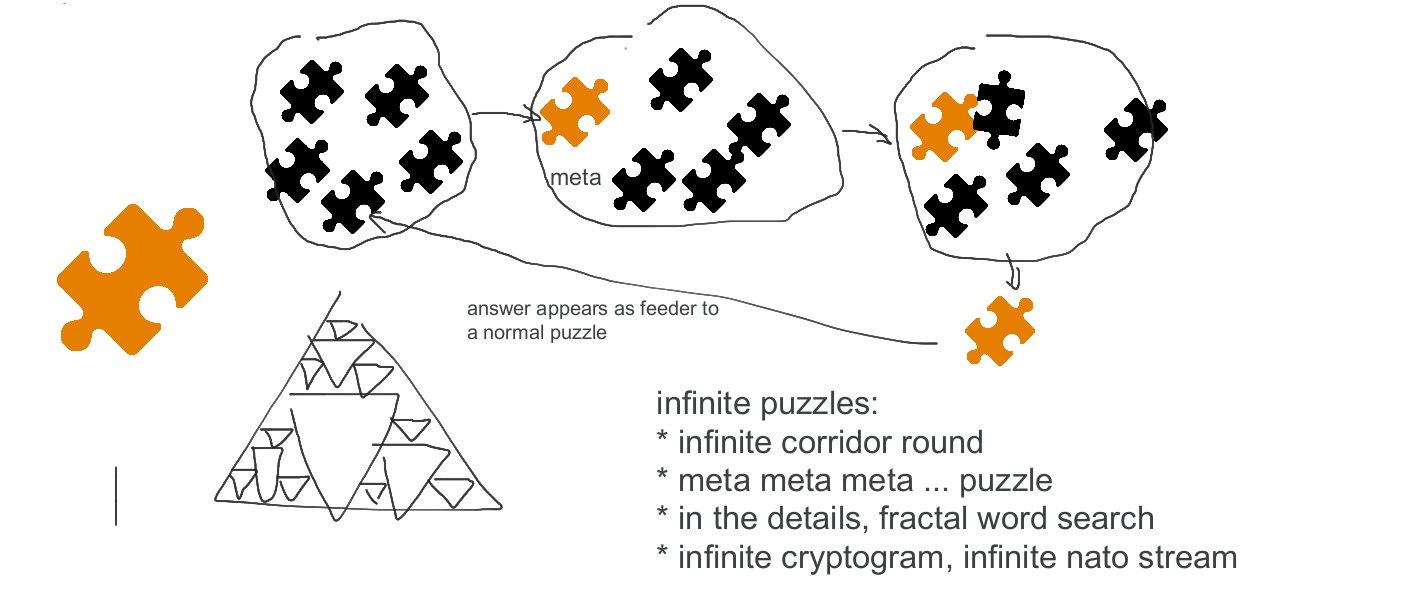

Mystery Hunt writing is very fundamentally an exercise in running out of time, so everything that can be done in parallel should be done in parallel. Interestingly, for Mystery Hunt 2023, that means the AI rounds were ideated first, because their answers were not story-critical, whereas the Museum and Factory metas were. Moving the AI rounds off the critical path was a good idea, since AI rounds were gimmicked for story reasons, which made them harder to design.

There weren’t too many guidelines on AI round proposals. They had to have a gimmick, a theme, and a sample meta answer that could be phrased as a feature request. Besides that, anything went. It turns out asking teammate to come up with crazy round ideas is pretty easy! In teammate parlance, an “illegal” puzzle is a puzzle that breaks what you expect a puzzle to be, and we like them a lot. We ended up with around 15 proposals.

The difficult part was doing the work to decide if an idea that sounded cool on paper would actually work in reality. One of my hobbies is Magic: the Gathering, and this issue comes up in custom Magic card design all the time. Very often, someone will create a card that tells a joke, or makes a cute reference, and it’s cool to read. But if it were turned into a real card, the joke wouldn’t convert into fun gameplay. Similarly, we needed to find the line between round gimmicks that could support interesting puzzles, and round gimmicks that could not.

For example, one of my round proposals was a round where every puzzle was contained entirely in its title. It would involve doing some incredibly illegal things, like “the puzzle title is an animated GIF” or “the puzzle title changes whenever you refresh the page”. There was some interest, but as soon as we sat down to design the thing, we realized the problem was that it was practically impossible to write the meta without designing the title for every feeder at the same time. The gimmick forced way too many constraints way too fast. So, the proposal died in a few hours, and as far as I’m concerned it should stay that way.

There was a time loop proposal, where the round would periodically reset itself, you’d unlock different puzzles depending on what choices you made (what puzzles you solved), and the meta would be based on engineering a “perfect run”. This idea lost steam. Given what puzzlehunts happened in 2022, this was really for the best.

In one brainstorming session, I off-handedly mentioned a Machine of Death short story I read long ago. In it, the brain scan of a Chinese woman named 愛 is confused with the backup of an AI, since both files were named “ai”. I didn’t think much of it at the time, but many people in that session went on to lead the Eye round, and I’d like to think I had some tiny contribution to that round.

The main round I got involved with was “Inset”, which you know as Wyrm. But we’ll get to that later.

A few weeks into this process, team leads announce that the four major story beats have been determined. They are designed to be discoverable in any order, and we need to deliver on metas for each.

- MATE is overworked.

- There are multiple AIs.

- teammate discarded all AIs except for MATE.

- Remnants of the AIs are still causing strange things in the Mystery Hunt.

It’s not known where all of these will appear, but some will for-sure be standalone metas in the Factory. We split into groups to brainstorm those, and the group I was in came up with:

The Filing Cabinet

Puzzle Link: here

I’m not sure how people normally come up with meta puns. What I do is use RhymeZone to look up rhymes and near-rhymes, then bounce back and forth until something good comes out. The brainstorm group I was in was focused on the “multiple AIs” story point. Looking for rhymes on “multiple” and “mate”, we found “penultimate”.

At which point Patrick proclaimed, “Oh, this puzzle writes itself! We’ll find a bunch of lists, give a thing in each list, and extract using the penultimate letter of the penultimate thing from each list.”

And, in fact, the puzzle did write itself! Well, the idea did. The execution took a while to hammer out. A rule of thumb is that there’s a 10:1 ratio for raw materials to final product in creative endeavors, and that held true here too. The final puzzle uses 16 feeders, and this was sourced from around 140 different lists. Our aim was to balance out the categories used, which specifically meant not all music, not all literature, not all TV, and not all things you’d consider a well-known list (like the eight planets). Lists were further filtered down to interesting phrases that ideally wouldn’t need to be spelled letter by letter, while still uniquely identifying their list from a single entry. The last point was the real killer of most lists. I liked Ben Franklin’s list of 13 virtues, but the words ended up being too generic.

Despite having the entire world as reference material, some letters (especially the Ps) were really difficult to find. I remember arguing against SOLID YELLOW for a while, saying its extract entry was ambiguous between “green stripe” and “striped green” no matter what Wikipedia said, but didn’t find a good enough replacement in the 20 minutes I spent looking for an alternative, and decided I didn’t care enough to argue more.

I feel every puzzle author relishes an opportunity to shoehorn their personal interests into a puzzle, and this was a good puzzle for doing that. FISH WHISPERER did not make the cut, but I knew it had zero chance of clearing the notability bar. MY VERY BEST FRIEND was a funny answer line that got bulldozed in the quest to fit at least one train station into the puzzle, which was harder than you’d think. Not a great showing for stealth inserts, but I’m happy WAR STORIES stuck around until the end.

Also, have a link to some Santa’s reindeer fanart and fanfiction. Testsolvers cited it as a source for “Olive is Santa’s 10th reindeer”, a mondegreen from people who misheard the classic song as “Olive the other reindeer used to laugh and call him names”.

April 2022

Round and Round and Round and Round!

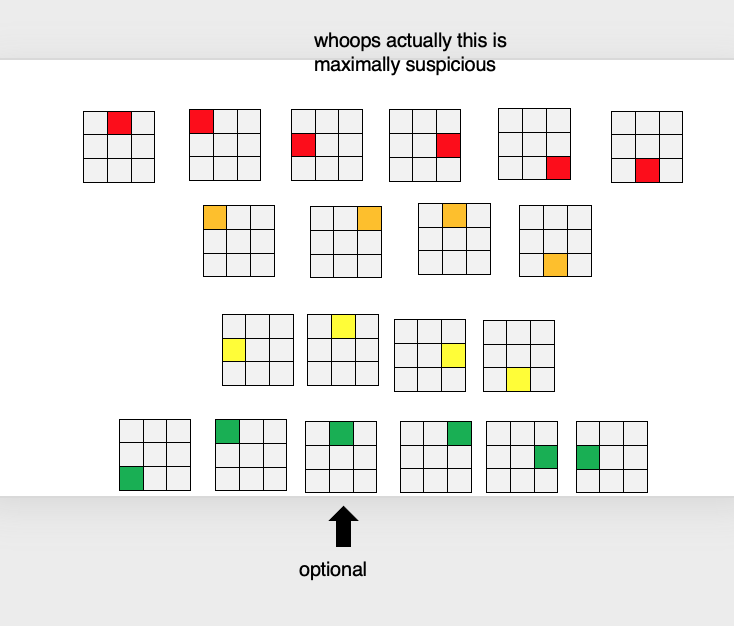

![]()

It’s Wyrm time!

Wyrm took quite a while to come together, but was started in earnest around April. From the start, the round proposal was “really cool fractal art”, and the design around it was figuring out what an infinitely zooming fractal round could look like. This started with the metameta.

Period of Wyrm

Puzzle Link: here

Really, I did not do much on this puzzle. The mechanics stayed the same throughout all testsolves. I testsolved the initial version, and my contributions afterwards were on searching for feeders during round writing. I also wrote a script to auto-search for equations that would give a desired period. The script limited its search to linear functions, which was good enough most of the time.

I learned a lot about how Mandelbrot periods work during this puzzle. Although we suspected that writing code would be the way most teams solved this puzzle, we wanted the puzzle to support non-coding solutions, given it was required to finish the Hunt. A decent chunk of time was spent sanity checking the puzzle in online Mandelbrot set exploration tools.

Mandelbrot set periods are based on the “bulbs” along the outer border of the set. The central cardioid has period 1, and bulbs of any period can be found along the cardioid. Each bulb is self-similar to the original set, so instead of only going around the central heart, you can use the bulb of a bulb. For example, to get a period of 6, you either use a 6-bulb, or a 3-bulb branching off a 2-bulb, or a 2-bulb branching off a 3-bulb. Long story short, composite periods are easier than prime ones, which had a nontrivial effect on choosing what pun to use.

Qualitatively, the period converges faster if the point is towards the middle of the bulb, so we tried to do that when possible. We also aimed to use the largest bulb per period to reduce precision needed to solve the puzzle, and spread the points across the border of the Mandelbrot set, a holdover from an earlier version of the puzzle that hinted the Mandelbrot set less strongly.

The metameta was very deliberately designed to be flexible enough for any answer, as long as we had a good enough set of categories, since we expected to have a lot of constraints from the future metas.

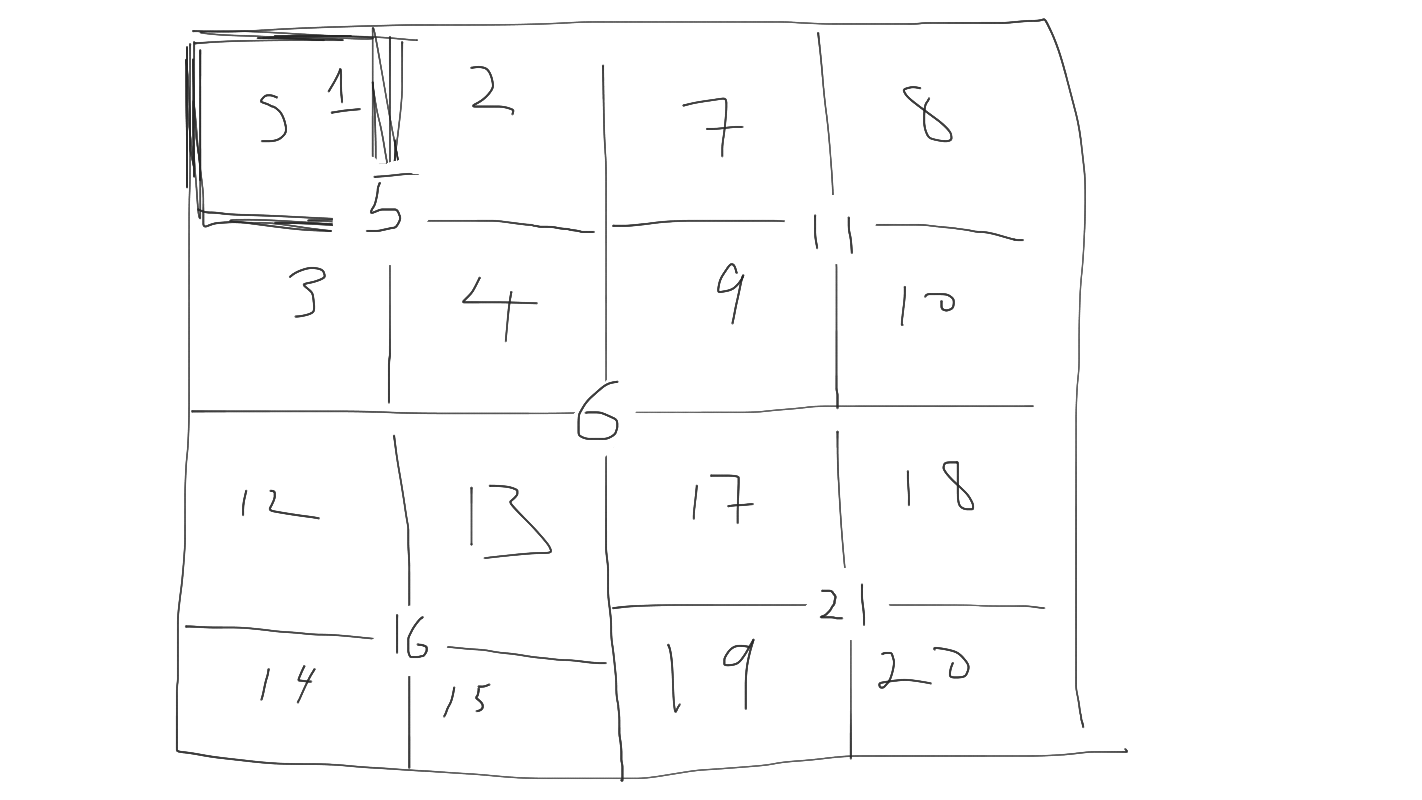

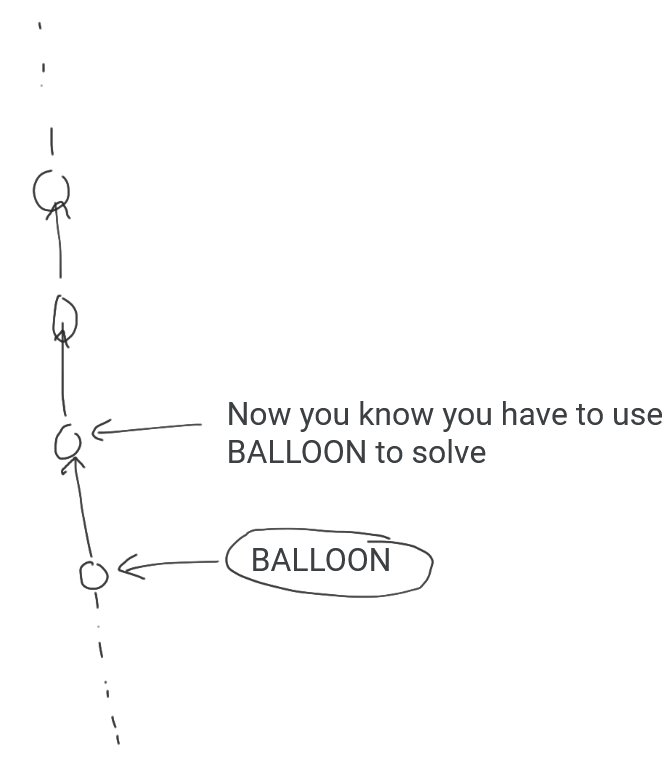

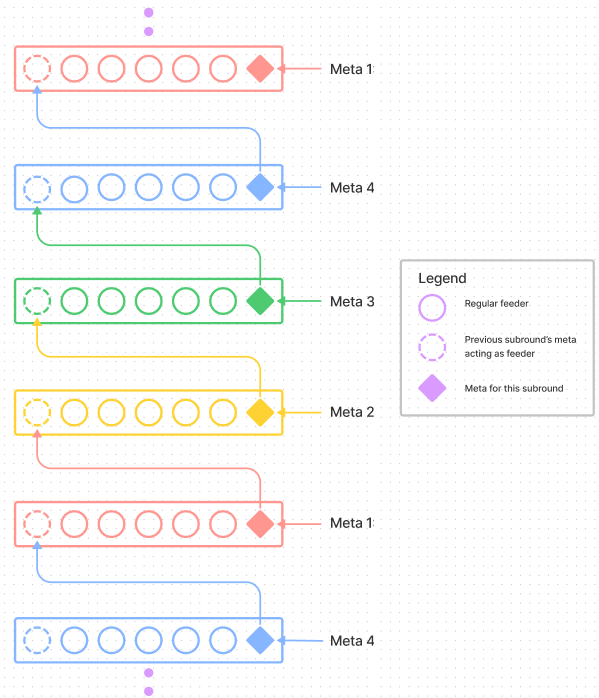

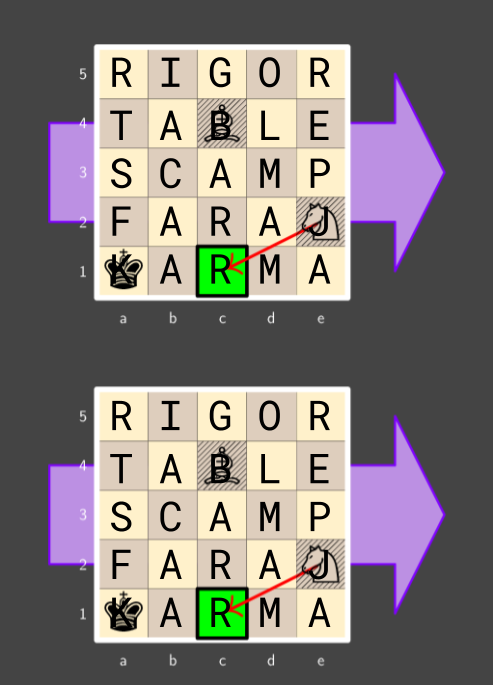

The round structure went through multiple iterations, done over Jamboard. Here’s a version where every puzzle would be 1/4th of a future puzzle:

Here’s one where every puzzle’s answer would depend on answers from the previous layer, such that one puzzle could be backsolved per layer.

And here’s one where the entire round would be serial, each puzzle would rely on the previous puzzle’s answer, and you’d need to figure out how to bootstrap from nothing to solve the entire round.

Most of these ideas would have been quite tricky to pull off, especially given we needed to fit it within the Period of Wyrm constraints. This led to the cyclic round structure proposal. Each layer would be normal puzzles, building to a meta, which would then be 1 pre-solved feeder for the next layer’s meta. The rounds would then form a cycle, where the last meta would be a feeder in the first layer. This restricted the “weirdness” to just the metas of each layer, and all regular feeders in each layer could be written without constraints besides the answer. The zoom direction of moving outwards rather than inwards was done to make it more distinct from ⊥IW.giga.

Our first plan was to have one unsolvable puzzle in the first layer of puzzles, that would become solvable once you got to the last layer of puzzles. This idea got discarded pretty early because it didn’t feel very impactful, it seemed hard to guarantee that a puzzle couldn’t be backsolved, and giving teams an unsolvable puzzle would be pretty rude. That led to the road of creating a metapuzzle disguised as a feeder puzzle, solvable from 0 feeders but still allowing for backsolving of feeders. Figuring out exactly what that meant would be a future problem.

Since I didn’t have any leadership responsibilities, and tech was still on the slow side, I ended up self-assigning myself a lot of work in brainstorming metas that fit the answer constraints. I noted that our Hunt had a lot of similarities to Mystery Hunt 2018: a goal to reduce raw puzzle counts, and including complex meta structures in their stead. As homework, I spent a lot of time reading through the solutions for both the Sci-Fi round and Pokemon round from Mystery Hunt 2018, since they also had overlapping constraints between metas and metametas. Going through each solution several times, I started to appreciate some 2018 metas that I found dull at the time, but which made the round construction possible when viewed through a constructor’s lens.

You can read more about the Wyrm answer design process in an AMA reply I wrote here. The short version is that all metas range between using answers semantically and using answers syntactically. The Period of Wyrm metameta forced semantic constraints, and the first Wyrm meta written (Lost at Sea) also used semantic constraints. This forced the remaining metas to be syntax based.

Over a few months, all the Wyrm metas were drafted and tested in parallel, using one central coordination spreadsheet to track the metameta categories used. Around 60 different categories were considered for the metameta, of which 13 were used, so more like a 5:1 ratio instead of a 10:1 ratio. Feeders were constantly shuffled between metas as we found better answers that satisfied the constraints, or changed meta designs to loosen their constraints enough to make feeders work.

We knew early on that some categories would be fixed. The category that led to INCEPTION was just too good as a “teaser” answer for the rest of the round, and got quickly locked in as the answer to Wyrm’s first layer. The FELLOWSHIP and EYE OF PROVIDENCE categories were locked in early as well, to fit the meta they went towards. My favorite category that didn’t make it was “Socialist”, for Social Security Numbers, using MONTGOMERY BURNS and TODD DAVIS. It got cut because we decided TODD DAVIS was a bit ambiguous with an “Athlete” category we were considering, and larger numbers would have forced awkward equations in the meta. Too bad, the juxtaposition of two capitalists getting labeled “Socialists” would have been great.

The other category we wanted to force was Hausdorff, since it was too thematic to not use. We wanted as many context clues pointing to fractals as possible, to reinforce the flavor of the round structure and metameta.

I factchecked the Hausdorff answers, which was a fun time. I’ll quote my despair directly.

aw man why have so many recreational math people tried to estimate the dimension of [broccoli] and cauliflower

[their] values are like +/- 0.2 the value from wikipedia

but that value is based on some paper someone put on arxiv in 2008 with 4 citations

put some notes in the sheet but in summary, of the real-world fractals, the most canonical ones are

1) the coastline based ones, because they were so lengthy that only 1 group of people really bothered estimating them.

2) “balls of crumpled paper”, which is usually estimated at dimension 2.5 and I found a few different sites that repeat the same number (along with 1 site that didn’t but the one that didn’t was purely experimental whereas the wikipedia argument is a bit more principled)

When I checked deeper, I found that the coastline paradox is well-known enough that multiple groups have checked the dimension of coastlines, getting different results, so those aren’t canonical either. The only one that was consistent was Great Britain, whose dimension of 1.25 is repeated in both the original paper by Benoit Mandelbrot and all other online sources I could find.

In my experience, factchecking is the most underappreciated part of the puzzle writing process. The aim of factchecking is to make sure that every clue in the puzzle is true, and only has one unique solution. The first is easy, the second is hard. Even with the puzzle solution in hand, it can take a long time to check uniqueness, on par with solving the puzzle forward. Although Wikipedia is the most likely source for puzzle information, Wikipedia isn’t always correct, and it’s important to verify all reasonable sources share consensus. You never know what wild source a puzzler will use during Hunt.

Sometimes, that consensus can be wrong and you still have to go with it for the sake of solvability! See Author’s Notes for Hibernating and Flying South from GPH 2022 for an example, or the Author’s Notes for Museum Rules from Mystery Hunt 2023. It’s unfortunate to propagate falsehoods, but sometimes that’s how it goes.

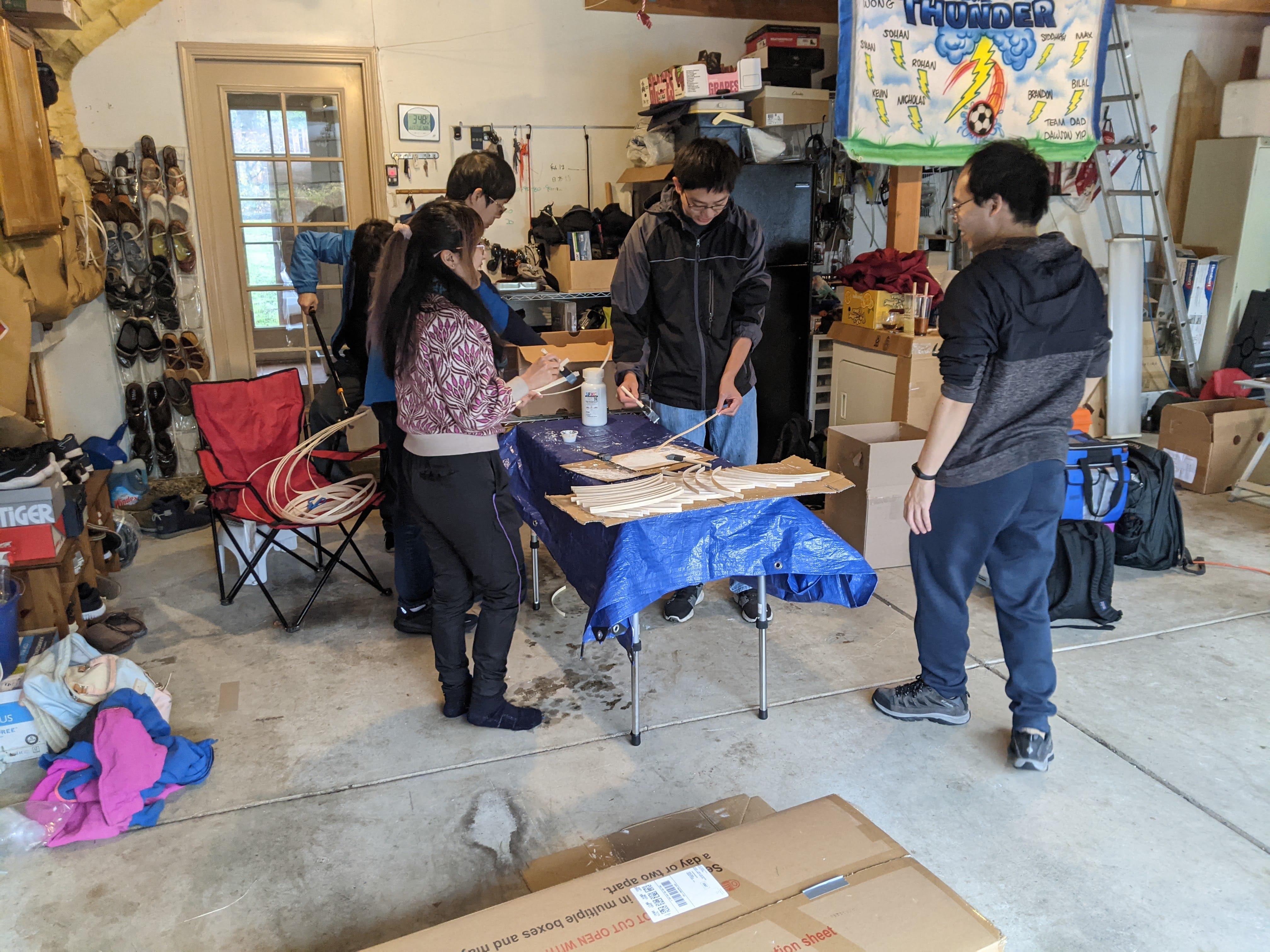

A Bay Area Meetup

teammate has people all over, with rough hubs around the Bay Area, Seattle, and New York. We held meetups at each hub in April, for anyone who felt the COVID exposure was within their risk tolerance.

We started brainstorming Weaver at the Bay Area meetup. Much of the work would be done later, but this meetup was the first time Brian mentioned wanting to make an underwater basket weaving puzzle, using special hydrochromic ink that dried white and became transparent when wet. The idea sounded super cool, so we did some brainstorming around what the mechanics should be (different weaving patterns, presumably), as well as some exploration into the costs. I then found an Amazon review.

I’ve tried a bunch of hydrochromic paints and they’re all kinda like this one. It’s a fun idea in theory – a paint that goes on white when dry and turns clear when wet, so you can reveal something fun on your shower tile or umbrella or sidewalk.

But… it doesn’t work great. It takes a pretty thick set of coats to actually hide (when dry) what’s underneath, and that makes it prone to cracking, and also not entirely transparent (more like translucent) when wet. It’s hard to get the thickness just right. Mixing some pigment into the hydrochromic helps a bit but adds a tint when wet. And even aside from all that it’s not very durable paint, it’s kind of powdery and scratches off. And you can’t add a top-coat, otherwise the water won’t get to it.

You can make it work, we did make it work for a puzzle application (invitation cards that reveal a secret design when wet) but I’d prefer not to use it again.

The Amazon review was written by Daniel Egnor. For those who don’t know, Dan Egnor runs Puzzle Hunt Calendar. This was easily the most helpful Amazon review I’ve ever seen.

Unfortunately, it suggested our idea was dead in the water (pun intended). This was super sad, but underwater basket weaving was too compelling to discard entirely, so Brian ordered some paint to experiment with later.

We then shifted gears to writing puzzles for the newly released Factory feeder answers, finishing a draft of:

Broken Wheel

Puzzle Link: here

This is one of those puzzles generated entirely from the puzzle answer. There were a few half-serious proposals about treating the answer as PSY CLONE and doing a Gangnam Style shitpost, but they died after I said “It’s been done”.

Alright, what is a Psyclone? There are two amusement park rides named the Psyclone, one of which is a spinning ring. How about a circular crossword that spins? That naturally led to the rotation mechanic. There were some concerns about constraints, but I cited Remy from Mystery Hunt 2022 to argue that it’d be okay to not check every square of the crossword. The entire first draft was written in a few hours, since we had a lot of people and it was very easy to construct in parallel. I guess that shouldn’t be surprising, since crosswords are easy to solve in parallel too.

Enumerations were added in the middle of the first testsolve because it was too hard to get started without them. As for the final rotation, we went through many iterations of flavortext and clue highlighting, before settling on placing the important clue first and mentioning “Perhaps they can be rotated” directly in the flavortext. “Rotated” in particular (over “spin” or “realigned”) seemed to be the magic word that got testers thinking about the right idea. It was a good reminder of how much subconscious processing people do in puzzlehunts.

“I Have a Conspiracy”

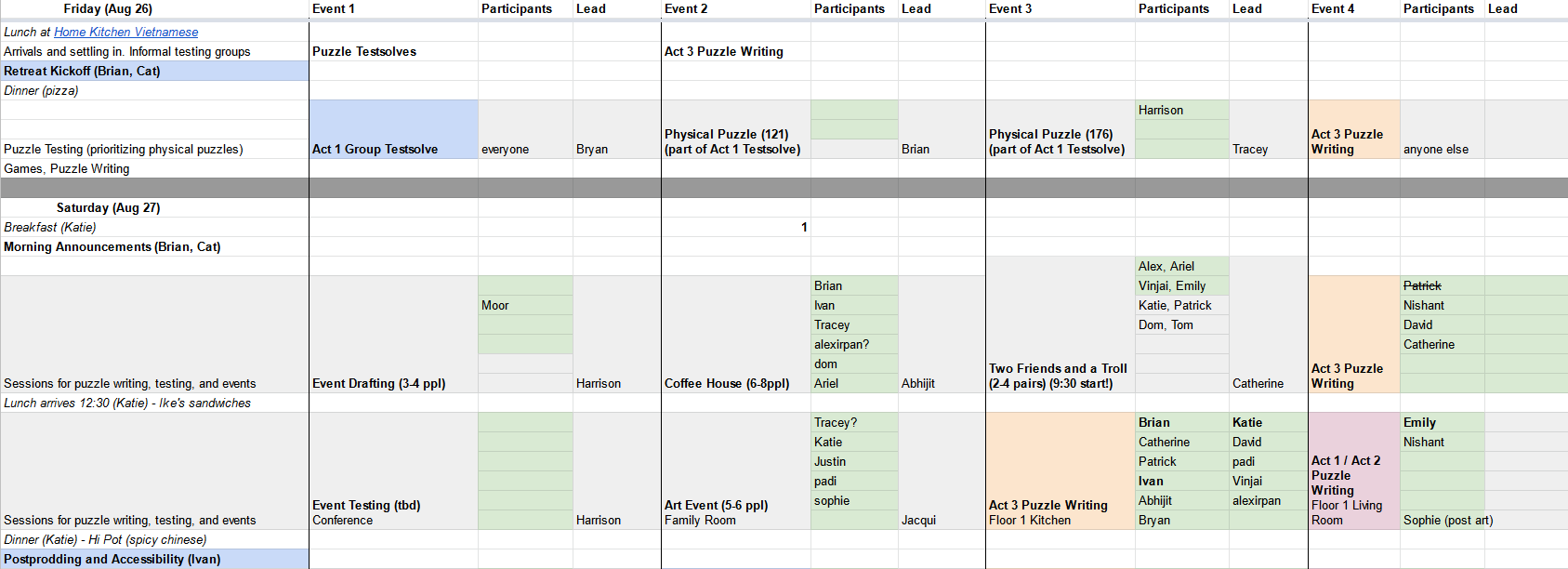

By late April, the round structure of Hunt had solidified.

- Five Museum rounds, that will combine into one metameta where both the metas and feeders are important. The metameta will deliver one story beat.

- Three Factory rounds, one of which will be about solvers “creating their own round” (this would later evolve into the Hall of Innovation). These will deliver the other three story beats.

- Four AI rounds, where the four AIs are locked in as Wyrm, Boötes, Eye, and Conjuri. There wasn’t a formal selection process for this, it was more that team effort needed to be directed elsewhere, and those were the four ideas with the most partial progress.

That gave around 150-160 puzzles. The writing team for teammate was around 50 people at the time and we thought we’d literally die if we tried to write a 190+ puzzle hunt.

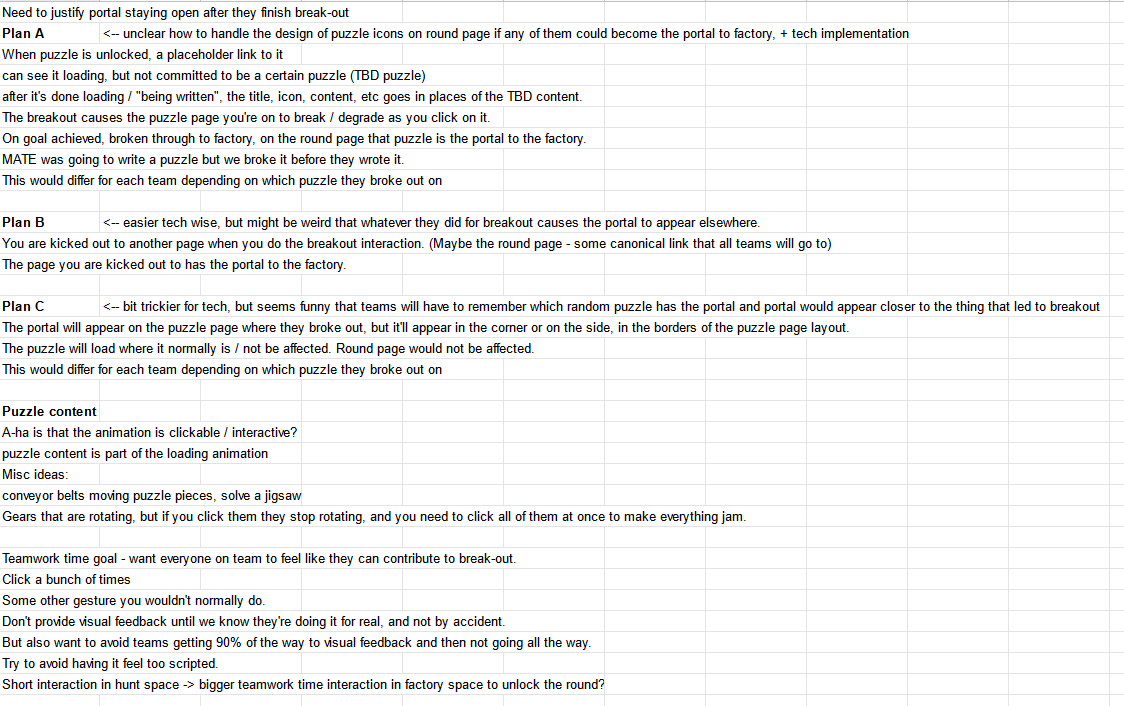

There were two lingering problems. One, the Wyrm round was significantly larger than all the other AI rounds. Two, the story team was figuring out details of the midpoint capstone interaction. At the midpoint of the Hunt,

- Solvers should reactivate the old AIs.

- This causes teammate to shut down the Puzzle Factory and Mystery Hunt.

- Solvers should then start powering up the Factory by solving some puzzles.

- That is just enough to wake up the old AIs, who start writing their own puzzles, letting teams continue powering up the Factory until endgame.

The question is, what are the puzzles in step 3? This hadn’t been determined yet.

The Wyrm round authors and Museum metameta authors were gathered into a meeting with the editors-in-chief (EICs) and creative leads for a conspiracy: what if Act I feeders from the Museum repeated in Wyrm’s round?

This proposal filled a lot of holes.

- Wyrm’s round would be 6 puzzles shorter, bringing its size in line with other AI rounds. Between the repeated puzzles and backsolved puzzles, there would be around 13 “real” puzzles left.

- The reused feeders could become the puzzles in step 3. It would be reasonable for solvers to find copies of Museum puzzles within the Factory, since the Factory created puzzles for the Museum.

- The gimmick for Wyrm was in the structure, not the feeder answers. Out of the four AI rounds, it was the one most suited to repeated feeders.

- The overall hunt would require 6 fewer puzzles to write. The target deadline for finishing all puzzles was December 1st, and we were behind schedule. Reducing feeders was one way to catch up.

- If we could make that set of feeders fit 4 meta constraints (Museum meta, Museum metameta, Wyrm meta, Wyrm metameta), it’d be really cool.

Making this happen would be quite hard. The first step was Wyrm authors testsolving the Museum metameta, so that we knew what we’d be signing up for. After getting spoiled on MATE’s META. we discussed whether this was ambitious-but-doable, or too ambitious. It seemed very close to too ambitious, but we decided to go for it with a backup option of reversing the decision if it ended up being impossible.

It made it to the final Hunt, so we did pull it off. I’m happy about that, but given a do-over I would have argued against this more strongly. First of all, I don’t think many solvers really noticed the overlapping constraints. It leaned too hard towards “showing your team can solve an interesting design problem” without a big enough “fun” or “wow” payoff. (In contrast, the gimmicks of the AI rounds are much more obvious and easy to appreciate.)

The more problematic issue that was not clear until later was the way it delayed feeder release. Here is the rough state of Hunt at this time.

- The Office meta is done and its feeders are released.

- The Basement meta will go through more testsolving when the final art assets are in, but is essentially finalized and its feeders are released.

- All AI rounds are in the middle of design and are not ready to release feeders.

- Innovation and Factory Floor is doing its own crazy thing, and won’t be ready for some time.

In short, there were 2 rounds of feeders open for writing, and every other round was not. It is already known that Boötes and Eye will have answer gimmicks that make their puzzles harder to write, and Conjuri feeders will likely be released quite late since the meta relies on game development for Conjuri’s Quest.

The status quo is that all the Museum feeders can’t be released until five Museum metas pass testsolving, and all the Wyrm feeders can’t be released until four Wyrm metas pass testsolving. Including retests of both metametas with their final answers, this is 6 metas blocking Museum and 5 metas blocking Wyrm. Repeating feeders between Museum and Wyrm literally turned it up to 11 metas blocking both sets of feeders. There were 53 feeders in that pool, about 40% of the feeders in the whole Hunt.

Most of the non-gimmicked feeders were in that pool as well, leaving fewer slots for people who just wanted to write a normal puzzle. I don’t have any numbers on whether teammate writers were more interested in writing regular puzzles or gimmicked puzzles, but I suspect most of the newer writers wanted to write puzzles with regular answers, and did not have as much to do while the metas were getting worked out. (There was an announcement to keep working on puzzle ideas before feeder release, but it is certainly easier to maintain motivation if you have a feeder answer you’re working towards.)

I’d estimate that the extra design constraints from repeating feeders delayed the release of that pool of 53 feeders by 2-4 weeks. It forced more work on Museum meta designers who already needed to fit their meta pun and feeders into the metameta mechanic. Perhaps in a more typical Hunt, this would have been fine, but the AI rounds had already spent a lot of complexity budget and this probably put us in complexity debt.

But, this is all said with hindsight. At the time, I did not realize the consequences and I’m not sure anyone else did either. It did genuinely fix problems in the Hunt and story structure, it’s just there were other ways to fix them that would have had fewer bad side effects.

The Legend

Puzzle Link: here

Part of the deal for accepting the repeated feeders constraint was that editors-in-chief signed up to help design and push the Wyrm and Museum metas. The Legend was the meta brainstormed to take all the repeated feeders, and needed to be able to take pretty much any set of answers.

People say “restrictions breed creativity”. That’s true, but what they don’t say is that meeting those restrictions is not necessarily fun. It’s work. Rewarding and interesting work, but still work.

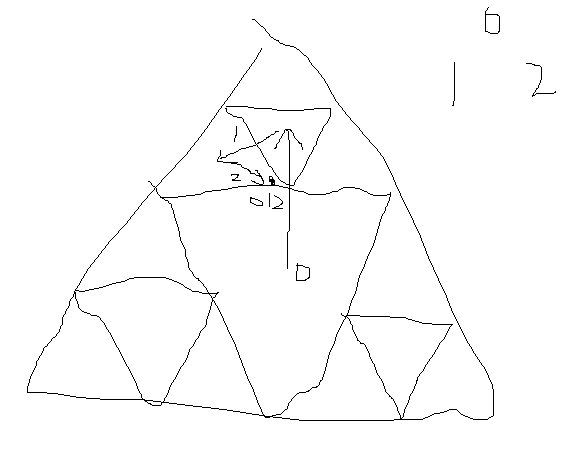

Before the decision to repeat feeders, I had sketched some ideas around using the Sierpinski triangle, after noticing INCEPTION was \(9 = 3^2\) letters long. The shape is most commonly associated with Zelda in pop culture, so ideas naturally flowed that way.

The early prototype associated one feeder to each triangle, extracting letters via Zelda lore. It was reference heavy and not too satisfying. After talking with Patrick a bit, he proposed turning it into a logic puzzle, by scaling up to 27 triangles, giving letters directly, and having feeders appear as paths in an assembled fractal.

This was especially appealing because it meant we could take almost any feeders, as long as they didn’t have double letters and their total length was around 50-70 letters. Brian, who was spoiled on some of the Museum metas, mentioned that TRIFORCE was a plausible answer for both the Museum and Wyrm metameta, so if we could make the Sierpinski idea work, we could do a “triangle shitpost” by making TRIFORCE the looping answer for the round.

Cool! One small problem: I’ve never written a logic puzzle in my life.

There are two approaches to writing a logic puzzle.

- Start with an empty grid and an idea for the key logical steps you want the puzzle to use. Place a small number of given clues, then solve the logic puzzle forward until you can’t make any more deductions. Add the given clue you wish you had to constrain possibilities, then solve forward again. Repeat until you’ve filled the entire grid. Then remove everything except the givens you placed along the way, and check it solves correctly.

- Implement the rules of the logic puzzle in code, and computer generate a solution.

Option 1 tends to be favored by logic puzzle fans. By starting from an empty grid, you essentially create the solve path as you go, and this makes it easier to design cool a-has.

Option 2 makes it way easier to mass produce puzzles if, say, you’re running a newspaper and want to have one Sudoku in every issue. Puzzle snobs may call this “computer generated crap” because after you’ve done a few computer generated puzzles within a given genre, you are usually going through the motions.

I knew I was going to eventually want a solver to verify uniqueness. So I went with option 2.

I have some familiarity with writing logic puzzle solvers in Z3, since I like starting logic puzzles but am quite bad at finishing them. My plan was to use grilops, but I found it didn’t support the custom grid shapes I wanted. Instead, I referred to the grilops implementation for how to encode path constraints, then wrote it myself.

Figuring out how to represent a Sierpinski triangle grid in code was a bit of a trip. The solution I arrived at was pretty cool.

- A Sierpinski triangle of size 1 is a single triangle with points \(0, 1, 2\).

- A Sierpinski triangle of size 2 is three triangles with points \((0,0), (0,1), (0,2), (1,0), (1,1)\), and so on up to \((2,2)\).

- A Sierpinski triangle of size N is three Sierpinski triangles of size N-1. Points are elements of \(\{0,1,2\}^N\). The first entry decides which N-1 triangle you recurse into and the rest describe your position in the smaller triangle.

Checking if two points are neighbors can then also be checked recursively.

- If their first entries are the same, chop off the first entry and recursively check if the points are neighbors in the triangle one level smaller.

- If their first entries differ, they are in different top-level triangles, and the only three cases are \(0111-1110\), \(0222-2000\), and \(1222-2111\).

The first draft of my code took 3 hours to generate a puzzle, and the uniqueness check failed to finish when left overnight. Still, when I sent it to editors, they were able to find the same solution my code did by hand, so we sent it to testsolving to get early feedback while I worked on improving my solver.

Testsolving went well. The first testsolve took pretty much exactly as long as we wanted it to (2 hours with 5/6 feeders), and solvers were able to use the Sierpinski structure to derive local deductions that combined into the final grid. Not too bad for a computer generated puzzle! This was very much a case of “getting lucky”, where we discovered a logic puzzle format constrained enough that the solve path naturally felt a bit like a designed one.

I tried alternate means of encoding the constraint that every small triangle had to come from the given set of 27, and every triangle needed to be used exactly once. Swapping some ugly and-or clauses into if-else clauses got Z3 to generate fills 10x faster, but when switched to solve mode, it still failed to verify uniqueness.

At this point I decided to step in and mess around with different fills by hand. The goal was to minimize the number of triangles where 2+ letters were contributing to extraction. There were around 5 different fills with different feeder lists, and I suspect all of them were unique, but my solver only halted on one of them. I didn’t have much intuition for how to speed up the solver any more, so we stuck with that fill.

After that fill was found, there were only two revisions. The first was deciding how much hinting to give towards the Sierpinski triangle. This was the step with the largest leap of faith. In the end we decided to hint that the corners would touch only at vertices, and the final shape would be triangular, but no more than that.

The second revision was to make it a physical puzzle. In Mystery Hunt 2020, teammate got stuck on the final penny puzzle for 7 hours. This was quite painful, given that we had literally no other relevant puzzles to do, but at the end of Hunt we liked that we ended up with a bunch of small souvenirs that people could take home. We had already observed some struggles with spreadsheeting the triangle grid, and since The Legend was so close to the midpoint of the story, it seemed cool if we could give out physical triangles as a keepsake. They could serve double duty as puzzle aids and puzzle souvenirs.

I’m not sure if teams used the wooden triangles as a souvenir in the way we imagined, but I hope shuffling wooden triangles was more fun than manipulating spreadsheets!

May 2022

The Triangles Will Continue Until Morale Improves

The co-development of Museum and Wyrm metas was fully underway. The editors-in-chief created a “bigram marketplace” spreadsheet, listing every bigram that MATE’s META needed, along with a guess of expected bigram extraction mechanisms. All Museum and Wyrm authors coordinated over this sheet to make MATE’s META come together. Based on Museum meta drafts so far, editors ranked how tight their constraints were, and gave more constrained metas higher priority. The repeated feeders for Wyrm that we wanted in The Legend then got higher priority on top of that. Museum authors were asked to take 1-2 Wyrm answers each, to avoid concentrating them into one meta. Wyrm authors were fast-tracked to testsolves of the Museum metas, so that we could help brainstorm alternate answers that also fit the Wyrm constraints. Meanwhile, testsolves of The Legend were biased towards Museum meta authors, so that they could know a bit about what was going on. We did not expect to use each meta extraction mechanism an equal number of times, but ideally the balance is not too off-kilter.

I helped field questions from Museum meta authors. Yes, someone needs to take the answer GEOMETRIC SNOW, we know it’s not a great answer but haven’t found an alternative that fits the double O constraint. Yes, TRIFORCE can’t change. To help aid in feeder search, I wrote a script that attempted all Museum metameta bigram mechanics on all Wyrm feeder ideas so far, to see what stuck.

The feeder quality started pretty awful. After discussion with the authors of MATE’s META, editors add the glitch mechanic to that puzzle. Glitches erase parts of feeders before MATE’s META acted on them, allowing mechanics like “first + last letters” to have many more options. Feeder quality starts going back up.

Eventually this converged to a set of repeated answers for Wyrm that fit all Museum metas, and was a bit greedy at taking good bigrams, but not too greedy. As the bigram marketplace settles down and The Legend feeders get more locked in, we pivoted to dealing with our own constraints.

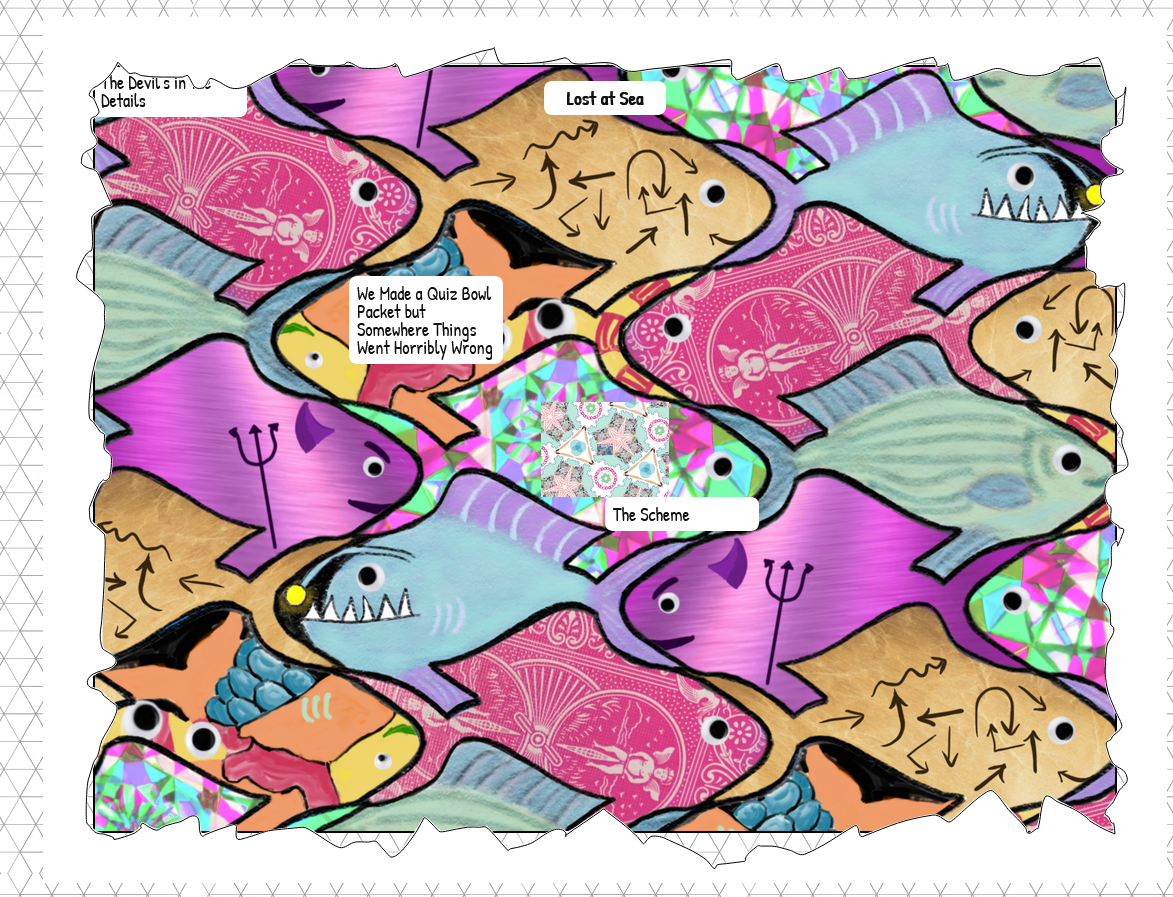

The Scheme

Puzzle Link: here

From the start of writing, we knew the meta answer was locked to EYE OF PROVIDENCE and it had to use the feeder INCEPTION in some way. There was a bit of brainstorming on whether we could exploit that 100% of teams would have the INCEPTION answer, but we did not come up with anything good.

The first serious idea I had for The Scheme was one I liked a lot. We weren’t able to make the design work, but I’m not willing to give up on the idea, so I won’t reveal it.

After that idea fell apart, we noticed that the Eye of Providence was depicted as a triangle, and we had triangles in The Legend, with a triangular looping answer, so why don’t we try to extend the triangle theming into this meta? It was a bit of a meme, but it would be cool…

When researching constrained metas, I found Voltaik Bio-Electric Cell, a triangular meta with a hefty shell that used the lengths of its feeders as a constraint. Well, we already had a 1 letter word in one of our feeders (at the time, it was V FOR VENDETTA). There was no length constraint on our feeders yet (meaning it would be easy to add one), and It seemed plausible we could make a full triangle out of words in our feeders.

We did a search for missing feeder lengths, tossed together a version that picked letters out of the triangle with indices, and sent it for editor review. I was fully expecting it to get rejected, but to my surprise, editors liked the elegance of the word triangle, and thought it was neat enough to try testsolving. (Perhaps the more accurate statement is that the editors knew the difficulty of the Wyrm constraints, most Wyrm metas we’d proposed needed shell, and this was the closest we’d gotten to a pure meta for Wyrm so far.)

The spiral index order was originally added because I was concerned a team could cheese the puzzle by taking the indices of all 720 orderings of the feeders. Doing so wouldn’t give the answer, but it seemed possible you’d get out some readable partials that could be cobbled together. With hindsight, I don’t think it was possible to get anything out of bruteforcing, but one of our testsolve groups did attempt the brute force, so we were right to consider it during design. We kept the spiral in the final version because it let the arrow diagram serve two purposes: the ordering of the numbers, and a hint towards the shape to create.

In PuzzUp, there was a list of tags we could assign to puzzles. This was to help editors gauge the puzzle balance across the Hunt. This puzzle got tagged as “Australian”. When I asked what it meant, I was told it’s shorthand for “a minimalistic puzzle, where before you have the key idea there’s little to do, and after that idea you’re essentially done.” Puzzles like this tended to appear in the Australian puzzlehunts that used to show up every year (CiSRA / SUMS / MUMS), and are a bit hit-or-miss. They hit if you get the idea and miss if you don’t.

One of the tricky parts of such puzzles is that you get exceptionally few knobs to tweak difficulty. This is a challenge of pure metas in general. The other tricky part of Australian puzzles is that solve time can have incredible variance. The first testsolve got the key idea in 20 minutes. The second testsolve got horribly stuck and was given four different flavortext + diagram combinations before getting the idea 3.5 hours later. The second group did mention the meta answer in their solve, when despairing about their increasingly bad triangle conspiracies. Watching them finish an hour later was the best payoff of dramatic irony I’ve ever seen.

Even in batch testing, where testsolvers solved The Scheme right after The Legend, no one considered using the arrangement from The Legend when solving this puzzle. A few teams got caught on this during the Hunt - sorry about that! The fact that The Legend triangle had an outer perimeter of 45 was a complete coincidence, and if we’d discovered that early enough it would have been easy to swap BRITAIN / SEA OF DECAY back to GREAT BRITAIN / SEA OF CORRUPTION to make the Scheme triangle 55 letters instead.

Maybe having an Australian puzzle as a bottlenecking meta was a bad idea. A high variance puzzle naturally means some teams will get it immediately and some teams will get walled, and getting walled on a bottleneck is a sad time. This is on my shortlist of “puzzles I’d redo from scratch” with hindsight, but at the time we decided to ship it so we could move on to other work. “You get one AREPO per puzzle” - I’d say this is the one AREPO of the Wyrm metas.

Lost at Sea

Puzzle Link: here

Nominally, I’m an author on this puzzle. In practice I did not do very much. The first version provided the cycle of ships directly, and tested okay (albeit with some grumbling about indexing with digits). By the time I joined, the work left to do was finding a way to fit in triangles, and finding answers suitable for the metameta. We were basically required to have triangles somewhere after The Scheme evolved the way it did.

I looked a lot into the Bermuda triangle, as did others, but none of us found reasonable puzzle fodder. So instead, we looked into triangular grids. Over a few rounds of iteration, the puzzle evolved from a given cycle into a triangular Yajilin puzzle where feeders were written into the grid and the Yajilin solution would give a cluephrase towards the rest of the puzzle and extraction.

It all worked, but the steps were a bit disconnected, the design was getting unwieldy, and we had a really hard time finding a way to clue all the mechanics properly in the flavortext. There were lots of facts about each ship that could be relevant, so the longer we made our flavortext, the more rabbit holes testsolvers considered. (It’s the natural response: if you get more data, then maybe you need to research more data to solve.)

My main contribution was suggesting we try removing the Yajilin entirely and brainstorm a different way to use triangular grids. The final version of the puzzle is the result of that brainstorm. I’m pretty happy with the way the puzzle guides towards the hull classification, self-confirms it with the ARB classification code being weird, and then leaves the number in it suspiciously unused if you haven’t figured out it’s important yet.

The other main contribution was helping find feeders. The first draft used MRS UNDERWOOD as a feeder, for indexing reasons. The answer worked, but was really not a good answer. The S in MRS was needed for extract, but it looked sooooooo much like a cluephrase for CLAIRE UNDERWOOD instead. Brian told me that it could be changed to just UNDERWOOD if we added a feeder that clued San Francisco, which fit the metameta, and had an S as its 5th letter. We collectively spent 5-10 hours doing a search for one, before landing on the “stories” idea with SALESFORCE TOWER or TRANSAMERICA PYRAMID. The puzzle was rewritten with SALESFORCE TOWER, went through an entire testsolve with zero issues, and then we learned that actually there are multiple Salesforce Towers in literally the final runthrough of all metas and the metameta. Oops. Thank goodness we had a backup answer!

June 2022

My Favorite Part of Collage Was When Teams Said “It’s Collaging Time” and Collaged All Over The Place

Wyrm feeders are almost ready for release. The Wyrm metas work. The shared feeders with Museum work. It all works, except for two action items:

- Write the 4th Wyrm meta.

- Do a batch solve of the entire round to get data on what it’s like to solve each meta sequentially.

We had meetings about the 4th Wyrm meta starting all the way back in March. The design requirements were pretty tight:

- The puzzle had to look like a regular Act I puzzle.

- It also needed to be interpretable as a metapuzzle.

- Feeders had to be used in enough of a way to allow for backsolving.

- At the same time, the puzzle had to be solvable without knowing any of those feeders.

The very first example for what this could look like was a printer’s devilry puzzle. Instead of each clue solving to the inserted word, inserting a word would complete a crossword clue with its own answer that you’d index from. The inserted words would then be unused information that would be revealed as the backsolved feeders once you got to the end of the round.

None of the authors were very excited by this, but it was important to prove to ourselves and editors that the design problem was solvable. Once we knew the meta answer was TRIFORCE, the idea died more officially, since TRIFORCE was 8 letters and we only had 6 feeders to work with.

Another idea proposed for this puzzle may have worked, but was basically impossible to combine with the Wyrm metameta constraints. That idea eventually turned into Word Press.

The key design challenge was that the puzzle needed to embed some process for backsolving. But, that backsolving process would look like unused information during the initial forward solve. If a team got stuck, they could rabbit hole on that unused information. The round structure of Wyrm only really worked if almost all teams solved the 4th Wyrm meta at the start of Wyrm. So, add two more constraints to the list:

- The puzzle should be very easy.

- The puzzle should be attractive enough that teams won’t skip it.

And thus our problem was harder. The ideas bounced back and forth for another few weeks, until we landed on a word web in late April. I immediately started advocating for it. Word webs are the closest thing to guaranteed fun for puzzlehunts, and were a highlight of the now-defunct Google Games. It solved our “solve from 0 feeders” problem, because spamming guesses and solving around hard nodes is just what you’re supposed to do in a word web. Teams would also be unlikely to get stuck in the word web, as long as we gave it enough redundancy, so it would avoid the risk of teams paying too much attention to the content seeded for Wyrm.

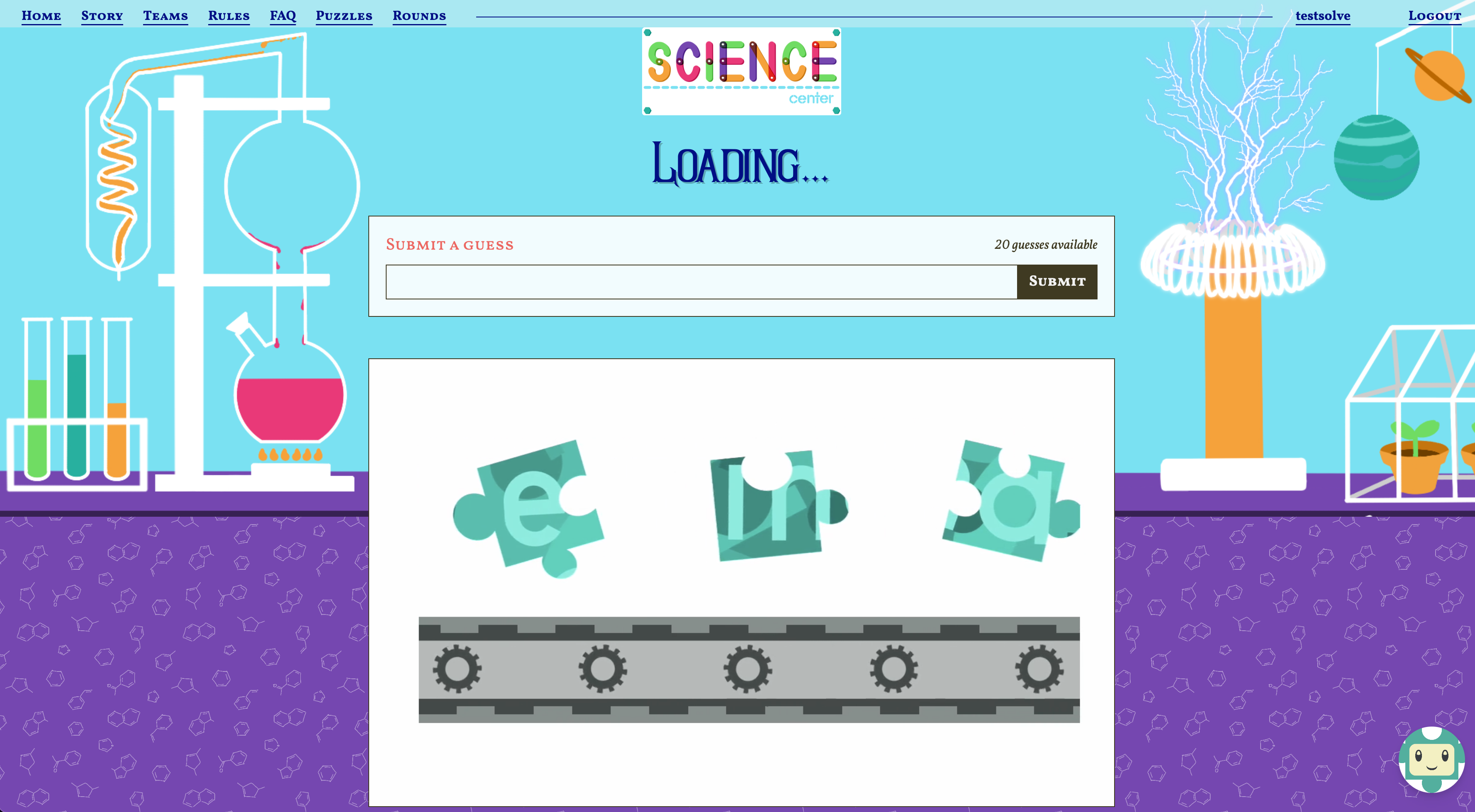

My main worry was that it’d take a while to construct. It was clear we could construct it though, so we tentatively locked it in. We punted on doing the construction itself until the other Wyrm metas were written, so that we’d know exactly what backsolve feeders we needed to seed in the web. Well, now those Wyrm metas were written. Time to reap what we’d sown.

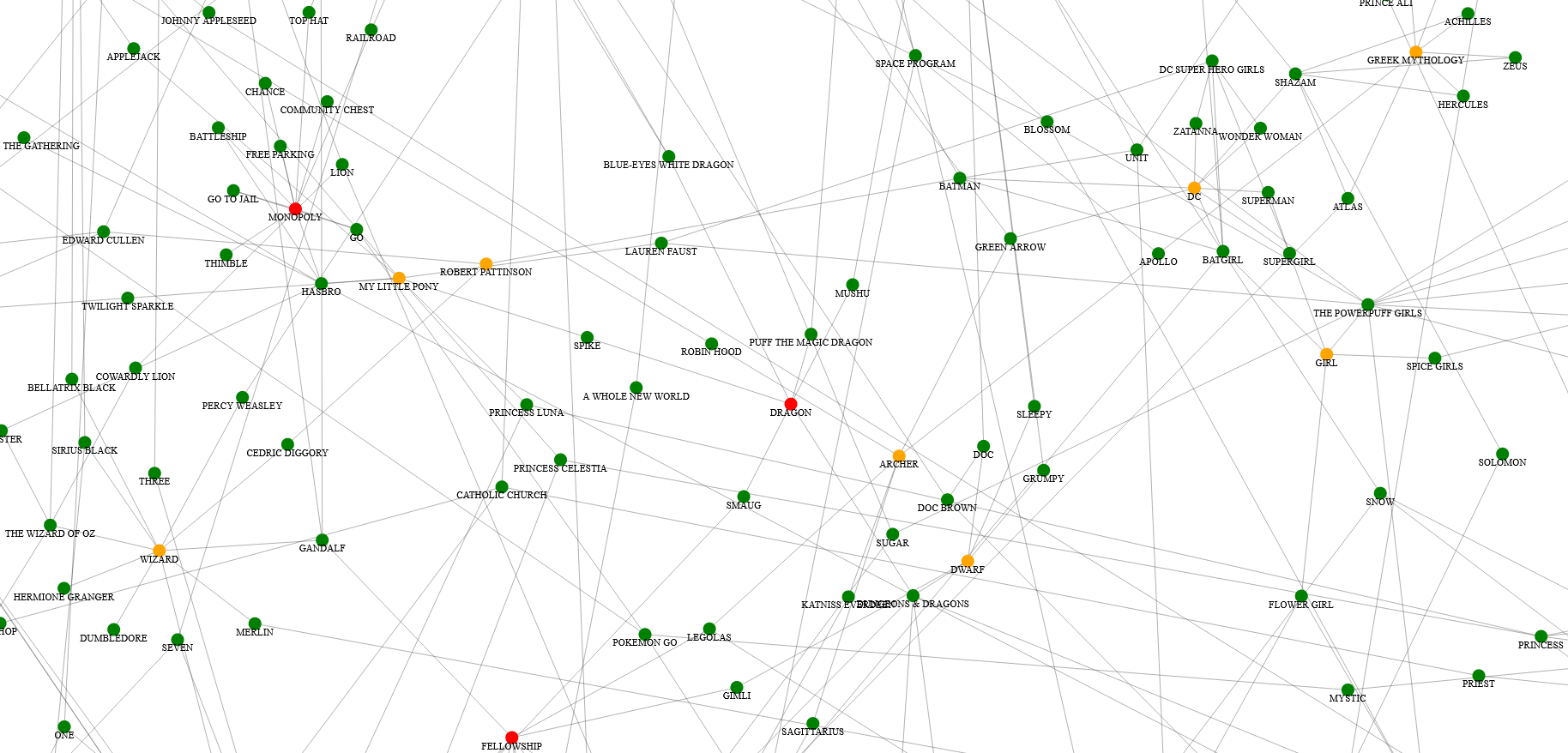

Collage

The first thing I did was reach out to David and Ivan, the authors of Word Wide Web, to see if they had any word web tools I could borrow. David sent me a D3.js based HTML page that automatically layed out a given graph, with some drag-drop functionality to adjust node positions, but told me there was no existing interactive code due to time reasons.

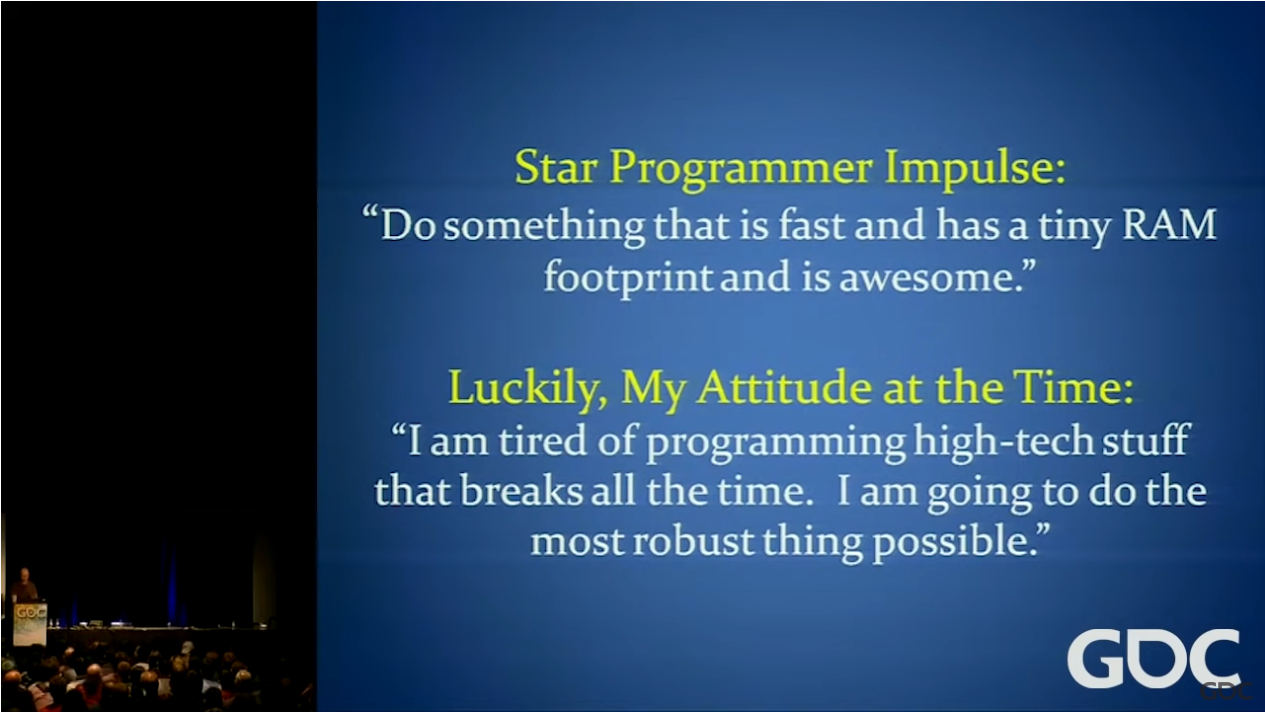

I repurposed that code to create a proof-of-concept interactive version that ran locally. This quickly exposed some important UX things to support, like showing past guesses and allowing for some alternate spellings of each answer.

For unlocks, I decided to recompute the entire web state whenever the list of solved words changed. This wasn’t the most efficient, it could have been done incrementally, but in general I believe people underestimate how fast computers can be. Programmers will see something that looks like an algorithms problem, and get nerd sniped into solving that algorithms problem, while neglecting to fix their page loading a 7 MB image file it doesn’t need to load. (If anything, the easier it is to recognize something is an algorithms problem, the less likely it is to matter. The hard parts are usually in system design.)

In short, I figured recomputing the entire graph would be more robust, and didn’t want to deal with errors caused by messing up incremental updates.

(From “The Implementation of Rewind in Braid”)

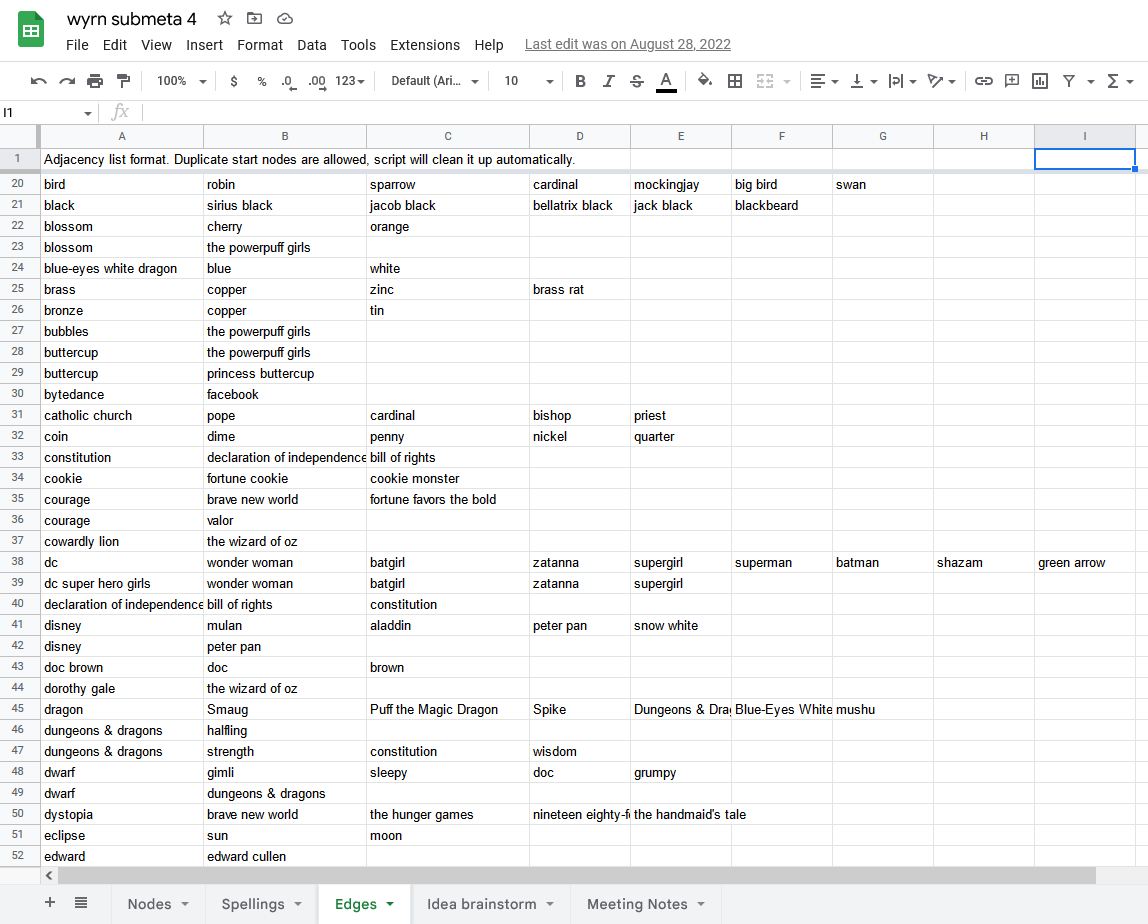

From here, I worked on creating a pipeline that could convert Google Sheets into the web layout code. The goal was to make tech literacy not be a blocker for making edits to the word web, and to make it easier to collaborate on web creation. I added a bunch of deduplication and data filtering in my code to allow the source-of-truth spreadsheet to be as messy as it wanted, which paid off. Pretty sure around 10% of the edges appear twice in the raw data.