The Argument for Contact Tracing

A few days ago, Apple and Google announced a partnership to develop an opt-in iOS and Android contact tracing app. Apple’s announcement is here, and Google’s announcement is here.

I felt it was one of the biggest reasons for optimism about both ending stay-at-home orders and maintaining control over COVID-19, assuming that people opt into it.

I also quickly realized that a bunch of people weren’t going to opt into it. Here’s my attempt to fix that.

This post covers what contact tracing is, why I believe it’s critical to handling COVID-19, and how the proposed app implements it while maintaining privacy, ensuring that neither people, nor corporations, nor governments learn personal information they don’t need to know.

As a disclaimer, I do currently work at Google, but I have no connection to the people working on this, I’m speaking in a personal capacity, and I’ve deliberately avoided looking at anything besides the public press releases.

What Is Contact Tracing?

Contact tracing is the way we trace who infected people have been in contact with. This is something that hospitals already do when a patient gets a positive test for COVID-19. The aim of contact tracing is to warn people who may be infected and asymptomatic to stay home. This cuts lines of disease transmission, slowing down the spread of the disease.

Much of this is done by hand, and would continue to be done by hand, even if contact tracing apps become widespread. Contact tracing apps are meant to help existing efforts, not replace them.

Why Is Contact Tracing So Necessary?

Stay-at-home orders are working. Curves for states that issued stay-at-home orders earlier are flatter. This is all great news.

However, the stay-at-home orders have also caused tons of economic damage. Now, to be clear, the economic damage without stay-at-home orders would have been worse. Corporate leaders and Republicans may have talked about lifting stay-at-home orders, but as relayed by Justin Wolfers, UMich Economics professor, a survey of over 40 leading economists found 0% of them agreed that lifting severe lockdowns early would decrease total economic damage.

Survey of leading economists: "Abandoning severe lockdowns at a time when the likelihood of a resurgence in infections remains high will lead to greater total economic damage than sustaining the lockdowns to eliminate the resurgence risk." 0% disagree. https://t.co/6NNAaLlSjq pic.twitter.com/7kcnVVPw2N

— Justin Wolfers (@JustinWolfers) March 29, 2020

Understand the incentives: CEO's and bankers are calling for workers to be recalled. Economists—whose models also account for what's in the workers' best interests—disagree. Epidemiologists—who understand how pandemics spread—also disagree.

— Justin Wolfers (@JustinWolfers) March 29, 2020

So, lockdowns are going to continue until there’s low risk of the disease resurging. As summarized by this Vox article, there are four endgames for this.

- Social distancing continues until cases literally go to 0, and the disease is eradicated.

- Social distancing continues until a vaccine is developed, widely distributed, and enough of the population gets it to give herd immunity.

- Social distancing continues until cases drop to a small number, and massive testing infrastructure is in place. Think millions of tests per day, enough to test literally the entire country, repeatedly, to track the course of the disease.

- Social distancing continues until cases drop to a small number, and widespread contact tracing, plus a large but less massive number of tests, is in place.

Eradication is incredibly unlikely, since the disease broke containment. Vaccines aren’t going to be widely available for about a year, because of clinical trial timelines. For testing, scaling up production and logistics is underway right now, but reaching millions of tests per day sounds hard enough that I don’t think the US can do it.

That’s where contact tracing comes in. With good contact tracing, you need fewer tests to get a good picture of where the disease is. Additionally, digital solutions can exploit what made software take over the world: once it’s ready, an app can be easily distributed to millions of people in very little time.

Vaccine development, test production, and contact tracing apps will all be done in parallel, but given the United States already has testing shortfalls, I expect contact tracing to finish first, meaning it’s the best hope for restarting the economy.

What About Privacy?

Ever since the Patriot Act, people have been wary of governments using crises as an excuse to extend their powers, and ever since 2016, people have been wary of big tech companies. So it’s understandable that people are sounding alarm bells over a collaboration between Apple, Google, and the government.

However, if you actually read the proposal for the contact tracing app, you find that

- The privacy loss is fairly minimal.

- The attacks on privacy you can execute are potentially annoying, but not catastrophic.

When you contrast this with people literally dying, the privacy loss is negligible in comparison.

Let’s start with a privacy loss that isn’t okay, to clarify the line. In South Korea, the government published personal information for COVID-19 patients. This included where they traveled, their gender, and their rough age. All this information was broadcast to everyone in the area. See this piece from The Atlantic, or this article from Nature, for more information.

Exposing this level of personal detail is entirely unnecessary. There is no change in health outcome between knowing you were near an infected person, and knowing you were near an infected person of a certain age and gender. In either case, you should self-quarantine. The South Korea model makes people lose privacy for zero gain.

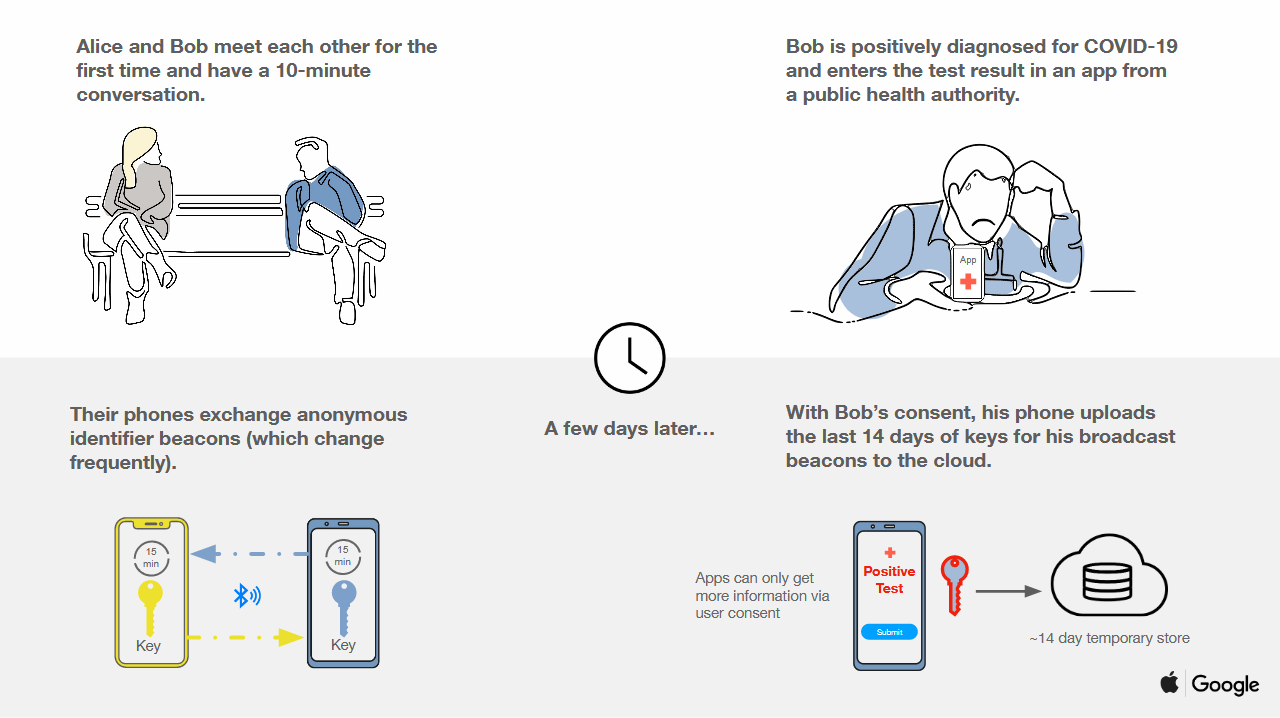

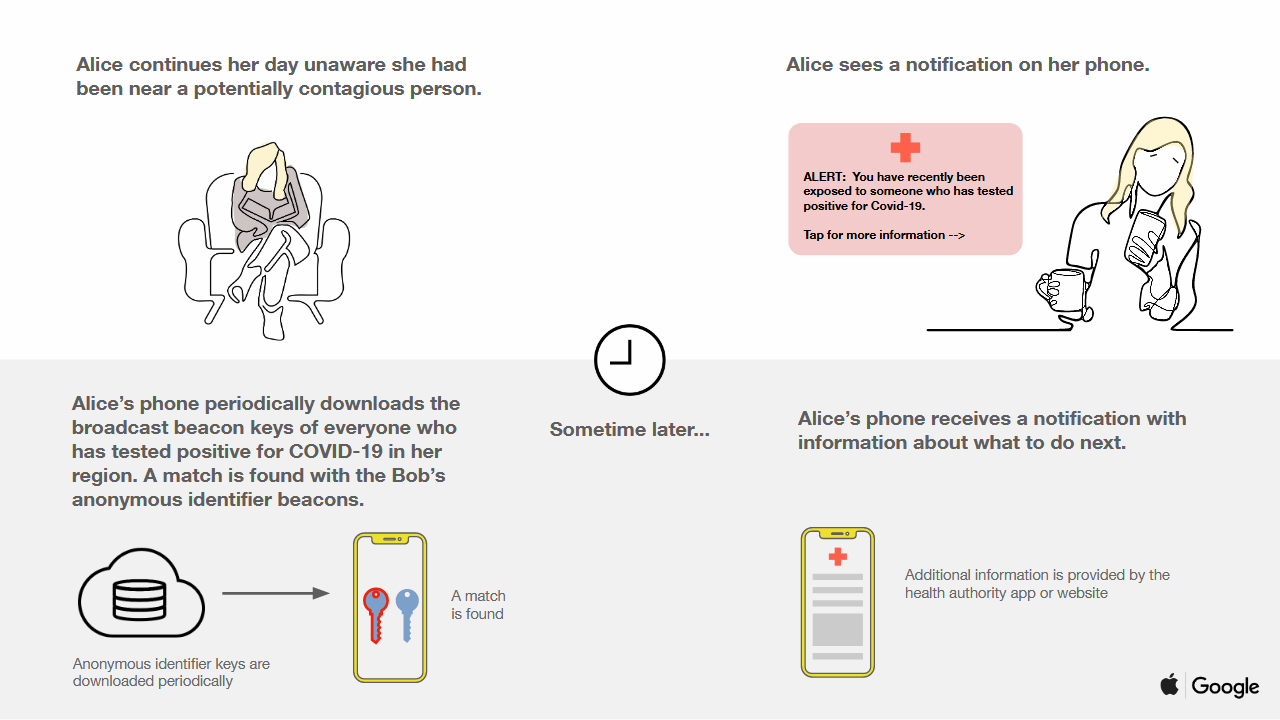

How does the Apple and Google collaboration differ? Here is the diagram from Google’s announcement.

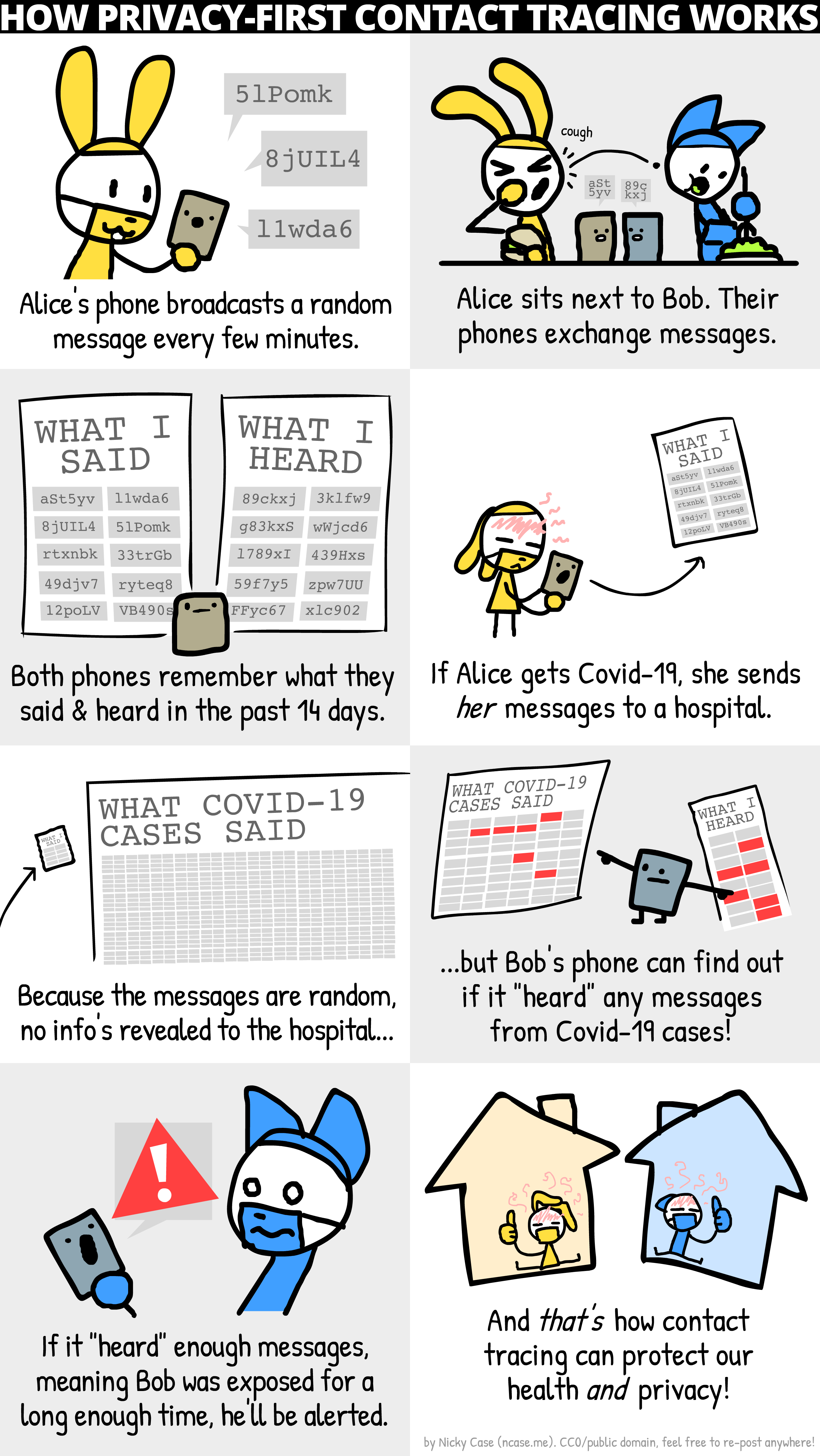

This is similar to the DP-3T protocol, which is briefly explained in this comic by Nicky Case.

- Each phone continually generates random keys, which it broadcasts as messages over Bluetooth to all nearby devices. These keys change every 5-15 minutes.

- Each device records the random messages it has heard from nearby devices.

- Whenever someone tests positive, they can elect to upload all the messages their phone has sent to a database. This upload requires the consent of both the user and a public health official.

- Apple’s and Google’s servers store a list of all messages sent by COVID-19 patients. They will be stored for 14 days, roughly the upper end of the virus’s incubation period.

- Periodically, every device will download the current database. It will then, on-device, compare that list to a locally saved list of messages received from nearby phones.

- If there is enough overlap, the user gets a message saying they were recently in contact with a COVID-19 case.
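The steps above can be sketched in a few lines of Python. This is a toy model of the flow as described in this post, not the actual Apple and Google implementation: the `Phone` and `Server` classes, the 16-byte message size, and the `MATCH_THRESHOLD` value are all assumptions made for illustration, and Bluetooth is replaced by a plain function call.

```python
import secrets

# Assumption: the post only says "enough overlap"; one match is used here.
MATCH_THRESHOLD = 1


class Phone:
    """Toy phone: broadcasts random messages and remembers what it hears."""

    def __init__(self):
        self.sent = []      # messages this phone has broadcast
        self.heard = set()  # messages received from nearby phones

    def broadcast(self):
        # A fresh random value each time, standing in for a key that
        # rotates every 5-15 minutes. 16 bytes is an illustrative size.
        message = secrets.token_bytes(16)
        self.sent.append(message)
        return message

    def receive(self, message):
        self.heard.add(message)

    def check_exposure(self, database):
        # The comparison happens on the device; self.heard is never uploaded.
        return len(self.heard & database) >= MATCH_THRESHOLD


class Server:
    """Toy server: stores messages sent by people who tested positive."""

    def __init__(self):
        self.database = set()

    def upload(self, messages, user_consents, official_consents):
        # Both the user and a public health official must agree.
        if not (user_consents and official_consents):
            return False
        self.database.update(messages)
        return True


alice, bob, carol = Phone(), Phone(), Phone()
server = Server()

alice.receive(bob.broadcast())  # Alice and Bob are near each other
carol.broadcast()               # Carol is somewhere else; nobody hears her

# Bob tests positive and uploads the messages his phone sent.
server.upload(bob.sent, user_consents=True, official_consents=True)

print(alice.check_exposure(server.database))  # True
print(carol.check_exposure(server.database))  # False
```

Notice that the server only ever sees random bytes, and each phone only ever compares random bytes against random bytes.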

What makes this secure? Since each phone’s message is random, and changes frequently, the messages on each phone don’t indicate anything about who those messages correspond to. Since the database is a pile of random messages, there’s no way to extract further information from the stored database, like age, gender, or street address. That protects people’s privacy from both users and the database’s owner.

The protocol minimizes privacy loss, but it does expose some information, since doing so is required to make contact tracing work. Suppose Alice only meets with Bob in a 14-day period. She later gets a notification that someone she interacted with tested positive for COVID-19. Given that Alice only met one person, she can easily conclude that Bob has COVID-19. However, in this scenario, Alice would be able to conclude this no matter how contact tracing is implemented. You can view this as a required information leak, and the aim of the protocol is to leak no more than the required amount. If Alice meets 10 people, then gets a notification, all she learns is that at least one of the 10 people she met is COVID-19 positive - which, again, is something that she could have concluded anyway.
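To make the Alice and Bob reasoning concrete, here is a small sketch that enumerates every situation consistent with a notification. It assumes a notification means "at least one of your contacts tested positive" and nothing more; the function names are made up for this example.

```python
from itertools import combinations


def consistent_worlds(contacts):
    """Every non-empty set of positive contacts that would trigger a notification."""
    people = sorted(set(contacts))
    return [
        set(group)
        for size in range(1, len(people) + 1)
        for group in combinations(people, size)
    ]


def certainly_positive(contacts):
    """People who are positive in every world consistent with the notification."""
    worlds = consistent_worlds(contacts)
    return set.intersection(*worlds) if worlds else set()


# Alice met only Bob: the notification pins it on him.
print(certainly_positive(["Bob"]))

# Alice met 10 people: nobody can be singled out with certainty.
ten_people = [f"person_{i}" for i in range(10)]
print(certainly_positive(ten_people))
```

With one contact, the only consistent world is "Bob is positive". With ten contacts there are 1023 consistent worlds, and no single person is positive in all of them.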

If implemented as stated, neither the hospital, nor Apple, nor Google should learn who’s been meeting who, and the users getting the notification shouldn’t learn who transmitted the disease to them.

What If Apple and Google Do Something Sketchy?

First, the simpler, less technical answer. So far, Apple and Google have publicized and announced their protocol ahead of time. Their press releases include a Bluetooth specification, a cryptography specification, and the API that both iOS and Android will support. This is standard practice if you want to do security right, because it lets external people audit the security. It also acts as an implicit contract - if they deviate from the spec, the Internet will bring down a firestorm of angry messages and broken trust. If you can count on anyone to do due diligence, it’s the cryptography nerds.

In short, if this was a sneaky power grab, they’re sure making it hard to be sneaky by readily giving out so much information.

Maybe there’s a backdoor in the protocol. I think that’s very unlikely. Remember, it’s basically the DP-3T protocol, which was designed entirely by academic security professors, not big tech companies. I haven’t had the time to verify they’re exactly identical, but on a skim they had the same security guarantees.

When people explain what could go wrong, they point out that although the app is opt-in, governments could keep people in lockdown unless they install the app, effectively making it mandatory. Do we really want big tech companies building such a wide-reaching system?

My answer is yes, absolutely, and if governments push for mandatory installs, then that’s fine too, as long as the app’s security isn’t compromised.

Look, you may be philosophically against large corporations accumulating power. I get it. Corporations have screwed over a lot of people. However, I don’t think contact tracing gives them much power they didn’t already have. And right now, the coronavirus is also screwing over a lot of people. It’s correct to temporarily suspend your principles, until the public health emergency is over. Contact tracing only works if it’s widespread. To make it widespread, you want the large reach of tech companies, because you need as many users as possible. (Similarly, you may hate Big Pharma, but Big Pharma is partnering with the CDC for COVID-19 test production, and at this time, they’re best equipped to produce the massive numbers of tests needed to detect COVID-19.)

NOVID is an existing contact tracing app, with similar privacy goals. It got a lot of traction in the math contest community, because it’s led by Po-Shen Loh. I thought NOVID was a great effort that got the ball rolling, but I never expected it to have any shot of reaching outside the math contest community. Its footprint is too small. Meanwhile, everyone knows who Apple and Google are. It’s much more likely they’ll get the adoption needed to make contact tracing effective. Both companies also have medical technology divisions, meaning they should have the knowledge to satisfy health regulations, and the connections to train public health authorities on how to use the app. These are all fixed costs, and the central lesson of fixed costs is that they’re easier to absorb when you have a large war chest.

Basically, if you want contact tracing to exist, but don’t want Apple or Google making it, then who do you want? The network effects and political leverage they have make them the most able to rapidly spread contact tracing. I’m not very optimistic about a decentralized solution, because (spoiler for next section) that opens you up to other issues. For a centralized solution, the only larger actor is the government, and if you don’t trust Apple or Google, you shouldn’t trust the government either.

Frankly, if you’re worried about privacy, both companies have plenty of easier avenues to get personal information, and based on the Snowden leaks, the US government knows this. I do think there’s some risk that governments will pressure Apple and Google to compromise the security for surveillance reasons, but I believe big tech companies have enough sway to resist governmental pressure, and will choose to resist if pushed.

What If Other Actors Do Something Sketchy on Top of Apple and Google’s Platform?

These are the most serious criticisms. I’ll defer to Moxie Marlinspike’s first reaction, because he created Signal, and has way more experience on how to break things.

These contact tracing apps all use Bluetooth, to enable nearby communication. A bunch of people who wouldn’t normally use Bluetooth are going to have it on. This opens them up to Bluetooth-based invasions of privacy. For example, a tracking company can place Bluetooth beacons in a hotspot of human activity. Each beacon registers the devices of people who walk past it. One beacon by itself doesn’t give much, but if you place enough of these beacons and aggregate their pings, you can start triangulating movements. In fact, if you do a search for “Bluetooth beacon”, one of the first results is a page from a marketing company explaining why advertisers should use Bluetooth beacons to run location-based ad campaigns. Meaning, these campaigns are happening already, and now those ads will work for a bunch more people.

Furthermore, it’s a pretty small leap to assume that advertisers will also install the contact tracing app on their devices. They’ll place them in a similar way to existing Bluetooth beacons, and bam, now they also know the rough frequency of COVID-19 contacts in a given area.

My feeling is that like before, these attacks could be executed on any contact tracing app. For contact tracing to be widespread, it needs to be silent, automatic, and work on existing hardware. That pretty much leaves Bluetooth, as far as I know, which makes these coordinated Bluetooth attacks possible. And once again, compared to stopping the start of another exponential growth in loss of life, I think this is acceptable.

Moxie notes that he expects location data to be incorporated at some point. If the app works as described, each day, the device needs to download the entire database, whose size depends on the number of new cases that day. At large scale, this could become 100s of MBs of downloads each day. To decrease download size, you’d want each phone to only download messages uploaded from a limited set of devices that includes all nearby devices…which is basically just location data, with extra steps.

I disagree that you’d need to do that. People already download MBs worth of data for YouTube, Netflix, and updates for their existing apps. If each device only downloads data when it’s on Wi-Fi and plugged in, then it should be okay. I’d also think that people would be highly motivated to start those downloads - without them, they don’t learn if they were close to anyone with COVID-19!
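For a rough sense of where a figure like hundreds of MB per day could come from, here is a back-of-envelope calculation. Every number in it is an assumption for illustration: each positive user uploads 14 days of messages, keys rotate every 10 minutes (the middle of the 5-15 minute range), each message is 16 bytes, and there are 10,000 new cases per day.

```python
def daily_download_bytes(
    new_cases_per_day,
    days_uploaded=14,       # assumption: each patient uploads 14 days of messages
    rotation_minutes=10,    # assumption: midpoint of the 5-15 minute range
    bytes_per_message=16,   # assumption: illustrative message size
):
    """Estimate the size of one day's worth of newly uploaded messages."""
    messages_per_case = days_uploaded * 24 * 60 // rotation_minutes
    return new_cases_per_day * messages_per_case * bytes_per_message


size = daily_download_bytes(10_000)  # hypothetical daily case count
print(f"{size:,} bytes, or about {size / 1e6:.0f} MB")
```

Under these assumptions, each patient contributes 2,016 messages, and the daily download comes to 322,560,000 bytes. Changing the rotation interval or the case count moves the estimate up or down proportionally.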

If users upload massive amounts of keys, they could trigger a DDoS-style attack by forcing gigabytes of downloads onto all users. If users declare they are COVID-19 positive when they aren’t, they could spread fake information through the contact tracing app. However, both of these should be unlikely, because uploads will require the sign-off of a doctor or public health authority.
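The public announcements don’t spell out how that sign-off would be checked, so the following is purely a sketch of one way it could work, not the actual design. It assumes the health authority and the server share a secret key, that the authority signs the exact set of messages being uploaded, and that the server caps upload size; `sign_upload`, `accept_upload`, and `max_messages` are hypothetical names.

```python
import hashlib
import hmac


def sign_upload(authority_key, messages):
    """Health authority side: sign the exact list of messages being uploaded."""
    digest = hashlib.sha256(b"".join(messages)).digest()
    return hmac.new(authority_key, digest, hashlib.sha256).digest()


def accept_upload(authority_key, messages, signature, max_messages=5000):
    """Server side: reject oversized uploads and uploads without a valid sign-off."""
    if len(messages) > max_messages:  # assumption: a hypothetical size cap
        return False
    expected = sign_upload(authority_key, messages)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, signature)


key = b"hypothetical-shared-secret"
messages = [bytes([i]) * 16 for i in range(3)]

good_signature = sign_upload(key, messages)
print(accept_upload(key, messages, good_signature))  # True
print(accept_upload(key, messages, b"\x00" * 32))    # False
```

The size cap addresses the flood of keys, and the signature check addresses fake positives, since a user can’t produce a valid signature without the authority’s key.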

This is why I’m not so optimistic about a decentralized alternative. To prevent abuse, you want a central authority. The natural choice for a central authority is the healthcare system. You’ll need hospitals to understand your contact tracing app, and that’s easiest if there’s a single app, rather than several…and now we’re back where we started.

Summary

Here are the takeaways.

Contact tracing is a key part of bringing things back to normal as fast as is safe, which is important for restarting the economy.

Of the existing contact tracing solutions, the collaboration between Apple and Google is currently the one I expect to get the largest adoption. They have the leverage, they have the network effects, and they have the brand name recognition to make it work.

For that solution, I expect that, while there will be some privacy loss, it’ll be close to the minimum amount of privacy loss required to make widespread contact tracing work - and that privacy loss is small, compared to what it prevents. And so far, they seem to be operating in good faith, with a public specification of what they plan to implement, which closely matches the academic consensus.

If contact tracing doesn’t happen - if it doesn’t get enough adoption, if people are too scared to use it, or something else - then given the current US response, I could see the worst forecasts of the Imperial College London report coming true: cycles of lockdown on, lockdown off, until a vaccine is ready. Their models are pessimistic, compared to other models, but it could happen. And I will be really, really, really mad if it does happen, because it will have been entirely preventable.

I originally posted this essay on Facebook, and got a lot of good feedback. Thanks to the six people who commented on points I missed.